Intuitive Virtual Objects Manipulation in Augmented Reality: Interaction between User’s Hand and Virtual Objects

- DOI

- 10.2991/jrnal.k.200221.003

- Keywords

- Augmented reality; virtual objects; occlusion problem; hidden surface removal

- Abstract

Augmented Reality (AR) is a technology that makes intuitive interaction between a user and virtual objects possible. In this paper, we focus on intuitive manipulation of virtual objects with bare hands. Specifically, we propose a method to deform, move, and join virtual objects by intuitive gestures. To manipulate virtual objects with bare hands, the position coordinates of the user’s fingers are acquired using the Leap Motion Controller, and Bullet Physics is used as the physics engine to represent the virtual objects. In AR, however, the three-dimensional (3D) model is superimposed afterward on the image of the real space, so it is always displayed in front of the user’s hand (the occlusion problem), and the user cannot manipulate it intuitively. We solve the occlusion problem by performing appropriate hidden surface removal. The results of a questionnaire survey support the intuitiveness of the interaction between the user and the virtual objects.

- Copyright

- © 2020 The Authors. Published by Atlantis Press SARL.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

Augmented Reality (AR) is a technique of superimposing information generated by a computer on perceptual information that we receive from real space. The combination of AR technology with various content creates new types of applications, and AR is used in many fields, including education, medicine and entertainment [1–3].

More recently, techniques for realizing interaction between a user and virtual objects in AR have garnered much attention, and these technologies are expected to be used in various applications in the future. However, most existing studies focus on interactions with rigid virtual objects. In this investigation, we aim to realize a flexible virtual object that can be deformed, much like clay, so that it can be used in a wider range of applications.

We first introduce existing work on manipulating virtual objects.

Kato et al. [4] introduced flexible-body virtual objects using Bullet Physics (a physics engine). By reflecting the action of crushing with the user’s bare hand on the virtual object in the virtual space, they realized deformation of virtual objects through interaction with the user.

Suzuki et al. [5] realized movement of rigid-body virtual objects through interaction between the user and the virtual objects. However, after a virtual object was deformed, it was not possible to move or rejoin it while maintaining its shape.

To the best of our knowledge, a continuous series of manipulations that deforms, moves, and joins virtual objects has not yet been realized.

2. PROPOSAL

In this paper, we aim to make the manipulation of virtual objects more intuitive by allowing a user to perform basic manipulations, such as creating, deforming, moving, and joining virtual objects.

We realize interaction between the user and the virtual objects by adopting the Leap Motion Controller, which is specialized for acquiring the three-dimensional (3D) coordinates of fingers, and by introducing flexible-body virtual objects using Bullet Physics. We also perform hidden surface removal so that the user can recognize the front-to-back relationship between the user’s hand and the virtual objects.
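The paper does not state how the hidden surface removal is implemented. As a rough illustration only, one common approach is a per-pixel depth comparison between the hand and the virtual object. The sketch below assumes that a depth value for the hand is available at each pixel, which is our assumption and not something the system description confirms; the function name and arguments are hypothetical.

```python
def composite_pixel(camera_rgb, virtual_rgb, hand_depth, virtual_depth):
    """Choose the color to display at one pixel.

    camera_rgb    -- color from the Web camera image (shows the real hand)
    virtual_rgb   -- color of the rendered virtual object, or None if the
                     virtual object does not cover this pixel
    hand_depth    -- assumed distance from the camera to the hand at this
                     pixel, or None if no hand is present there
    virtual_depth -- distance from the camera to the virtual object
    """
    if virtual_rgb is None:
        # No virtual object here: show the real image as-is.
        return camera_rgb
    if hand_depth is not None and hand_depth < virtual_depth:
        # The hand is nearer to the camera, so it hides the virtual object.
        return camera_rgb
    # Otherwise the virtual object is drawn over the real image.
    return virtual_rgb
```

With such a test, the virtual object is no longer always drawn in front of the hand, which is the behavior the occlusion problem describes.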

3. SYSTEM COMPONENT

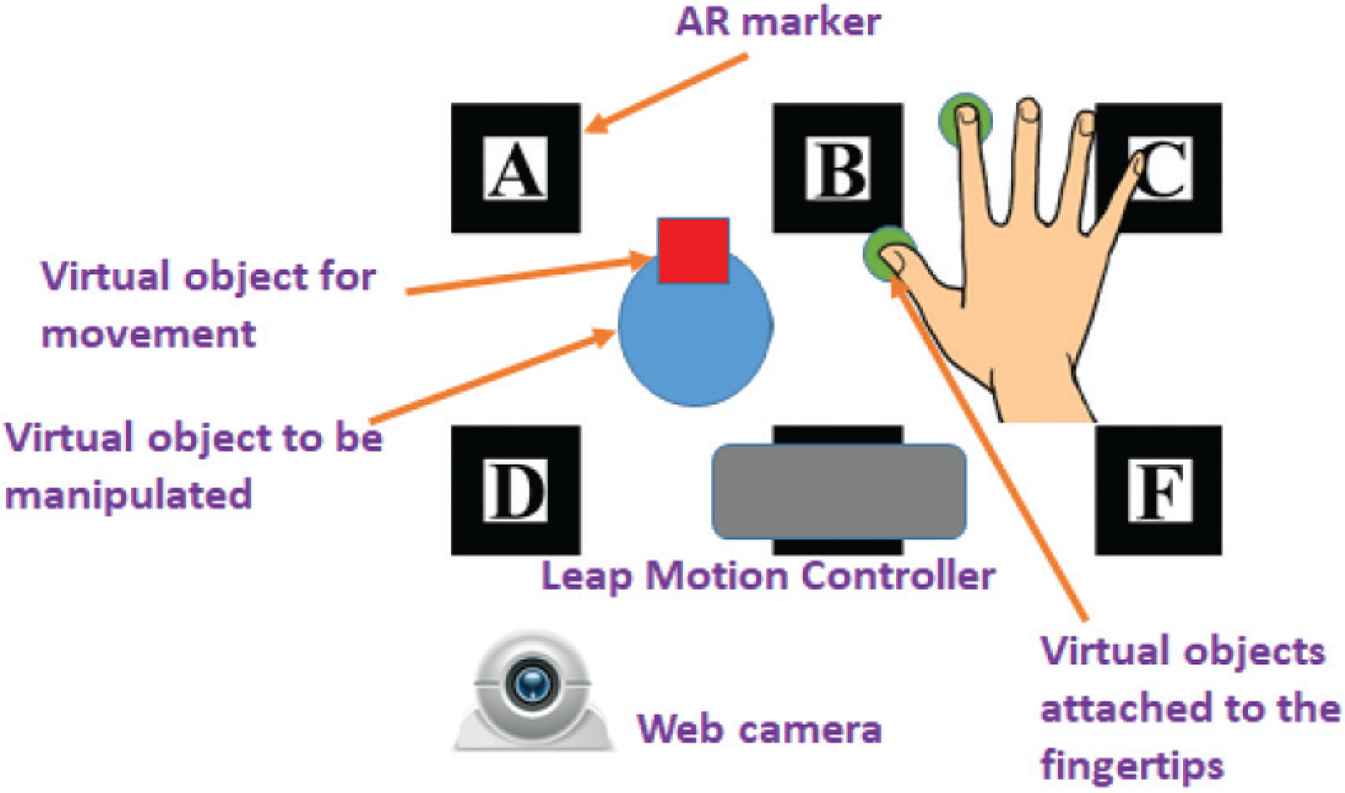

This system consists of a Web camera for real image acquisition and marker recognition, the Leap Motion Controller for obtaining the 3D coordinates of fingers, and a PC for performing arithmetic processing and video output. We use network programming to prevent interference between the Leap Motion Controller and the Web camera: the server side acquires the 3D coordinates of the fingers with the Leap Motion Controller, and the client side acquires images with the Web camera and performs the physics calculation and video output.
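The server/client split described above can be illustrated with a minimal sketch of how fingertip coordinates might be serialized for transfer. The wire format (six little-endian 32-bit floats for the thumb and index fingertips) and the function names are assumptions for illustration; the paper does not specify the protocol.

```python
import struct

# Hypothetical wire format: thumb (x, y, z) followed by index (x, y, z),
# each stored as a little-endian 32-bit float.
PACKET_FORMAT = "<6f"


def encode_fingertips(thumb, index):
    """Server side: pack two (x, y, z) tuples into a 24-byte payload."""
    return struct.pack(PACKET_FORMAT, *thumb, *index)


def decode_fingertips(payload):
    """Client side: unpack a payload back into two (x, y, z) tuples."""
    values = struct.unpack(PACKET_FORMAT, payload)
    return tuple(values[:3]), tuple(values[3:])


def send_fingertips(sock, address, thumb, index):
    """Send one packet over an already-created UDP socket (assumed transport)."""
    sock.sendto(encode_fingertips(thumb, index), address)
```

On the client side, each received payload would be decoded and the resulting coordinates passed to the physics calculation.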

As shown in Figure 1, we set up the Leap Motion Controller and a sheet of paper on which the AR marker is printed, and observe the paper with the Web camera. The virtual objects are displayed by recognizing the AR marker.

Figure 1. System component.

This system uses the following types of virtual objects:

- Virtual object to be manipulated: the virtual object that the user actually manipulates with bare hands.

- Virtual objects attached to the fingertips: virtual objects that follow the tips of the user’s thumb and index finger.

- Virtual object for movement: a virtual object that provides the position information of the “virtual object to be manipulated”.

Note that the “virtual objects attached to the fingertips” and the “virtual object for movement” are hidden from view.

4. MANIPULATION OF VIRTUAL OBJECTS BY GESTURE

In this section, we will explain the manipulation of virtual objects using bare hand gestures.

4.1. Generation of Virtual Object

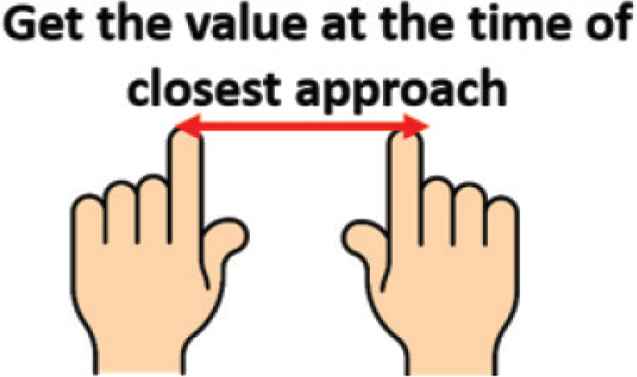

The user can generate a new “virtual object to be manipulated” of the desired size with a gesture using the index fingers of both hands (Figure 2).

Figure 2. Gesture for object generation.

The procedure is as follows.

1. The user holds the right index finger over the Leap Motion Controller, and the system acquires its 3D coordinates.

2. While keeping the state of Step 1, the user holds the left index finger over the Leap Motion Controller, and the system acquires its 3D coordinates.

3. The user moves the left index finger away from the Leap Motion Controller.

4. The system generates the object, determining the size of the “virtual object to be manipulated” from the value at the moment the two index fingers came closest to each other.
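The size determination in the last step can be sketched as taking the minimum distance observed between the two index fingertips while both are tracked. This is a minimal illustration; the function name is hypothetical, and how the resulting value is mapped to the dimensions of the generated object is not specified in the paper.

```python
import math


def closest_index_finger_distance(samples):
    """Return the smallest distance between the two index fingertips.

    samples -- sequence of (right_index, left_index) pairs, each an
               (x, y, z) tuple, recorded while both fingers are tracked
               (Steps 2 and 3 of the procedure).
    """
    distances = [math.dist(right, left) for right, left in samples]
    if not distances:
        raise ValueError("no frames with both index fingers were recorded")
    return min(distances)
```

For example, if the fingertips were 5, 2, and 6 units apart over three frames, the value used for the object size would be 2.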

4.2. Deformation and Movement of the Virtual Object

It is difficult to distinguish whether the user wants to grasp (deform) or move the virtual object. Therefore, this system deforms the virtual object when the user’s palm faces downward and moves it when the palm faces upward.

This system decides that the palm faces upward when the user’s little finger, ring finger, middle finger, index finger, and thumb line up in that order from the left of the Leap Motion Controller.
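The palm-orientation rule above can be sketched as a check on the left-to-right order of the fingertips. The sketch assumes that each fingertip has an x coordinate that increases toward the right of the Leap Motion Controller; the dictionary keys and function names are hypothetical.

```python
# Order of the right-hand fingers, from the left of the controller,
# that the system interprets as "palm upward".
PALM_UP_ORDER = ("little", "ring", "middle", "index", "thumb")


def is_palm_up(finger_x):
    """finger_x maps a finger name to its x coordinate (larger = further right)."""
    xs = [finger_x[name] for name in PALM_UP_ORDER]
    # Palm is upward only if the coordinates strictly increase in this order.
    return all(a < b for a, b in zip(xs, xs[1:]))


def select_mode(finger_x):
    """Palm upward selects movement; otherwise the system deforms."""
    return "move" if is_palm_up(finger_x) else "deform"
```

Any other ordering, including the mirrored order seen when the palm faces downward, falls through to deformation in this sketch.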

The system performs deformation and movement manipulations on virtual objects with the right hand only.

To deform, the user turns the palm downward and squeezes the “virtual object to be manipulated” with the thumb and index finger. The “virtual objects attached to the fingertips” then collide with the “virtual object to be manipulated”, and the latter is deformed.

To move, the user turns the palm upward and moves the hand near the virtual object, which moves the “virtual object for movement” that holds the representative coordinates of the “virtual object to be manipulated”. Since the “virtual object for movement” is attached to the “virtual object to be manipulated” in advance, moving the former moves the latter.

When two virtual objects have been generated, this system computes the Euclidean distance between the center of the hand and the “virtual object for movement” of each of the two virtual objects and selects the closer “virtual object for movement” as the moving target.
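The target selection can be sketched as a nearest-neighbor choice by Euclidean distance. The identifiers and the dictionary layout below are hypothetical and serve only to illustrate the rule.

```python
import math


def select_move_target(hand_center, move_objects):
    """Return the id of the "virtual object for movement" nearest the hand.

    hand_center  -- (x, y, z) of the center of the hand
    move_objects -- dict mapping an object id to the (x, y, z) position of
                    its "virtual object for movement"
    """
    return min(
        move_objects,
        key=lambda obj_id: math.dist(hand_center, move_objects[obj_id]),
    )
```

The same function extends to more than two objects, although the system described here handles the case of two.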

4.3. Joining of the Virtual Objects

The user can join two virtual objects by bringing one “virtual object to be manipulated” closer to the other “virtual object to be manipulated”.

The procedure is described below.

1. The user brings one “virtual object to be manipulated” closer to the other “virtual object to be manipulated”.

2. Since this system does not perform collision determination between “virtual objects to be manipulated”, the user can bring the two “virtual objects to be manipulated” close enough to overlap each other.

3. When the two “virtual objects to be manipulated” come close enough to overlap, the two virtual objects are joined.
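The paper does not say how overlap is detected. As one possible illustration, the sketch below approximates each “virtual object to be manipulated” by a bounding sphere and treats the objects as overlapping when the distance between their centers is smaller than the sum of the radii. The bounding-sphere approximation and the function name are assumptions.

```python
import math


def should_join(center_a, radius_a, center_b, radius_b):
    """Return True when two bounding spheres overlap.

    Each object is approximated by a sphere (an assumption made for this
    sketch); overlap means the centers are closer than the summed radii.
    """
    return math.dist(center_a, center_b) < radius_a + radius_b
```

Because no collision determination is performed between the two objects, nothing pushes them apart before this condition becomes true.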

4.4. Movement after Joining the Virtual Objects

The user can move the joined virtual object. The procedure is described as follows:

1. The user performs the “movement of a virtual object” gesture near the joined virtual object.

2. Each of the two “virtual objects for movement” moves according to the user’s finger coordinates.

3. As a result, the “virtual objects to be manipulated” can be moved while remaining joined.
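One way to read the steps above is that both “virtual objects for movement” receive the same displacement as the hand, so their relative offset is preserved. The sketch below illustrates this reading; applying an identical translation to both objects is our assumption, and the function name is hypothetical.

```python
def move_joined(move_positions, previous_hand, current_hand):
    """Translate every "virtual object for movement" by the hand displacement.

    move_positions -- list of (x, y, z) positions, one per joined object
    previous_hand  -- (x, y, z) of the hand in the previous frame
    current_hand   -- (x, y, z) of the hand in the current frame
    """
    delta = tuple(c - p for c, p in zip(current_hand, previous_hand))
    return [
        tuple(coord + d for coord, d in zip(position, delta))
        for position in move_positions
    ]
```

Since the same displacement is added to each position, the distance between the two objects does not change, which keeps them in the joined state.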

5. EXECUTION RESULT

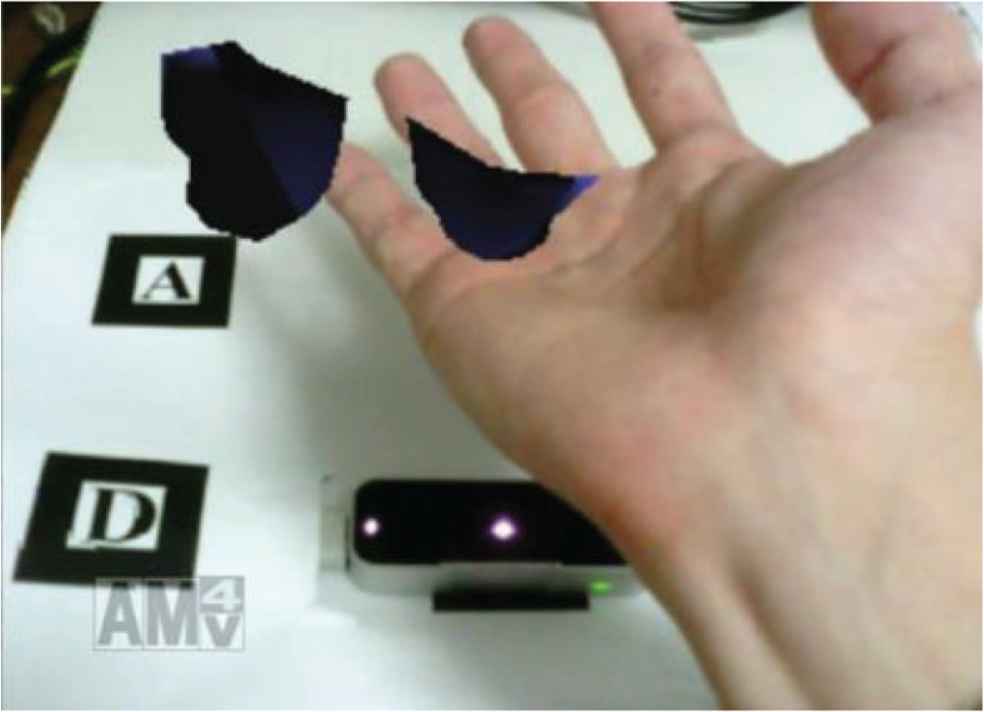

The execution results of this system are shown in Figures 3–6.

Figure 3. Generate.

Figure 4. Deform.

Figure 5. Move.

Figure 6. Join and move.

As depicted in Figure 3, we confirmed that a “virtual object to be manipulated” is newly generated by the user’s generation gesture.

Figure 4 shows a deformation manipulation of the virtual object. We confirmed that the virtual object can be deformed by crushing it with the user’s thumb and index finger.

Figure 5 shows a movement manipulation of the virtual object. The virtual object on the right side of the figure is moved to the left side, and the two virtual objects are then joined.

Figure 6 shows a movement manipulation of the virtual objects after joining. We confirmed that the two virtual objects move together while remaining joined.

6. EVALUATION

We conducted a questionnaire survey to evaluate whether the user can intuitively perform a series of tasks such as creation, deformation, movement, and joining of virtual objects, and what problems arise in performing these tasks.

6.1. Outline of Experiment

We asked six university students, as subjects, to perform the basic manipulations on virtual objects shown in Figures 3–6.

6.2. Evaluation Method

After trying this system, the subjects answered survey items asking for the “good point”, “difficult point in manipulation”, and “improvement point” of the system.

6.3. Experiment Result

The results from the survey questionnaire are as follows.

As for “good point”, there were opinions such as “manipulation method is intuitive and easy to understand”, and “movements of the hands were reflected in the system”.

In response to “difficult points in manipulation”, some respondents indicated that “it is difficult to join two virtual objects”, “it is difficult to grasp the sense of distance with the virtual object”, and “when the hand is manipulated at a low position from the desk, the virtual object does not react in user’s gesture”.

As for “improvement point”, some respondents indicated “I want feedback when crushing virtual objects”, “I want functions that can be transformed into arbitrary figures, such as cones and rectangular parallelepipeds by gesture”, and “I want a manipulation function to cut off virtual objects”.

6.4. Discussion

From the “good point” responses, the study confirms that gesture-based interaction with the virtual object is intuitive. Therefore, we believe that it is possible to reproduce on virtual objects physical phenomena from real space, such as deforming an object by hand.

Next, we considered the “difficult point in manipulation”. One response was that “it is difficult to grasp the sense of distance with the virtual object.” A possible reason is that, when the user manipulated the virtual object, an error occurred due to a delay in the finger position information acquired with the Leap Motion Controller, and the user felt a sense of incongruity.

The subjects may have responded that “it is difficult to join two virtual objects” because they could not always bring the virtual objects together as intended. When a user tries to join the two virtual objects by bringing them within a certain distance, “it becomes difficult to grasp the sense of distance with the virtual object”. Specifically, an error occurs between the position of the finger in real space and the position of the finger in virtual space.

As for the response that “when the hand is manipulated at a low position from the desk, the virtual object does not react in user’s gesture”, we consider the likely reason to be that, when the hand is in a low position, it covers the Leap Motion Controller and the coordinates of the fingertips cannot be acquired.

7. CONCLUSION

In this study, we aimed at more intuitive manipulation of virtual objects by users through gestures, by acquiring the 3D coordinates of the user’s fingers with the Leap Motion Controller and introducing flexible-body virtual objects using Bullet Physics.

In this exploratory experiment, we asked six subjects to perform the basic manipulations on the virtual objects and gathered data using a survey questionnaire. The data from the experiment support the view that this system can seamlessly perform a series of operations, such as generating, deforming, moving, and joining virtual objects, and that users can interact intuitively with the virtual objects.

Future research should address the issues raised under “difficult point in manipulation” and add the functions requested under “improvement point,” so that users can interact with virtual objects more intuitively. Furthermore, we think that it is necessary to increase the precision of interaction, for example by enabling finer deformation of virtual objects.

CONFLICTS OF INTEREST

The authors declare they have no conflicts of interest.

AUTHORS INTRODUCTION

Dr. Makoto Sakamoto

He received the PhD degree in computer science and systems engineering from Yamaguchi University. He is presently an associate professor in the Faculty of Engineering, University of Miyazaki. He is a theoretical computer scientist, and his current main research interests are automata theory, languages and computation. He is also interested in digital geometry, digital image processing, computer vision, computer graphics, virtual reality, augmented reality, entertainment computing, complex systems and so on.

Mr. Takahiro Ishizu

He is a master’s student in the Department of Computer Science and System Engineering, University of Miyazaki. His current research interests are computer graphics and image processing.

Mr. Masamichi Hori

He received a master of engineering degree from the Department of Computer Science and System Engineering, University of Miyazaki. He is working for NEC Corporation.

Dr. Satoshi Ikeda

He received a PhD degree from Hiroshima University. He is an associate professor in the Faculty of Engineering, University of Miyazaki. His research interests include graph theory, probabilistic algorithms, fractal geometry and measure theory.

Prof. Amane Takei

He is an associate professor in the Department of Electrical and Systems Engineering, University of Miyazaki, Japan. His research interests include high performance computing for computational electromagnetism, iterative methods for the solution of sparse linear systems, and domain decomposition methods for large-scale problems. He is a member of IEEE, an expert advisor of The Institute of Electronics, Information and Communication Engineers (IEICE), a delegate of the Kyushu branch of the Institute of Electrical Engineers of Japan (IEEJ), and a director of the Japan Society for Simulation Technology (JSST).

Dr. Takao Ito

He is a Professor of Management of Technology (MOT) in Graduate School of Engineering at Hiroshima University. He is serving concurrently as Professor of Harbin Institute of Technology (Weihai) China. He has published numerous papers in refereed journals and proceedings, particularly in the area of management science, and computer science. He has published more than eight academic books including a book on Network Organizations and Information (Japanese Edition). His current research interests include automata theory, artificial intelligence, systems control, quantitative analysis of inter-firm relationships using graph theory, and engineering approach of organizational structures using complex systems theory.

REFERENCES

Cite this article

TY - JOUR
AU - Makoto Sakamoto
AU - Takahiro Ishizu
AU - Masamichi Hori
AU - Satoshi Ikeda
AU - Amane Takei
AU - Takao Ito
PY - 2020
DA - 2020/02/26
TI - Intuitive Virtual Objects Manipulation in Augmented Reality: Interaction between User’s Hand and Virtual Objects
JO - Journal of Robotics, Networking and Artificial Life
SP - 265
EP - 269
VL - 6
IS - 4
SN - 2352-6386
UR - https://doi.org/10.2991/jrnal.k.200221.003
DO - 10.2991/jrnal.k.200221.003
ID - Sakamoto2020
ER -