Detecting COVID-19 Patients in X-Ray Images Based on MAI-Nets

Wei Wang, Xiao Huang1, Ji Li1, Peng Zhang2,*, Xin Wang1,*

- DOI

- 10.2991/ijcis.d.210518.001

- Keywords

- COVID-19; Deep learning; MAI-Net; Convolutional neural network; Chest X-ray images

- Abstract

COVID-19 is an infectious disease caused by the SARS-CoV-2 virus. Early classification of COVID-19 is essential for disease treatment and control. Reverse transcription-polymerase chain reaction (RT-PCR) is widely used to detect COVID-19. However, its high cost, long turnaround time and low sensitivity significantly reduce diagnostic efficiency and make COVID-19 harder to diagnose. Because X-ray images of COVID-19 patients have high inter-class similarity and low intra-class variability, we designed a multi attention interaction enhancement (MAIE) module and, based on it, proposed a new convolutional neural network, MAI-Net. As a lightweight network, MAI-Net has fewer layers and fewer parameters than other network models, enabling more efficient detection of COVID-19. To verify its performance, we conducted comparison experiments with MAI-Net on two open-source datasets. The experimental results show that its overall accuracy and COVID-19 category accuracy are 96.42% and 100%, respectively, and its COVID-19 sensitivity is 99.02%. Considering accuracy, number of parameters and computational cost together, MAI-Net is more practical. Compared with existing work, MAI-Net has a simpler network structure and lower hardware requirements, so it is better suited to ordinary equipment.

- Copyright

- © 2021 The Authors. Published by Atlantis Press B.V.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

COVID-19, caused by the SARS-CoV-2 virus, has spread worldwide, affecting public health and the economy in many countries and regions. As of December 15, 2020, more than 73 million people had been infected. COVID-19 has a high infection rate, and the number of patients has increased dramatically over time, putting enormous pressure on the medical staff who diagnose and treat it. Diagnosing COVID-19 patients quickly and accurately has therefore become a priority for medical staff.

The current standard techniques for detecting COVID-19 are RT-PCR, chest X-ray imaging and computed tomography (CT). RT-PCR has the advantage of simplicity and was used in most areas during the initial COVID-19 outbreak [1]. However, its sensitivity for COVID-19 detection is limited, and it places high demands on the laboratory environment, which significantly reduces diagnostic efficiency and makes COVID-19 harder to diagnose. To address these problems, medical professionals have increasingly attempted to diagnose patients from radiographic findings [2]. CT and X-ray examination play an essential role in the early diagnosis and treatment of this disease [3–7]. Using the features of radiological images can help detect COVID-19 as early as possible, enabling timely screening of suspected cases to confirm the diagnosis.

As a research direction in artificial intelligence, deep learning has become one of the most popular research fields [8–12]. By applying deep learning to medical imaging, researchers have achieved remarkable results in diagnosing various diseases. In diagnosing COVID-19, the main advantage of AI technology is its ability to segment the lungs and infected areas in medical images. Deep learning can analyze input data without manually extracted features [13]. Compared with the traditional imaging workflow, which depends heavily on manual operation, artificial intelligence can provide a more accurate and efficient image-processing scheme with objective analysis results for clinical diagnosis. Therefore, deep learning technology is expected to replace RT-PCR in the initial screening of suspected patients and speed up diagnosis [14–16].

Once infected with COVID-19, most patients develop common complications such as lung infection or pneumonia, which can be diagnosed using imaging techniques. At present, CT and X-ray are the mainstream imaging techniques. Compared with CT, X-ray imaging has lower cost and causes less radiation damage. Delineating lung infection manually is tedious and time-consuming, and it is highly subjective, so it is more susceptible to personal factors than computer-based analysis. Therefore, applying deep learning to medical imaging provides a new, feasible direction for the diagnosis of COVID-19.

In recent years, deep learning methods have been used to automatically detect many different diseases and lesions, such as determining the type and volume of lung, breast, liver, head and brain tumors [17,18], and automatically detecting lung disease [19–21]. Xue et al. [22] used a generative adversarial network (GAN) to study the relationship between binary brain tumor segmentation and brain magnetic resonance imaging (MRI) images. Fatih Ozyurt et al. [23] proposed a new neural network for detecting brain tumors in high-resolution brain MRI. Wang et al. [24] proposed a neural network model for detecting colon polyps. These models have achieved excellent results, reflecting the achievements of deep learning in the medical field.

Since the outbreak of COVID-19, many researchers have studied its detection. Wang and Wong [25] proposed a deep COVID-19 detection model (COVID-Net), which achieved 92.4% accuracy in classifying normal, non-COVID-19 pneumonia and COVID-19 images. The model constructed by Harsh Panwar et al. [26] on the basis of VGG-16 addressed the imbalanced X-ray image datasets available in the early stage of the epidemic and applied transfer learning to detect COVID-19 rapidly. CoroNet, proposed by Asif Iqbal Khan et al. [27], is based on Xception-71 and reached a classification accuracy of 95% for COVID-19 detection. Ali Abbasian Ardakani et al. [28] used 10 well-known convolutional neural networks (CNNs) to distinguish COVID-19 patients from non-COVID-19 patients and summarized the characteristics of these networks. The CFW-NET proposed by Wang et al. [29] achieved an overall accuracy of 94.35% for COVID-19 detection.

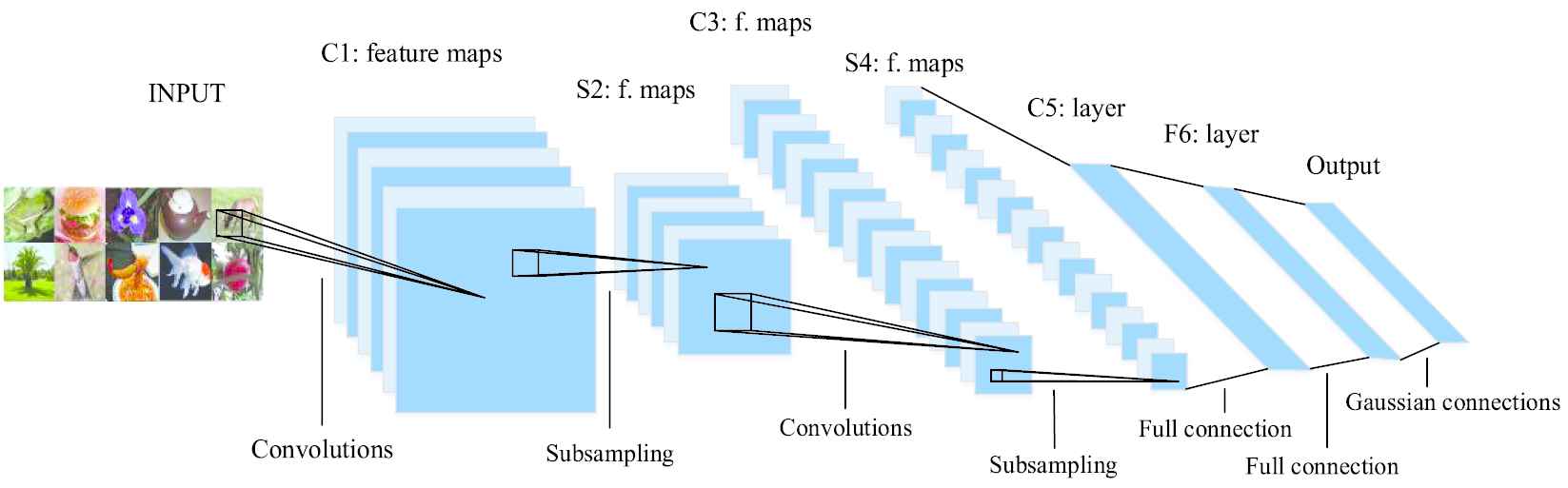

2. CONVOLUTIONAL NEURAL NETWORKS

The basic structure of a deep CNN is shown in Figure 1. In recent years, CNNs have developed rapidly in computer vision. Yann LeCun et al. [30] systematically reviewed CNNs and analyzed their applications and development in detail.

The basic structure of a convolutional neural network.

In 2014, Simonyan and Zisserman [31] proposed the Visual Geometry Group networks (VGG-Nets), which introduced the idea of building a network from repeated basic modules rather than individually designed layers; these modules can be reused to construct deep network models. In the same year, Christian Szegedy et al. proposed a new deep learning model, GoogLeNet [32], whose Inception module improves the utilization of computing resources within the network and alleviates overfitting to some extent. In 2015, Kaiming He et al. [33] proposed the residual network (ResNet). Rather than simply stacking layers, ResNet introduced residual units to solve the problem of network degradation. In 2017, Huang et al. [34] proposed the Dense Convolutional Network (DenseNet), whose dense connection mechanism alleviates the vanishing-gradient problem and reduces the number of network parameters.

3. PROPOSED MODULE AND NETWORK

3.1. MAIE Module

The X-ray images of COVID-19 patients show patchy shadows or interstitial changes, faint density with blurred edges, and ground-glass opacity [35]. In addition, because chest X-ray images have high inter-class similarity and low intra-class variance, they can cause model bias and overfitting and reduce the performance and generalization of the model [36]. To address these characteristics of COVID-19 X-ray images, we designed a multi attention interaction enhancement (MAIE) module. Through multi-channel information interaction, the MAIE module strengthens attention and improves the model's ability to recognize COVID-19.

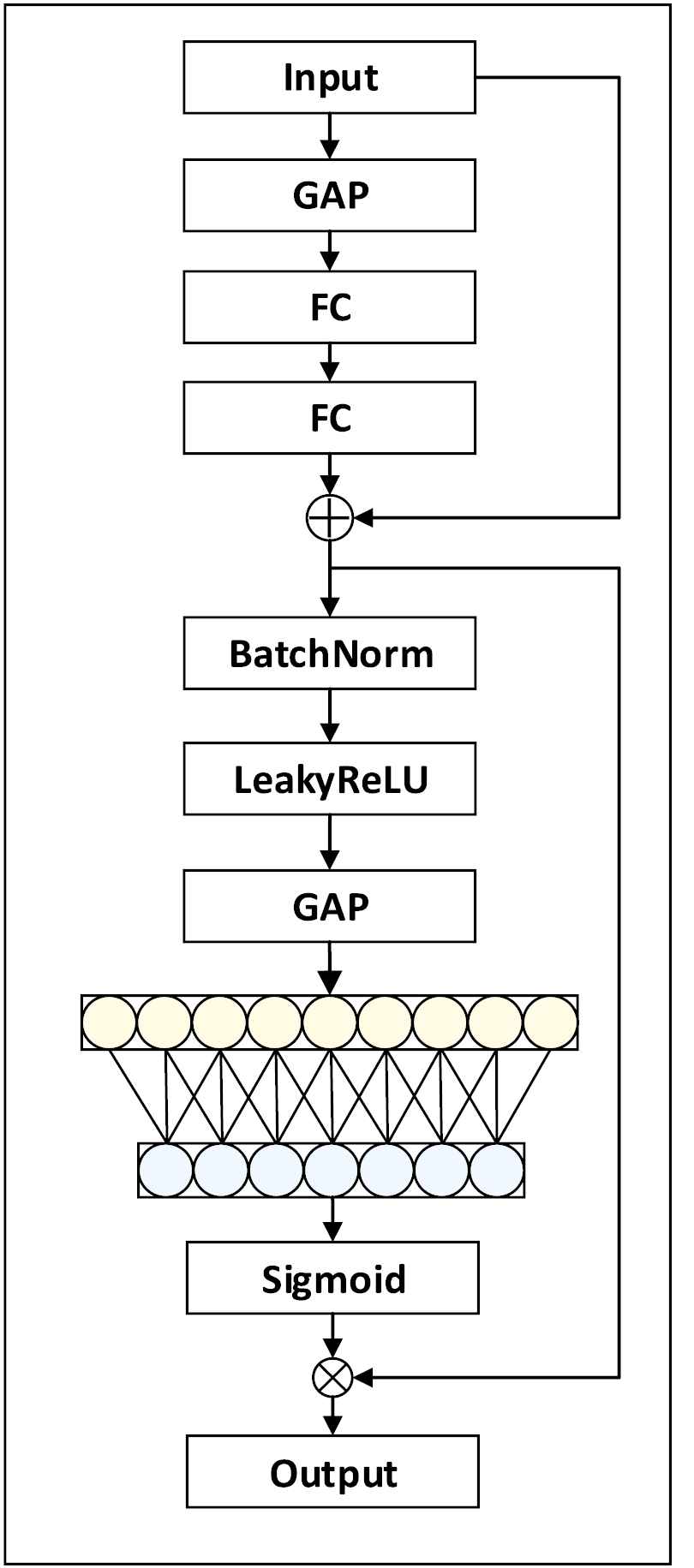

The structure of MAIE is shown in Figure 2, where FC denotes a fully connected layer, Conv a 2 × 2 convolutional layer and GAP a global average pooling layer. The MAIE module is a composite structure that contains pointwise convolution, fully connected layers, batch normalization and activation functions. The skip connection alleviates network degradation to some extent.

Structure of MAIE.

The MAIE module first passes the input feature map through a GAP layer, which compresses each channel's feature map into a single global feature, and then through two FC layers: the first reduces the dimensionality and the second restores it. In this way, the MAIE module learns a weight coefficient for each channel. During feature extraction, these weights help the module emphasize important channel features, suppress unimportant ones and strengthen the feature extraction ability of the network. BatchNorm and LeakyReLU are then applied to help avoid vanishing gradients.
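The GAP → FC (reduce) → FC (restore) path described above appears to resemble a squeeze-and-excitation channel gate. The minimal pure-Python sketch below illustrates that idea on a toy feature map; it is not the authors' implementation. The reduction ratio, the ReLU between the two FC layers and the sigmoid gate are assumptions borrowed from squeeze-and-excitation networks (the text does not specify them), and the BatchNorm and LeakyReLU steps are omitted for brevity.

```python
import math


def global_avg_pool(fmap):
    """Average each channel's H x W map to a single value (one per channel)."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]


def dense(x, weights, bias):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def channel_reweight(fmap, w1, b1, w2, b2):
    """GAP -> FC (C to C/r) -> ReLU -> FC (C/r to C) -> sigmoid -> rescale channels.

    The ReLU and sigmoid are assumptions taken from squeeze-and-excitation
    networks; BatchNorm and LeakyReLU from the MAIE description are omitted.
    """
    z = global_avg_pool(fmap)                                    # length C
    h = [max(0.0, v) for v in dense(z, w1, b1)]                  # length C / r
    s = [1.0 / (1.0 + math.exp(-v)) for v in dense(h, w2, b2)]  # C weights in (0, 1)
    scaled = [[[s[c] * v for v in row] for row in ch] for c, ch in enumerate(fmap)]
    return scaled, s


# Toy input: C = 4 channels of 2 x 2, hypothetical reduction ratio r = 2.
fmap = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[2.0, 2.0], [2.0, 2.0]],
    [[0.0, 0.0], [0.0, 0.0]],
    [[4.0, 0.0], [0.0, 0.0]],
]
w1, b1 = [[0.5, 0.5, 0.0, 0.0], [0.0, 0.0, 0.5, 0.5]], [0.0, 0.0]
w2, b2 = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]], [0.0] * 4
scaled, weights = channel_reweight(fmap, w1, b1, w2, b2)
print([round(w, 3) for w in weights])
```

Each channel keeps its spatial layout and is only multiplied by its learned weight, so channels whose global statistics the FC layers favor are emphasized and the others are suppressed.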

To capture the interaction between each channel and its adjacent channels, a second GAP compresses the feature map into per-channel global features, and the global features of adjacent channels are combined with learned weights. In this way, the MAIE module realizes local information interaction among neighboring channels. This operation can be implemented efficiently by a 1D convolution with kernel size k, where k is the number of channels participating in each interaction. As a result, the MAIE module adds only a handful of parameters while improving network performance.
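The local cross-channel interaction can be sketched as a 1D convolution that slides a kernel of size k along the vector of per-channel GAP values, similar in spirit to efficient channel attention (ECA). This is an illustration under assumptions: zero padding, an odd k and a sigmoid that turns the mixed values into channel weights are our choices, and the function names are hypothetical.

```python
import math


def local_channel_interaction(descriptors, kernel):
    """Slide a shared 1D kernel of odd size k over the per-channel GAP values.

    Zero padding keeps one output per channel; each output mixes a channel
    with its k - 1 nearest neighbours.
    """
    k = len(kernel)
    if k % 2 == 0:
        raise ValueError("use an odd kernel size so each channel sits at the centre")
    pad = k // 2
    padded = [0.0] * pad + list(descriptors) + [0.0] * pad
    return [
        sum(w * v for w, v in zip(kernel, padded[c:c + k]))
        for c in range(len(descriptors))
    ]


def channel_weights(descriptors, kernel):
    """Map the mixed descriptors to (0, 1) with a sigmoid (an assumed gate)."""
    return [1.0 / (1.0 + math.exp(-v)) for v in local_channel_interaction(descriptors, kernel)]


gap_values = [1.0, 2.0, 3.0, 4.0]  # toy GAP output for C = 4 channels
print(local_channel_interaction(gap_values, [1.0, 1.0, 1.0]))  # [3.0, 6.0, 9.0, 7.0]
print(channel_weights(gap_values, [0.2, 0.5, 0.2]))
```

Because the same k weights are shared by every channel, this step adds only k parameters regardless of the number of channels, which is consistent with the statement that MAIE involves only a handful of parameters.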

3.2. MAI-Net

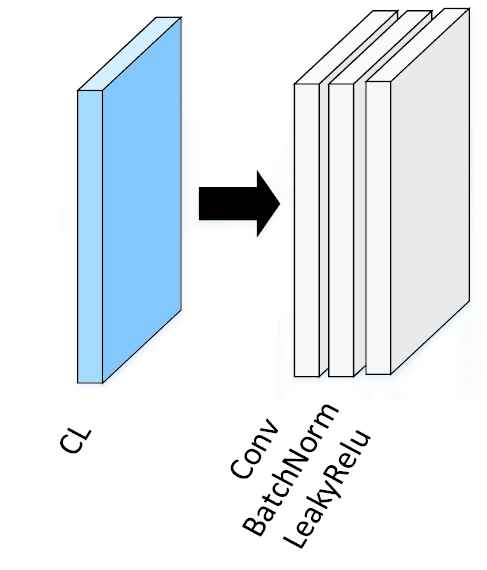

Based on the MAIE module, we propose a new CNN structure, MAI-Net. In MAI-Net, the CL block (Figure 3) consists of a convolutional layer, BatchNorm and LeakyReLU. By normalizing each mini-batch of inputs, the CL block reduces training time and improves model stability. Compared with some classic networks, MAI-Net has a simpler network structure and fewer parameters.
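To make the normalization step of a CL block concrete, the sketch below applies training-mode batch normalization followed by LeakyReLU to one channel's convolution outputs across a mini-batch. The convolution itself is omitted, and the negative slope of 0.01 and ε = 1e-5 are assumed values (common framework defaults), not values given in the text.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization of one channel over the mini-batch."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)  # biased batch variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


def leaky_relu(v, negative_slope=0.01):
    """Pass positive values through; scale negative values by a small slope."""
    return v if v >= 0 else negative_slope * v


def cl_after_conv(channel_outputs):
    """BatchNorm then LeakyReLU, as in a CL block (the convolution is omitted)."""
    return [leaky_relu(v) for v in batch_norm(channel_outputs)]


# One channel's convolution outputs for a hypothetical mini-batch of 4 images.
conv_out = [1.0, 2.0, 3.0, 4.0]
print([round(v, 4) for v in cl_after_conv(conv_out)])
```

After normalization the channel has roughly zero mean and unit variance within the batch, and LeakyReLU keeps a small gradient for negative inputs instead of zeroing them.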

Structure of CL.

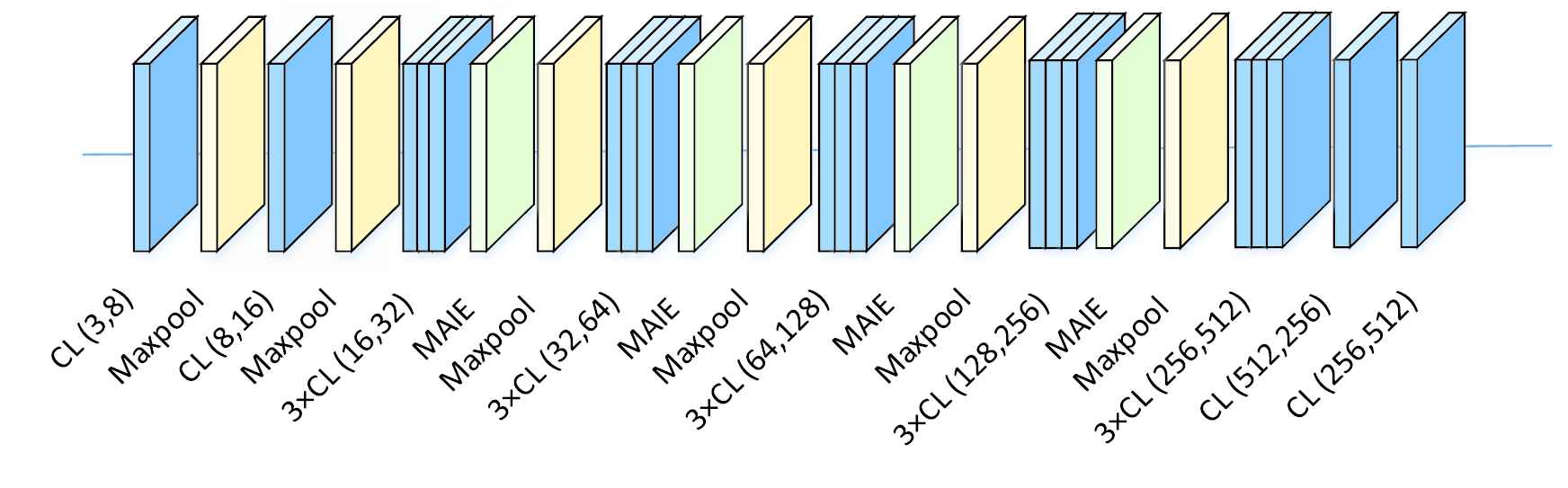

To explore how depth affects model performance, we propose three deep CNN structures, MAI-Net16, MAI-Net19 and MAI-Net22, as shown in Table 1. The structure of MAI-Net19 is shown in Figure 4.

| MAI-Net16 | MAI-Net19 | MAI-Net22 |
|---|---|---|
| CL (3, 8) | CL (3, 8) | CL (3, 8) |
| Maxpool | Maxpool | Maxpool |
| CL (8, 16) | CL (8, 16) | CL (8, 16) |
| Maxpool | Maxpool | Maxpool |
| CL (16, 32) ×3 | CL (16, 32) ×3 | CL (16, 32) ×3 |
| MAIE | MAIE | MAIE |
| Maxpool | Maxpool | Maxpool |
| CL (32, 64) ×3 | CL (32, 64) ×3 | CL (32, 64) ×3 |
| MAIE | MAIE | MAIE |
| Maxpool | Maxpool | Maxpool |
| CL (64, 128) ×3 | CL (64, 128) ×3 | CL (64, 128) ×3 |
| MAIE | MAIE | MAIE |
| Maxpool | Maxpool | Maxpool |
| / | CL (128, 256) ×3 | CL (128, 256) ×3 |
| / | MAIE | MAIE |
| / | Maxpool | Maxpool |
| / | / | CL (256, 512) ×3 |
| / | / | MAIE |
| / | / | Maxpool |
| CL (128, 256) ×3 | CL (256, 512) ×3 | CL (512, 1024) ×3 |
| CL (256, 128) | CL (512, 256) | CL (1024, 512) |
| CL (128, 256) | CL (256, 512) | CL (512, 1024) |
| Classifier, Softmax | Classifier, Softmax | Classifier, Softmax |

Maxpool: 2 × 2, stride = 2.

MAI-Net configurations.

The architecture of MAI-Net19.
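Reading Table 1 as data makes the naming convention explicit: the number in each model name matches the number of CL layers. The sketch below encodes the layout with hypothetical helper names. It records only input/output channels and repeat counts, leaves out the Maxpool layers, and does not model how channels change inside a "×3" group, which the table does not specify.

```python
# (in_channels, out_channels, repeats, ends_with_maie) for each group of CL layers.
STEM = [(3, 8, 1, False), (8, 16, 1, False)]
MAIE_STAGES = [(16, 32, 3, True), (32, 64, 3, True), (64, 128, 3, True)]


def mai_net_layout(extra_stages):
    """Return the CL groups of one MAI-Net variant read from Table 1.

    extra_stages: 0 for MAI-Net16, 1 for MAI-Net19, 2 for MAI-Net22; each extra
    stage is another "CL x3, MAIE, Maxpool" group that doubles the channels.
    """
    layout = STEM + MAIE_STAGES  # new list, so the constants are not mutated
    c = 128
    for _ in range(extra_stages):
        layout.append((c, 2 * c, 3, True))
        c *= 2
    # Tail before the classifier: CL (c, 2c) x3, CL (2c, c), CL (c, 2c).
    layout += [(c, 2 * c, 3, False), (2 * c, c, 1, False), (c, 2 * c, 1, False)]
    return layout


def count_cl(layout):
    """Total number of CL layers, expanding each "x3" group."""
    return sum(repeats for _, _, repeats, _ in layout)


def count_maie(layout):
    """Number of MAIE modules in the layout."""
    return sum(1 for _, _, _, has_maie in layout if has_maie)


for name, extra in [("MAI-Net16", 0), ("MAI-Net19", 1), ("MAI-Net22", 2)]:
    layout = mai_net_layout(extra)
    print(name, count_cl(layout), "CL layers,", count_maie(layout), "MAIE modules,",
          layout[-1][1], "output channels")
```

Run as written, this reports 16, 19 and 22 CL layers with 3, 4 and 5 MAIE modules, and final widths of 256, 512 and 1024 channels, matching the last CL row of each column in Table 1.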

Traditional neural networks such as AlexNet and VGG use three fully connected layers as the classifier, which contain a large number of parameters and require a lot of memory. To simplify the network structure and reduce the number of parameters, we use a single fully connected (FC) layer instead of three as our classifier.

Because the convolutional layers output a very large number of features, even a single fully connected classifier would still have too many parameters. Therefore, a GAP layer [37] first reduces each feature map to 1 × 1, and an FC layer then performs the classification. GAP enforces correspondence between feature maps and categories, performs feature weighting on the original channels and extracts the weight relationships between channels [38], which is more natural for convolutional structures. Reducing the feature maps to 1 × 1 with GAP before the FC classifier greatly reduces the number of parameters.
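The parameter savings from GAP can be estimated with simple arithmetic, because a fully connected layer with n_in inputs and n_out outputs has n_in · n_out weights plus n_out biases. The sketch below compares the three classifier styles for a hypothetical 256 × 7 × 7 final feature map and three classes. The actual final feature-map size of MAI-Net is not given here, and the 4096-unit hidden layers simply mirror a VGG-style head.

```python
def fc_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def flatten_fc_params(channels, height, width, n_classes):
    """Single FC layer on the flattened C x H x W feature map."""
    return fc_params(channels * height * width, n_classes)


def gap_fc_params(channels, n_classes):
    """GAP (parameter-free) shrinks each map to 1 x 1, so the FC layer sees only C inputs."""
    return fc_params(channels, n_classes)


def three_fc_params(n_in, hidden, n_classes):
    """AlexNet/VGG-style head: two hidden FC layers plus the output layer."""
    return fc_params(n_in, hidden) + fc_params(hidden, hidden) + fc_params(hidden, n_classes)


# Hypothetical final feature map: 256 channels of 7 x 7; 3 classes.
C, H, W, K = 256, 7, 7, 3
print("three FC layers:", three_fc_params(C * H * W, 4096, K))
print("single FC layer:", flatten_fc_params(C, H, W, K))
print("GAP + FC layer: ", gap_fc_params(C, K))
```

With these assumed sizes the three heads need 68,177,923, 37,635 and 771 parameters, respectively: the single FC layer removes the large hidden layers, and GAP further removes the H × W factor from the remaining layer.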

3.3. Network Complexity

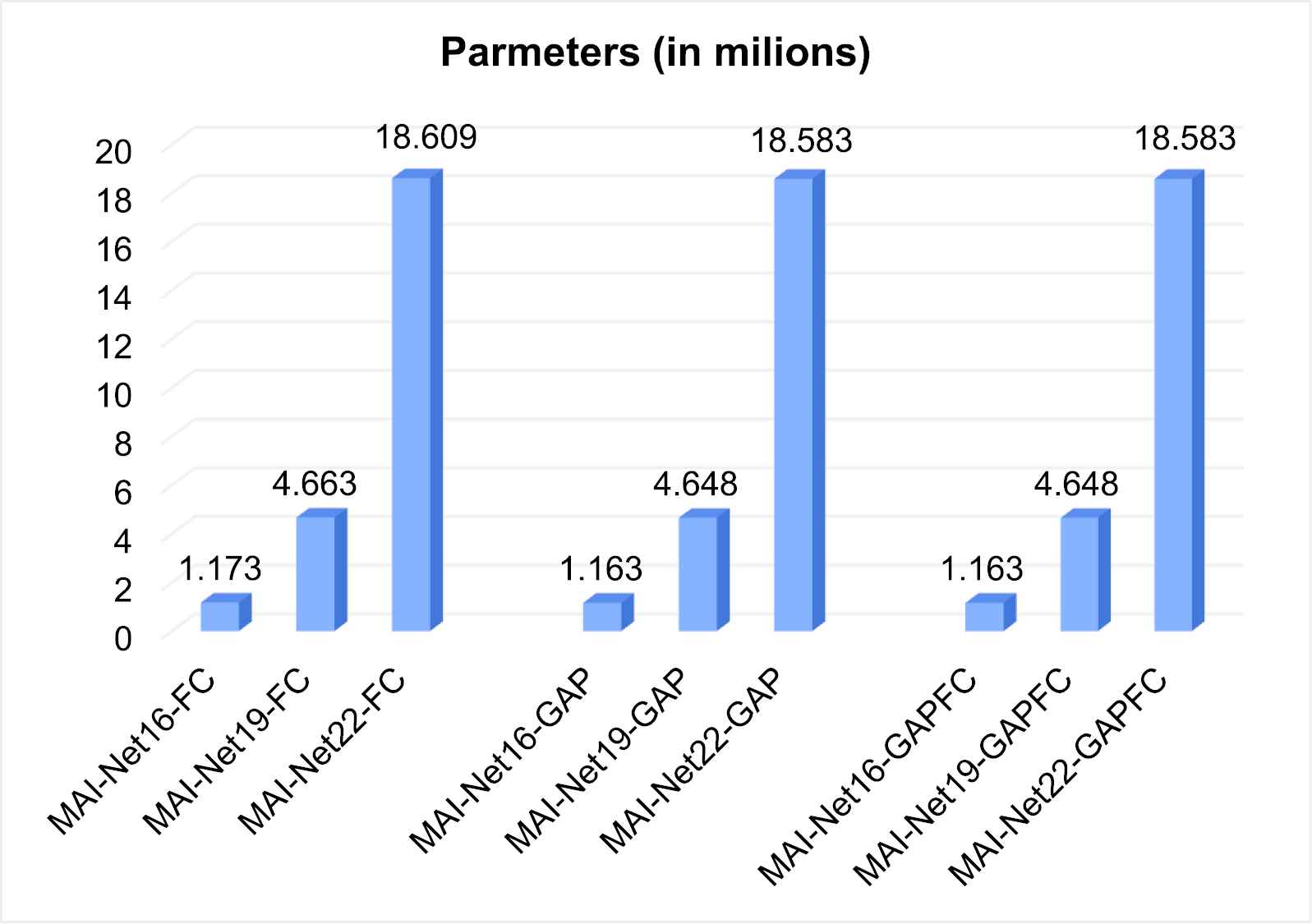

To study how depth and classifier type influence the amount of computation and the number of parameters, we compare models with different classifiers and different depths.

Taking the 3-class classification task as an example, the size of the output feature map of the last layer is

The parameters for different networks are shown in Figure 5.

Number of parameters of MAI-Nets.

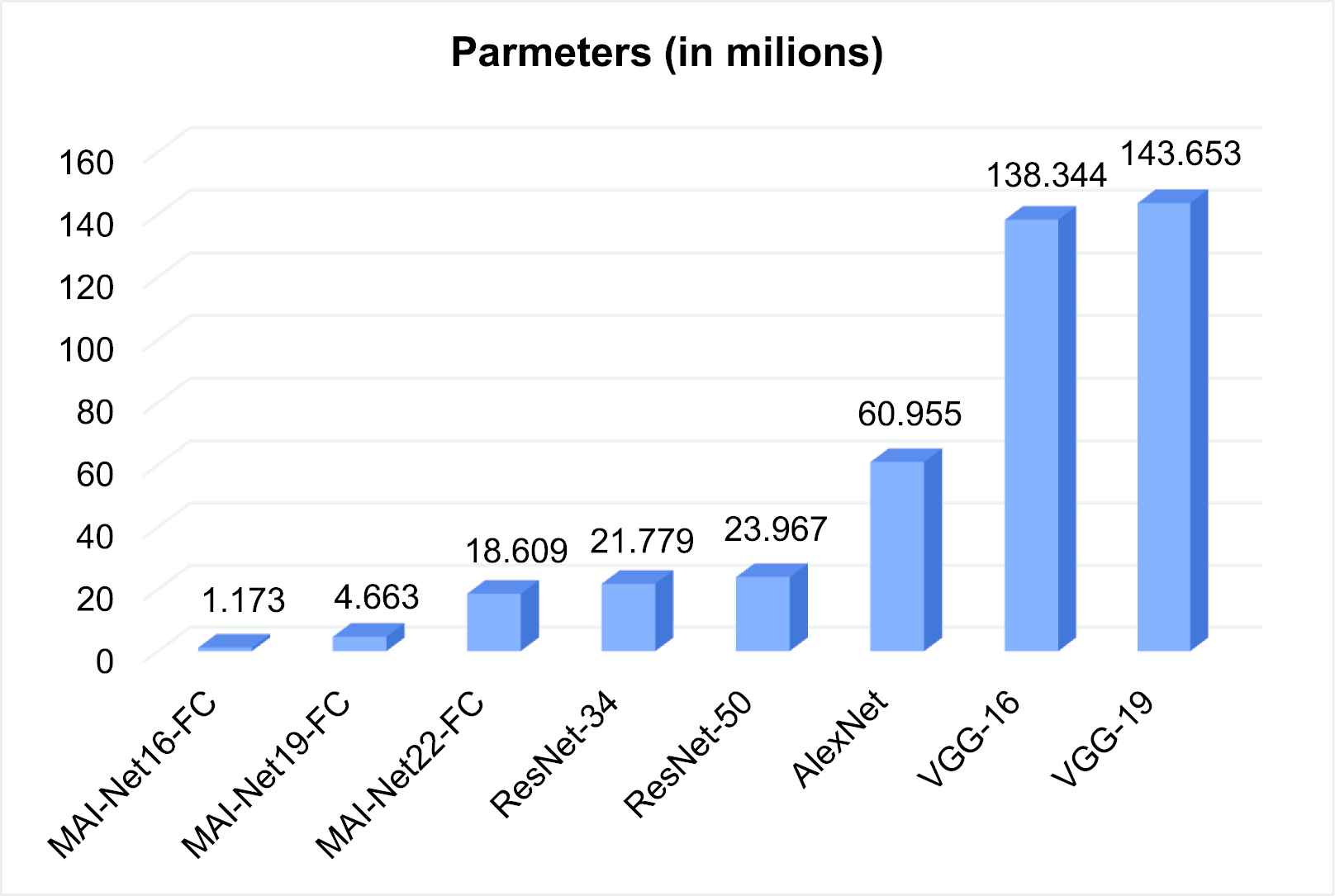

Figure 5 shows that, at the same depth, the number of parameters changes only slightly with the classifier. In contrast, MAI-Net22-FC has 15.86 times as many parameters as MAI-Net16-FC, and MAI-Net19-FC has 3.99 times as many. Network depth therefore has the most significant influence on the number of model parameters. Figure 6 compares the number of parameters of MAI-Net-FC with those of some classical networks.

Number of parameters of classic networks.

Figure 6 shows that MAI-Net-FC has fewer parameters than the classical networks. ResNet-34 has the fewest parameters among these classical networks, yet it still has 18.56 times as many as MAI-Net16-FC and 1.17 times as many as MAI-Net22-FC. VGG-19, which has the most parameters, has 122.47 times as many as MAI-Net16-FC and 7.72 times as many as MAI-Net22-FC. Therefore, when medical resources are limited, device memory is insufficient, or hardware cannot support a large number of parameters, MAI-Net16 is a better choice than the classical networks.
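The ratios reported for Figure 6 are internally consistent with the 15.86× gap between MAI-Net22-FC and MAI-Net16-FC stated for Figure 5, because dividing a baseline's ratio to MAI-Net16-FC by its ratio to MAI-Net22-FC cancels the baseline. The short check below performs that arithmetic using only numbers from the text.

```python
# Ratios stated for Figures 5 and 6 (parameter counts relative to MAI-Nets).
resnet34_over_16, resnet34_over_22 = 18.56, 1.17
vgg19_over_16, vgg19_over_22 = 122.47, 7.72
reported_22_over_16 = 15.86

# For any baseline X: (X / MAI-Net16) / (X / MAI-Net22) = MAI-Net22 / MAI-Net16.
implied_by_resnet = resnet34_over_16 / resnet34_over_22
implied_by_vgg = vgg19_over_16 / vgg19_over_22

print(f"via ResNet-34: {implied_by_resnet:.2f}")  # rounded inputs limit precision
print(f"via VGG-19:    {implied_by_vgg:.2f}")
print(f"reported:      {reported_22_over_16:.2f}")
```

Both routes give approximately 15.86; the small residual comes from the two-decimal rounding of the published ratios.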

4. EXPERIMENTS AND RESULTS ANALYSIS

4.1. Dataset

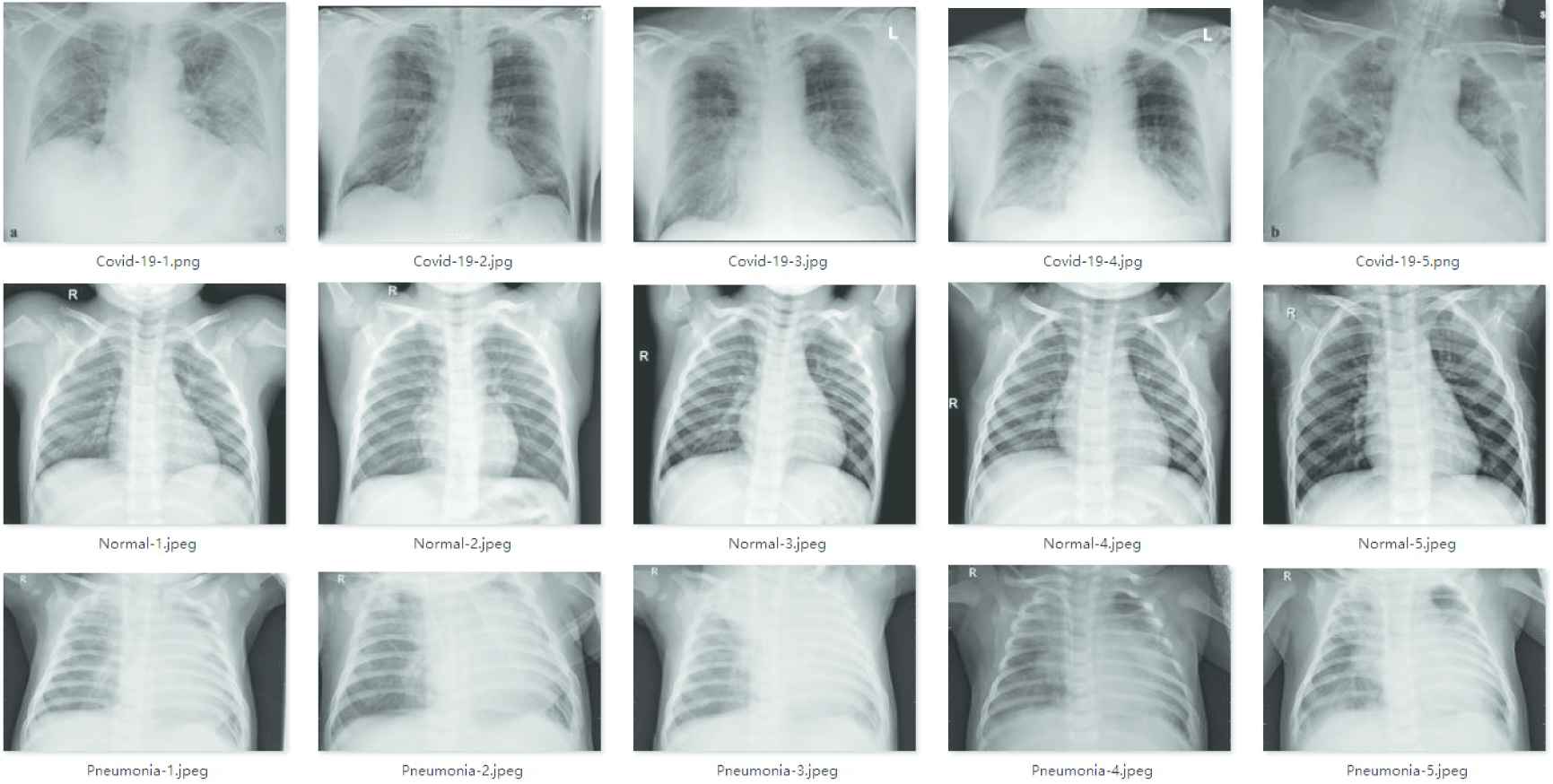

In the experiments, X-ray images from two open-source datasets were used for the diagnosis of COVID-19. The first is the COVID-19 chest X-ray dataset on GitHub (https://github.com/ieee8023/covid-chestxray-dataset), which was collected from public sources and indirectly from hospitals and physicians. It contains X-ray and CT images of patients infected with COVID-19 and other types of pneumonia, 760 images in total, from which we selected 412 X-ray images of COVID-19 positive patients. The second dataset is Kaggle's chest X-ray images (https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia), which are divided into normal and pneumonia images, 5863 in total. From it, we selected 4265 pneumonia X-ray images and 1575 normal X-ray images. The training set contains 5526 X-ray images: 310 COVID-19, 1341 normal and 3875 pneumonia images. The test set contains 726 X-ray images: 102 COVID-19, 234 normal and 390 pneumonia images. Examples of chest X-ray images from each class are shown in Figure 7.
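The class counts above can be cross-checked with a few lines of arithmetic: the split totals, the per-class totals against the numbers selected from each source dataset, and the share of COVID-19 images in each split. The sketch uses only counts stated in this section; the dictionary layout is our own.

```python
train = {"COVID-19": 310, "Normal": 1341, "Pneumonia": 3875}
test = {"COVID-19": 102, "Normal": 234, "Pneumonia": 390}
selected = {"COVID-19": 412, "Normal": 1575, "Pneumonia": 4265}  # images taken from the sources

n_train, n_test = sum(train.values()), sum(test.values())
print("train:", n_train, "test:", n_test)

for cls in selected:
    # Each selected image should land in exactly one of the two splits.
    print(cls, train[cls] + test[cls], "of", selected[cls])

print(f"COVID-19 share: train {train['COVID-19'] / n_train:.1%}, "
      f"test {test['COVID-19'] / n_test:.1%}")
```

The splits add up to the stated totals, and COVID-19 images make up roughly 5.6% of the training set and 14.0% of the test set.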

Chest X-ray images.

Figure 7 shows that chest X-ray images have high inter-class similarity and low intra-class variance, which makes them harder for the network to classify.

4.2. Preprocessing and Experiment Setup

All experiments were carried out on the same platform and environment to ensure fair comparisons between network models. Table 2 shows the software and hardware configuration of the experimental platform. The batch size is 32 for both training and testing.

| Attributes | Configuration Information |
|---|---|
| Operating system | Ubuntu 14.04.5 LTS |
| CPU | Intel(R) Xeon(R) CPU E5-2670 v3 @ 2.30 GHz |
| GPU | GeForce GTX TITAN X |
| CUDNN | CUDNN 6.0.21 |
| CUDA | CUDA 8.0.61 |
| Framework | Fastai |
| IDE | PyCharm |
| Language | Python |

Experimental platform configuration.

During training, we adjust the learning rate gradually: it first increases from a very small value and is then decayed to an appropriate final value. After repeated experiments, the parameters were set as follows: the initial learning rate is 0.0003 and the number of training epochs is 20. During epochs 0–10, the learning rate gradually increases from 0.0003 to 0.001; during epochs 11–20, it gradually decreases from 0.001 to 0.00036.
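The warm-up and decay schedule above can be sketched as a function of the epoch. The text gives only the endpoint values (0.0003 → 0.001 over epochs 0–10, then 0.001 → 0.00036 over epochs 11–20), so the cosine-shaped interpolation between them is an assumption. It resembles, but is not claimed to be identical to, the one-cycle policy that fastai exposes through `fit_one_cycle`.

```python
import math


def cosine_interp(start, end, t):
    """Go from start (t = 0) to end (t = 1) along half a cosine wave."""
    return end + (start - end) * (1.0 + math.cos(math.pi * t)) / 2.0


def lr_at(epoch, lr_start=3e-4, lr_peak=1e-3, lr_end=3.6e-4, warmup=10, total=20):
    """Learning rate at a (possibly fractional) epoch: warm up, then decay."""
    if epoch <= warmup:
        return cosine_interp(lr_start, lr_peak, epoch / warmup)
    return cosine_interp(lr_peak, lr_end, (epoch - warmup) / (total - warmup))


for e in (0, 5, 10, 15, 20):
    print(e, f"{lr_at(e):.6f}")
```

Treating the epoch as a continuous value lets the same function be evaluated per batch, which is how such schedules are usually applied in practice.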

4.3. Evaluation Criteria

Following the evaluation criteria adopted by most medical image classification models, we use accuracy, precision, recall, specificity and F1-score as performance indicators. Accuracy is the proportion of correctly classified samples among all samples. Precision is the number of true positives divided by the sum of true positives and false positives; low precision indicates a large number of false positives. Recall (sensitivity) is the classifier's ability to correctly identify all people with the disease (true positive rate). Specificity is the classifier's ability to correctly identify people without the disease (true negative rate). The F1-score is the harmonic mean of precision and recall.
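These definitions can be computed one class at a time from a multi-class confusion matrix by treating that class as positive and the other classes as negative (one-vs-rest). The sketch below does this in plain Python. The 3 × 3 matrix in the usage example is a made-up toy, not the matrix in Figure 8, and guards against division by zero are omitted.

```python
def per_class_metrics(cm, k):
    """One-vs-rest metrics for class k; cm[i][j] counts true class i predicted as j."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                 # class-k images predicted as another class
    fp = sum(row[k] for row in cm) - tp  # other images predicted as class k
    tn = total - tp - fn - fp
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)              # sensitivity / true positive rate
    specificity = tn / (tn + fp)         # true negative rate
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return {"precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1}


def accuracy(cm):
    """Correct predictions (the diagonal) over all predictions."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(sum(row) for row in cm)


# Toy 3-class matrix (rows: true class, columns: predicted class).
cm = [[8, 1, 1],
      [0, 9, 1],
      [1, 0, 9]]
print(f"accuracy = {accuracy(cm):.4f}")
for k, name in enumerate(["class A", "class B", "class C"]):
    print(name, {m: round(v, 4) for m, v in per_class_metrics(cm, k).items()})
```

For class A in this toy matrix, 8 of 10 true samples are found (recall 0.8), 8 of 9 predictions are correct (precision ≈ 0.889), and 19 of the 20 other samples are correctly rejected (specificity 0.95).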

4.4. Experimental Results

In the three-class experiments, we classified the X-ray images into COVID-19, normal and common pneumonia images. To make better use of the dataset and reduce the risk of overfitting to a single split, 10-fold cross-validation is used to evaluate the proposed network models: in each fold, 90% of the data are used for training and the remaining 10% for validation.
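A plain (non-stratified) 10-fold split can be sketched as follows: shuffle the indices once, cut them into ten nearly equal folds, and let each fold serve once as the 10% validation part while the other nine form the 90% training part. The text does not state whether the authors shuffled or stratified by class, so the fixed seed, the absence of stratification and the use of the 5526 training images as the pool are assumptions.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    # Spread the remainder so fold sizes differ by at most one.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, val
        start += size


folds = list(k_fold_indices(5526, k=10))
print([len(val) for _, val in folds])  # validation size per fold (about 10% each)
```

With class imbalance as strong as in this dataset, a stratified split that keeps the COVID-19 proportion equal across folds would be a natural refinement.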

To show the effects of network depth and classifier, nine MAI-Net variants (three depths × three classifiers) were evaluated on the datasets. The results are shown in Table 3, where the best results are in bold.

| Model | Classifier | Accuracy | Precision | Recall | Specificity | F1-Score |
|---|---|---|---|---|---|---|
| MAI-Net16 | FC | 95.04 | 96.91 | 94.38 | 97.19 | 95.62 |
| MAI-Net16 | GAP | 94.21 | 96.26 | 93.69 | 96.84 | 94.96 |
| MAI-Net16 | GAPFC | 95.87 | 97.28 | 95.53 | 97.76 | 96.39 |
| MAI-Net19 | FC | 96.01 | 97.43 | 95.61 | 97.81 | 96.52 |
| MAI-Net19 | GAP | 95.59 | 97.12 | 95.24 | 97.62 | 96.17 |
| MAI-Net19 | GAPFC | **96.42** | **97.83** | **96.17** | **98.08** | **96.99** |
| MAI-Net22 | FC | 95.04 | 96.90 | 94.62 | 97.31 | 95.74 |
| MAI-Net22 | GAP | 94.90 | 96.65 | 94.40 | 97.20 | 95.51 |
| MAI-Net22 | GAPFC | 95.32 | 96.96 | 94.96 | 97.48 | 95.96 |

Performance of different depth MAI-Nets (%).

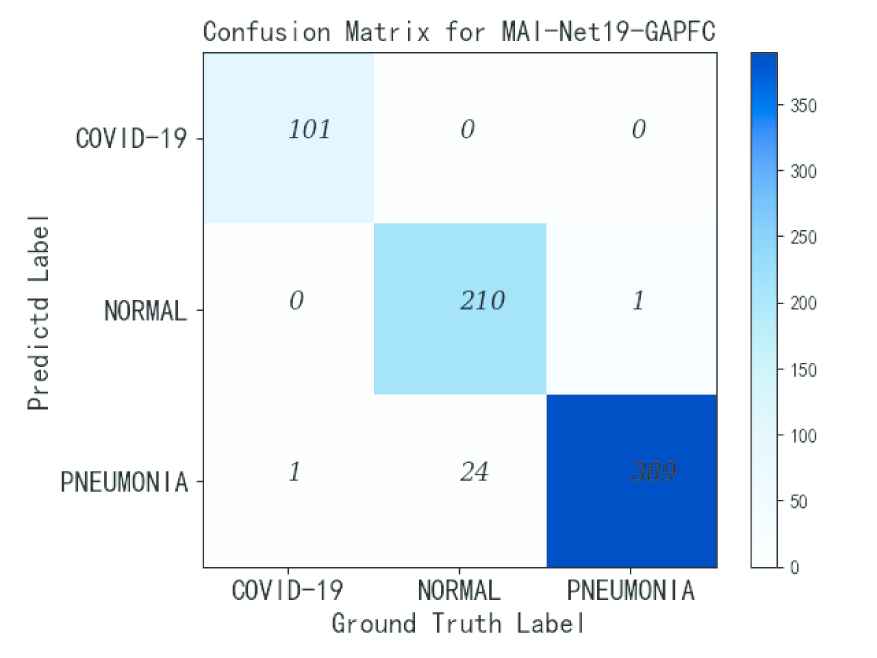

Table 3 shows that MAI-Net-GAP performs worse than the networks using the other two classifiers. For example, the overall accuracy of MAI-Net16-GAP is 0.83 percentage points lower than that of MAI-Net16-FC and 1.66 percentage points lower than that of MAI-Net16-GAPFC. MAI-Net19-GAPFC achieves the highest accuracy, precision, recall, specificity and F1-score of all nine variants and therefore has the best overall performance. Its confusion matrix is shown in Figure 8, which indicates that MAI-Net recognizes COVID-19 well. The detailed results of MAI-Net19-GAPFC are given in Table 4.

Confusion matrix of MAI-Net19-GAPFC.
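The comparisons drawn from Table 3 (which variant leads on each metric, and the MAI-Net16 accuracy gaps of 0.83 and 1.66 points between GAP and the other two classifiers) can be reproduced directly from the table values. The dictionary below transcribes Table 3; the helper names are hypothetical.

```python
METRICS = ("accuracy", "precision", "recall", "specificity", "f1")
TABLE3 = {  # (model, classifier) -> Table 3 values in %
    ("MAI-Net16", "FC"): (95.04, 96.91, 94.38, 97.19, 95.62),
    ("MAI-Net16", "GAP"): (94.21, 96.26, 93.69, 96.84, 94.96),
    ("MAI-Net16", "GAPFC"): (95.87, 97.28, 95.53, 97.76, 96.39),
    ("MAI-Net19", "FC"): (96.01, 97.43, 95.61, 97.81, 96.52),
    ("MAI-Net19", "GAP"): (95.59, 97.12, 95.24, 97.62, 96.17),
    ("MAI-Net19", "GAPFC"): (96.42, 97.83, 96.17, 98.08, 96.99),
    ("MAI-Net22", "FC"): (95.04, 96.90, 94.62, 97.31, 95.74),
    ("MAI-Net22", "GAP"): (94.90, 96.65, 94.40, 97.20, 95.51),
    ("MAI-Net22", "GAPFC"): (95.32, 96.96, 94.96, 97.48, 95.96),
}


def best_variant(metric):
    """Variant with the highest value for one metric."""
    i = METRICS.index(metric)
    return max(TABLE3, key=lambda variant: TABLE3[variant][i])


def gap_pp(a, b, metric="accuracy"):
    """a minus b in percentage points, rounded to the table's two decimals."""
    i = METRICS.index(metric)
    return round(TABLE3[a][i] - TABLE3[b][i], 2)


for m in METRICS:
    print(m, best_variant(m))
print(gap_pp(("MAI-Net16", "FC"), ("MAI-Net16", "GAP")))     # FC vs GAP
print(gap_pp(("MAI-Net16", "GAPFC"), ("MAI-Net16", "GAP")))  # GAPFC vs GAP
```

The differences are reported in percentage points, because subtracting two percentages gives an absolute gap, not a relative change.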

| Class | Precision | Sensitivity | Specificity |
|---|---|---|---|
| COVID-19 | 100.00 | 99.02 | 100.00 |
| Pneumonia | 93.96 | 99.74 | 94.38 |
| Normal | 99.53 | 89.74 | 99.87 |
| Average | 97.83 | 96.16 | 98.08 |

Precision, sensitivity, specificity of MAI-Net19-GAPFC (%).

Table 4 shows that MAI-Net classifies both COVID-19 positive patients and common pneumonia patients well. In particular, for the COVID-19 class, precision and specificity are both 100%, and sensitivity is 99.02%.

The results of MAI-Net19-GAPFC are also compared with those of classical convolutional neural networks (VGG-19, GoogLeNet, ResNet-50 and DenseNet-121). The experimental results are shown in Table 5.

| Model | Accuracy | Precision | Sensitivity | Specificity | F1-Score | COVID-19 Acc |
|---|---|---|---|---|---|---|
| ResNet-50 [33] | 93.53 | 96.01 | 93.15 | 96.53 | 94.56 | 98.40 |
| DenseNet-121 [34] | 93.11 | 95.98 | 92.75 | 96.38 | 94.34 | 99.02 |
| GoogLeNet [32] | 92.56 | 95.29 | 91.56 | 95.78 | 93.37 | 95.10 |
| VGG-19 [31] | 93.11 | 96.09 | 92.93 | 96.47 | 94.49 | 100.00 |
| MAI-Net19-GAPFC | 96.42 | 97.83 | 96.16 | 98.08 | 96.99 | 99.02 |

Experimental results of other CNNs (%).

ResNet-50 uses skip connections to alleviate the exploding and vanishing gradients that arise as network depth increases. However, ResNet-50 scores lower than MAI-Net19-GAPFC on every performance indicator. DenseNet-121 further deepens the network with densely connected dense blocks. Its COVID-19 classification accuracy equals that of MAI-Net19-GAPFC (99.02%), but its other performance indicators are lower. GoogLeNet performs worst on the datasets, with the lowest value on every indicator. VGG-19 achieves 100% COVID-19 accuracy, but it uses three fully connected layers as its classifier, resulting in an enormous number of parameters, heavy computation, high hardware requirements and long computation time. Overall, MAI-Net19-GAPFC compares favorably with these classical convolutional neural networks. We further compare MAI-Net19-GAPFC with other COVID-19 detection methods; the results are shown in Table 6.

| Name | Class | Method | Accuracy | COVID-19 Acc |
|---|---|---|---|---|
| Apostolopoulos et al. [39] | 3 | MobileNet v2 | 94.72 | 98.66 |
| Khan et al. [27] | 3 | CoroNet | 95.02 | 96.67 |
| Civit-Masot et al. [40] | 3 | VGG16 | 84.85 | 100.00 |
| Hengyuan Kang et al. [41] | 3 | CPM-Nets | 95.10 | 95.50 |
| Tanvir Mahmud et al. [42] | 4 | CovX-Net | 90.30 | 85.00 |
| Proposed Method | 3 | MAI-Net19-GAPFC | 96.42 | 99.02 |

Comparison with other methods for COVID-19 patient detection.

Although the network proposed by Civit-Masot et al. reaches 100% accuracy for COVID-19, its overall accuracy (84.85%) is the lowest of all the compared models. The overall accuracy of the CPM-Nets-based network proposed by Hengyuan Kang et al. is only 1.32 percentage points lower than that of our model, but its COVID-19 accuracy is 3.52 percentage points lower. As Table 6 shows, MAI-Net reaches high levels in both overall chest X-ray classification accuracy and COVID-19 category accuracy. This indicates that our network performs better and is better targeted to chest X-ray image classification tasks.

4.5. Experiments Analysis

According to the experimental results, MAI-Net19-GAPFC achieves the highest overall accuracy (96.42%). The datasets used in the experiments consist of chest X-ray images of COVID-19 patients, common pneumonia patients and normal people. Because there are only three categories, the network classifier needs fewer parameters and less computation. To verify how well MAI-Net recognizes COVID-19 patient images when such images are scarce, we kept the number of COVID-19 images below 10% of the training set and below 15% of the test set. Under these conditions, the COVID-19 sensitivity and COVID-19 category accuracy of MAI-Net19-GAPFC reach 99.02% and 100%, respectively. Because MAI-Net is designed around the characteristics of COVID-19 X-ray images, it generalizes well to COVID-19 X-ray images even with small samples. However, its performance on datasets from other fields needs to be verified by further experiments.

In addition, we proposed three models of different depths. On our datasets, the deeper networks do not recognize images significantly better than the shallower ones, and they are more prone to overfitting. Therefore, for the experimental datasets, the shallower networks we designed not only reduce network complexity but also achieve better detection results.

The experimental data suggest that a network should be neither too deep nor too shallow. Complex, deep networks such as ResNet have relatively large numbers of parameters and heavy computation, and making a network too deep can also cause vanishing or exploding gradients. Lightweight networks such as MobileNet often have low recognition rates because of insufficient depth and fail to reach a satisfactory level.

We should also pay close attention to the generalization ability of the model. Because chest X-ray images have high inter-class similarity and low intra-class variance, they can lead to model bias and overfitting. The MAIE module was therefore designed to extract channel feature weights, which improves the feature extraction ability of the network and enhances channel attention. Built on the MAIE module, MAI-Net has a simpler network structure and fewer parameters, with higher classification accuracy and stronger generalization when classifying chest X-ray images of COVID-19 patients.

5. CONCLUSIONS

In this paper, we designed the MAIE module according to the characteristics of COVID-19 X-ray images. Based on the MAIE module, we proposed a convolutional neural network, MAI-Net, that classifies chest X-ray images to detect COVID-19 cases. Comparative analysis of the experimental results shows that MAI-Net19-GAPFC performs best: its overall accuracy and COVID-19 category accuracy are 96.42% and 100%, respectively, and its COVID-19 sensitivity is 99.02%. Compared with existing work, MAI-Net has a simpler network structure and lower hardware requirements, so it is better suited to ordinary equipment.

In addition, cases of COVID-19 variants have appeared in some areas, which may lead to new outbreaks. Although medical image datasets of variant COVID-19 cases are not yet available, there are no medical reports of significant changes in the images of variant cases, so MAI-Net should remain practical. In view of possible outbreaks of variant COVID-19, further testing will be needed once relevant datasets are obtained. Our next research focus is to obtain more images of patients with variant COVID-19 and use them to further train MAI-Net to improve its versatility and accuracy.

CONFLICTS OF INTEREST

The authors declare no conflict of interest.

AUTHORS' CONTRIBUTIONS

W.W. designed the study, wrote the paper, managed the project and was responsible for the final submission and revisions of the manuscript. X.H. wrote the Python scripts, trained the CNN models and performed the data analysis. J.L. configured the experimental environment, arranged the experimental data and organized the datasets. P.Z. implemented the feature visualization. X.W. revised the paper. All authors read and approved the final manuscript.

Funding Statement

This research was funded by The National Defense Science and Technology Innovation Special Zone project (2019XXX00701), The Natural Science Foundation of Hunan Province, China (2019JJ80105), The Changsha Science and Technology Project (kq2004071), The Hunan Graduate Student Innovation Project (CX20200882), The Shenzhen Science and Technology Project (KQTD20190929172704911).

ACKNOWLEDGMENTS

We are grateful to our colleagues for their suggestions.

REFERENCES

Cite this article

Wei Wang, Xiao Huang, Ji Li, Peng Zhang, Xin Wang. Detecting COVID-19 Patients in X-Ray Images Based on MAI-Nets. International Journal of Computational Intelligence Systems, 14(1) (2021), 1607–1616. ISSN 1875-6883. Published 2021/05/28. https://doi.org/10.2991/ijcis.d.210518.001