Availability of results from clinical research: Failing policy efforts

- DOI

- 10.1016/j.jegh.2013.08.002

- Keywords

- Evidence based medicine; Clinical research Standards; Publication bias; Clinical epidemiology

- Abstract

Objectives: Trial registration has a great potential to increase research transparency and public access to research results. This study examined the availability of results either in journal publications or in the trial registry from all studies registered at ClinicalTrials.gov.

Methods: All 137,612 records from ClinicalTrials.gov in December 2012 were merged with all 19,158 PubMed records containing registration numbers in the indexing field or in the abstracts. A multivariate analysis was conducted to examine the association between the availability of the results with study and participant characteristics available in registration records.

Results: Fewer than 10% of the registered studies and 15% of the registered and completed studies had published results. The highest publication rate of 22.4% was for randomized trials completed between 2005 (starting year for structured indexing in PubMed of study registration) and 2010. For 86% of overall and 78% of completed registered studies, no results were available in ClinicalTrials.gov or in journal publications. Studies funded by industry vs. other funding sources and drug studies vs. all studies of other interventions were published less often after adjustment for study type, subject characteristics, or posting of results in ClinicalTrials.gov.

Conclusion: Existing policy does not ensure availability of results from clinical research. International policy revisions should charge principal investigators with ensuring that the approved protocols and posted data elements are aligned and that results are available from all conducted studies.

- Copyright

- © 2013 Ministry of Health, Saudi Arabia. Published by Elsevier Ltd.

- Open Access

- This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/).

1. Introduction

Clinical research aims to inform clinical and policy decision-making by providing valid evidence for treatment benefits and harms [1–4]. However, when conclusions about treatment benefits and harms are based on incomplete evidence, biased decisions and ineffective health care can result [5]. Bias in the publication of studies that show impressive results can exaggerate the benefits of examined treatments [6–10].

Several policy initiatives have tried to improve transparency and ensure wider availability of results from clinical research. The Food and Drug Administration Modernization Act of 1997 required that the NIH create a trial registry – ClinicalTrials.gov – for drug efficacy studies approved with Investigational New Drug applications. In 2000, the National Library of Medicine at the National Institutes of Health launched ClinicalTrials.gov and opened it to the public via the Internet, and Congress mandated the registration of all clinical trials of pharmacological treatments for serious or life-threatening diseases at the ClinicalTrials.gov online database [11–14]. Then in 2005, the International Committee of Medical Journal Editors (ICMJE) and the World Association of Medical Editors made registration a condition of publication for all clinical studies [15]. Finally, the International Clinical Trials Registry Platform (ICTRP) was developed to include 13 primary registries which met the requirements of the ICMJE in providing 20 items with “the minimum amount of trial information that must appear in a register in order for a given trial to be considered fully registered” (see Supplementary Appendix 1, also available at http://www.who.int/ictrp/network/trds/en/index.html).

To ensure public access to the results from clinical studies, the Food and Drug Administration Amendments Act (FDAAA) of 2007 mandated posting of the results from applicable clinical trials (e.g., interventional, non-phase I trials of drugs and devices subject to FDA regulation) on ClinicalTrials.gov within 1 or 2 years of study completion [16].

Nonetheless, publication of the results in journal articles remains voluntary. Fewer than half of the NIH-funded registered trials are published in a peer-reviewed journal within 30 months of trial completion [17]. Only 29% of completed registered studies involving children and 53% of NIH-funded trials have been published [18].

Previous research used time-consuming manual searches for publications in various subsets of registered studies (i.e., by source of funding [17], study participants [18], and specific health conditions) [19]. In contrast, this paper examines result availability from all studies registered with ClinicalTrials.gov to answer the question: Do existing policies in research registration, publication, and indexing guarantee access to the results? This study defines “availability of the results” as publication in journals indexed in Medline or posting of the results in ClinicalTrials.gov.

2. Methods

For this study, ClinicalTrials.gov registration records were linked with Medline publication records by a unique registered study identifier (number of clinical trial or NCT). First, all records of the registered studies with no time restriction were downloaded from ClinicalTrials.gov from February 2000 to December 2012 (Appendix 2). All 20 fields required by the ICMJE were downloaded. The frequency of study types, design, funding, participant characteristics, and posting of the results were analyzed relying on information provided by the investigators in registration records [20]. Accuracy of the data in ClinicalTrials.gov can be confirmed only by comparing the posted data elements with those approved by the institutional review boards, which was beyond the scope of this study. For validation purposes, ambiguous data (e.g., enrollment values of more than 99,999 participants or negative publication time intervals when publications occurred before studies started subject recruitment) were excluded from the validated analyses.

In contrast with the previous research focusing on clinical trials only [21], all registered studies were analyzed irrespective of study design, funding, subject characteristics, or market status of the examined treatments, on the assumption that all clinical research evidence is important for decision-making (Appendix 2). Study design was categorized as a randomized trial when the study design field mentioned random allocation of participants into the treatment groups; as a non-randomized study when investigators did not explicitly mention randomization; and as unknown study design when investigators left this field blank. Interventions were categorized as drug, procedure, radiation, biologics, or behavioral according to the categories in ClinicalTrials.gov. Study funding was categorized into two categories: the industry funding category included all studies funded by pharmaceutical or device companies exclusively or in combination with individuals, universities, or community-based organizations; the all other funding sources category included studies funded by the National Institutes of Health or other U.S. Federal agencies exclusively or in combination with industry funding, as well as studies funded by individuals, universities, or community-based organizations without any industry involvement. The length of studies was calculated as the time period between start and completion dates. The publication time was estimated as the time period between study completion and journal publication.
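
The categorization rules above can be illustrated with a minimal sketch. It is not the authors' SAS code: the function names are hypothetical, and the explicit handling of a "Non-Randomized" label (which also contains the substring "random") is an assumption about how the design field may be worded.

```python
from datetime import date

def classify_design(design_field):
    """Map the free-text study design field to the three categories described above."""
    if design_field is None or not design_field.strip():
        return "unknown"  # investigators left the field blank
    text = design_field.lower()
    # Assumption: a "Non-Randomized" label must be ruled out before matching "random".
    if "non-random" in text or "nonrandom" in text:
        return "non-randomized"
    if "random" in text:
        return "randomized"
    return "non-randomized"  # randomization not explicitly mentioned

def years_between(start, end):
    """Length of a period in years, e.g. study start to completion."""
    return (end - start).days / 365.25

print(classify_design("Allocation: Randomized, Masking: Double-Blind"))
print(classify_design("Observational Model: Cohort"))
print(round(years_between(date(2008, 1, 1), date(2010, 1, 1)), 2))
```

The same interval helper would apply to publication time (completion to journal publication); a negative value there corresponds to the ambiguous records the authors excluded.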

Medline publication records have unique study registration numbers in a specifically designated field with secondary study identification ([SI]) (detailed information about indexing of the registered studies is available in PubMed tutorial at http://www.nlm.nih.gov/bsd/disted/pubmedtutorial/020_810.html) [22]. In compliance with ICMJE’s recommendations from 2005, all publications should report a trial registration number in the article abstract. Since the specific field for indexing of registered studies (secondary study identification field) was introduced in 2005, Medline was searched for registration numbers in that designated [SI] field, but also in the article abstracts. A subgroup analysis was then conducted among the studies registered before and after 2005.
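
A search for registration numbers in two text fields could look like the sketch below. It assumes only that ClinicalTrials.gov identifiers consist of "NCT" followed by eight digits; the record contents and the function name are hypothetical, and the authors' actual PubMed search strategy is not reproduced here.

```python
import re

# ClinicalTrials.gov identifiers: "NCT" followed by eight digits.
NCT_PATTERN = re.compile(r"\bNCT\d{8}\b", re.IGNORECASE)

def extract_nct_ids(si_field: str, abstract: str) -> set[str]:
    """Return the normalized NCT numbers found in the [SI] field or the abstract."""
    found = NCT_PATTERN.findall(si_field) + NCT_PATTERN.findall(abstract)
    return {match.upper() for match in found}

# Hypothetical record in which the identifier appears in both fields.
record = {
    "si": "ClinicalTrials.gov/NCT00000102",
    "abstract": "Trial registration: clinicaltrials.gov identifier nct00000102.",
}
print(sorted(extract_nct_ids(record["si"], record["abstract"])))
```

Returning a set means a number reported in both fields is counted once per article.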

All retrieved references were imported into the reference manager EndNote and then exported to an Excel worksheet compatible with the SAS statistical software.

When the article reported the results from more than one registered study, additional reference records were created for each NCT. When a registered study was published in more than one article, the fact of the publication was counted only once using the earliest available publication.
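
The two counting rules above (one record per NCT for multi-study articles, and only the earliest publication per study) can be sketched as follows. The article tuples are invented for illustration, and the function name is hypothetical.

```python
from datetime import date

# Hypothetical publication records: (article ID, publication date, NCT numbers reported).
articles = [
    ("pmid-1", date(2009, 5, 1), ["NCT00000001", "NCT00000002"]),
    ("pmid-2", date(2008, 3, 1), ["NCT00000001"]),
    ("pmid-3", date(2011, 7, 1), ["NCT00000002"]),
]

def earliest_publication_per_study(articles):
    """Expand multi-study articles to one row per NCT, then keep the earliest article."""
    earliest = {}
    for article_id, published, nct_ids in articles:
        for nct in nct_ids:
            if nct not in earliest or published < earliest[nct][1]:
                earliest[nct] = (article_id, published)
    return earliest

linked = earliest_publication_per_study(articles)
for nct, (article_id, published) in sorted(linked.items()):
    print(nct, article_id, published.isoformat())
```

Each study therefore contributes at most one publication to the rate, however many articles report it.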

For validation purposes, in order to determine the accuracy of the Medline indexing of the registered studies (since some publications of the registered trials did not report study identification numbers in abstracts but may have mentioned the registration status in the full texts of the articles), this study examined whether the secondary study identification field identified all publications of the registered studies in the core clinical journal (Abridged Index Medicus list of core clinical journals is available in Appendix 3) that published the greatest number of registered RCTs. To carry out this task, trial registration numbers were searched for in all Medline fields and in the full texts of the articles published in that journal. If full text articles did not include study registration numbers, it was concluded that the published study was not registered [15].

The publication records from PubMed were then merged with the registration records from ClinicalTrials.gov by the unique NCT number, and the availability of the results and the factors associated with publication status were examined. Publication rates were calculated as percentages of the registered studies with linked publication records in PubMed. The percentages of the registered studies with results posted in the trial registry were also calculated. Speculative imputations for missing data were avoided. Multivariate odds ratios of publication were calculated by study type (observational vs. interventional), random allocation of participants, recruitment status as reported in ClinicalTrials.gov, funding, examined interventions, posting of the results in ClinicalTrials.gov, and age and sex of enrolled participants. It was hypothesized that odds of publication differ by study funding, design, and examined interventions. Subgroup analyses of the publication odds were conducted among registered completed or terminated studies to test the hypothesis that completed studies would have greater odds of publication. Publication odds may depend on the year of study registration; for example, recently registered studies would have lower publication odds than studies registered more than 3 years ago. To address this issue, survival analysis was also conducted to examine publication of the completed studies by study design and funding [23]. The detailed analysis of the factors associated with posting the results in ClinicalTrials.gov was beyond the scope of this study [24]. All hypothesis tests were performed at the 95% confidence level using SAS 9.3.
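
As a rough illustration of the linkage and rate calculation, the sketch below joins a toy registry to a set of published NCT numbers and reports the percentage published per group. The records, field names, and function name are hypothetical; the authors performed this step in SAS.

```python
from collections import defaultdict

# Hypothetical registry records keyed by NCT number.
registry = {
    "NCT00000001": {"design": "randomized", "status": "Completed"},
    "NCT00000002": {"design": "randomized", "status": "Recruiting"},
    "NCT00000003": {"design": "non-randomized", "status": "Completed"},
    "NCT00000004": {"design": "non-randomized", "status": "Completed"},
}
# NCT numbers that could be linked to a PubMed record.
published_ncts = {"NCT00000001", "NCT00000004"}

def publication_rates(registry, published_ncts, field):
    """Percentage of registered studies with a linked publication, per value of `field`."""
    counts = defaultdict(lambda: [0, 0])  # group -> [published, total]
    for nct, rec in registry.items():
        counts[rec[field]][1] += 1
        if nct in published_ncts:
            counts[rec[field]][0] += 1
    return {group: 100.0 * pub / total for group, (pub, total) in counts.items()}

for group, rate in sorted(publication_rates(registry, published_ncts, "status").items()):
    print(f"{group}: {rate:.1f}% published")
```

A study with no linked record counts as unpublished, which matches the authors' definition of publication as a successful link between the registry and Medline.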

3. Results

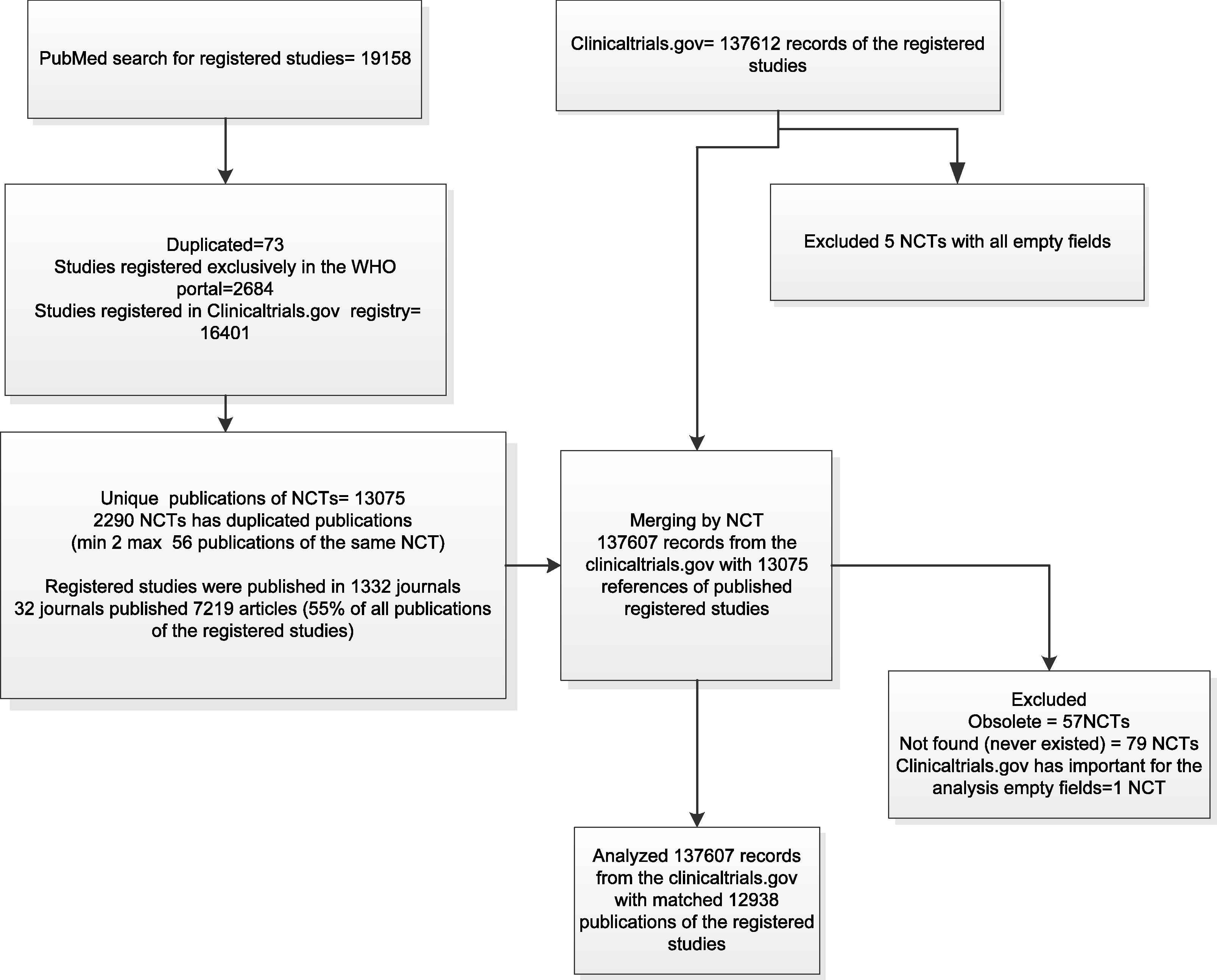

A total of 137,612 registered studies were identified in ClinicalTrials.gov and 19,158 publications of registered studies in PubMed in December 2012 (Fig. 1). After deleting duplicates and merging the data from the trial registry with publication records from PubMed, 137,607 records of the registered studies and 12,938 publications of the registered studies were included in the analyses (analytical data file is available at https://netfiles.umn.edu/xythoswfs/webui/_xy-23709234_1-t_xCJavcvC).

Fig. 1. Study flow.

The studies were published in 1332 journals; 55% of the studies were published in 32 journals. Each of these 32 journals published more than 100 registered studies. The completed “Secondary Source ID” field identified 97.3% of the publications of the registered studies and 2.7% of the articles had registration numbers in the full texts of the articles but not in the “Secondary Source ID” field.

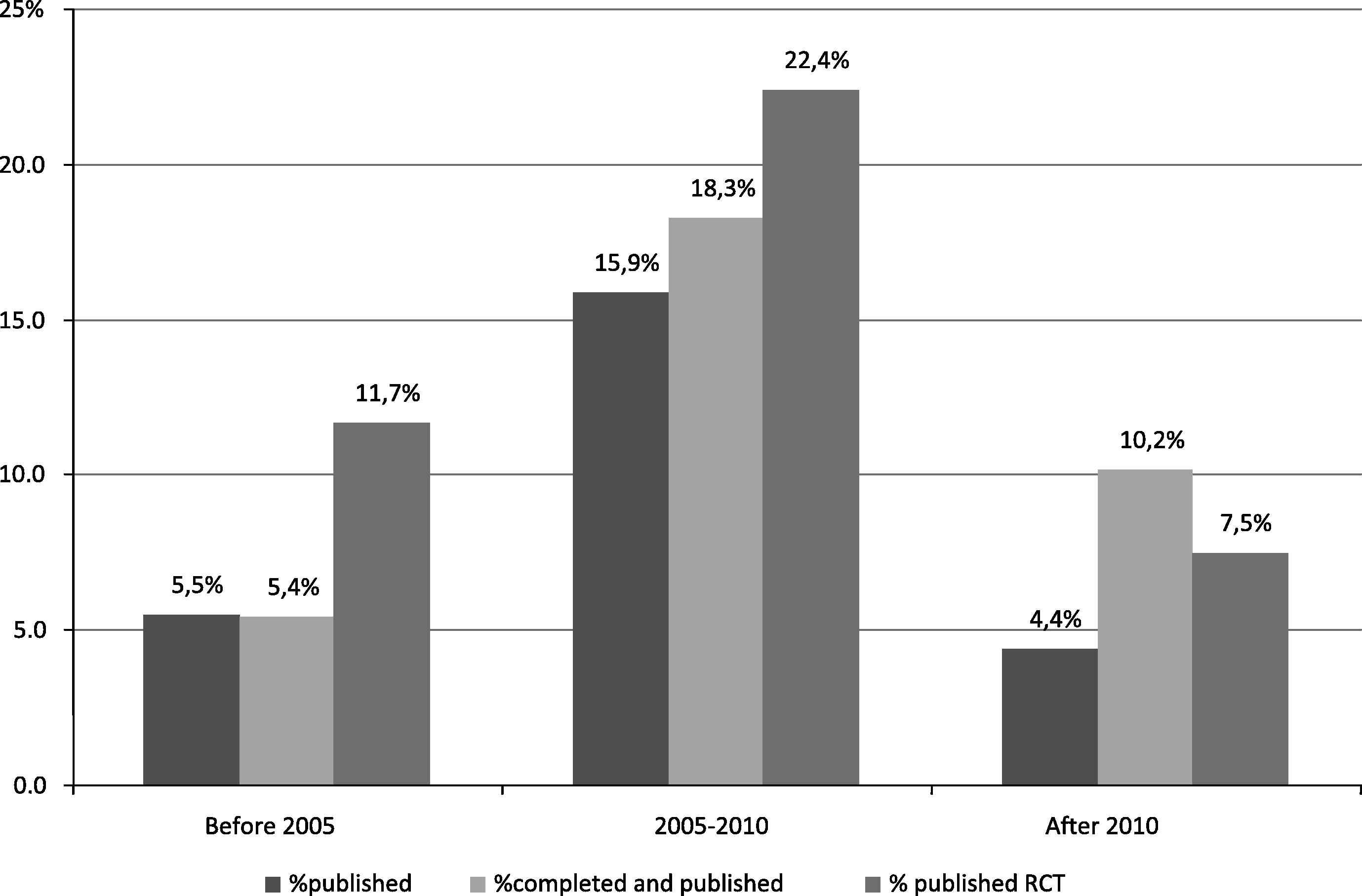

Only 9.4% of all registered studies and 15% of registered and completed studies were published (registration records in ClinicalTrials.gov could be linked to publication records in Medline) (Table 1). Publication rates differed by subject and study characteristics (Table 1). The highest publication rate of 22.4% was among the randomized trials completed between 2005 and 2010 (Fig. 2). The second highest publication rate of 22% was among the registered studies with the results posted in ClinicalTrials.gov. For the majority of registered studies (86% overall and 78% of completed registered studies), no results were available in ClinicalTrials.gov or in journal publications.

Fig. 2. Percentage of published among registered studies by the year of completion. RCT: randomized controlled clinical trials. Series shown: % published among all registered; % completed and published among all completed studies; % published RCTs among all registered RCTs.

| Study categories (as reported in ClinicalTrials.gov) | Study characteristics | Unpublished | Published/total (%) |
|---|---|---|---|
| Subject age | Exclusively children | 7115 | 1087/8202 (13.25) |
| | All ages | 117554 | 11851/129405 (9.16) |
| Subject gender | Both | 105656 | 11177/116833 (9.57) |
| | Female | 11945 | 1251/13196 (9.48) |
| | Male | 6513 | 490/7003 (7) |
| Examined interventions | Drug | 61142 | 6626/67768 (9.78) |
| | Non drugs | 63527 | 6312/69839 (9.04) |
| Study type | Interventional | 99936 | 11712/111648 (10.49) |
| | Observational | 24126 | 1207/25333 (4.76) |
| Study design | Non randomized studies | 58911 | 3382/62293 (5.43) |
| | Randomized controlled clinical trials | 62136 | 9399/71535 (13.14) |
| | Not specified | 3622 | 157/3779 (4.15) |
| Posted the results on ClinicalTrials.gov | Has results | 6034 | 1658/7692 (21.55) |
| | No results available | 118635 | 11280/129915 (8.68) |
| The FDA definition of the phases of clinical trials | Phase 0 | 785 | 39/824 (4.73) |
| | Phase 1 | 15182 | 813/15995 (5.08) |
| | Phase 1/Phase 2 | 5090 | 431/5521 (7.81) |
| | Phase 2 | 23511 | 2017/25528 (7.9) |
| | Phase 2/Phase 3 | 2474 | 432/2906 (14.87) |
| | Phase 3 | 16252 | 3402/19654 (17.31) |
| | Phase 4 | 12996 | 1783/14779 (12.06) |
| Funding | Industry funding | 46757 | 4810/51567 (9.33) |
| | All other funding sources | 77912 | 8128/86040 (9.45) |
| Recruitment | Active, not recruiting | 14783 | 1695/16478 (10.29) |
| | Approved for marketing | 33 | 4/37 (10.81) |
| | Available | 81 | 2/83 (2.41) |
| | Completed | 55311 | 9366/64677 (14.48) |
| | Enrolling by invitation | 2084 | 86/2170 (3.96) |
| | No longer available | 42 | 5/47 (10.64) |
| | Not yet recruiting | 7110 | 76/7186 (1.06) |
| | Recruiting | 35697 | 1290/36987 (3.49) |
| | Suspended | 781 | 12/793 (1.51) |
| | Temporarily not available | 19 | 0/19 (0) |
| | Terminated | 6346 | 386/6732 (5.73) |
| | Withdrawn | 1950 | 8/1958 (0.41) |
| | Withheld | 432 | 8/440 (1.82) |
| | Expanded access | 175 | 11/186 (5.91) |
| Total | Total | 124669 | 12938/137607 (9.4) |

Table 1. Publication status of registered studies.
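
The counts in Table 1 allow a quick unadjusted check of the funding comparison. The sketch below computes the overall publication rate and a crude odds ratio with a Wald 95% confidence interval; this is a textbook 2×2 calculation offered for illustration, not the multivariate model the authors fitted in SAS, and the function name is hypothetical.

```python
import math

def odds_ratio_with_ci(pub_a, unpub_a, pub_b, unpub_b, z=1.96):
    """Crude odds ratio of publication (group A vs. group B) with a Wald 95% CI."""
    odds_ratio = (pub_a / unpub_a) / (pub_b / unpub_b)
    se_log_or = math.sqrt(1 / pub_a + 1 / unpub_a + 1 / pub_b + 1 / unpub_b)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper

# Counts taken from Table 1: published and unpublished studies by funding source.
industry_published, industry_unpublished = 4810, 46757
other_published, other_unpublished = 8128, 77912

overall_rate = 100 * 12938 / 137607
crude_or, lower, upper = odds_ratio_with_ci(
    industry_published, industry_unpublished, other_published, other_unpublished
)
print(f"Overall publication rate: {overall_rate:.1f}%")
print(f"Crude OR, industry vs. other funding: {crude_or:.2f} ({lower:.2f} to {upper:.2f})")
```

The crude ratio is close to 1, whereas the adjusted estimates reported in Table 2 are lower. That gap is consistent with the authors' choice to adjust for study type, design, and participant characteristics before comparing funding sources.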

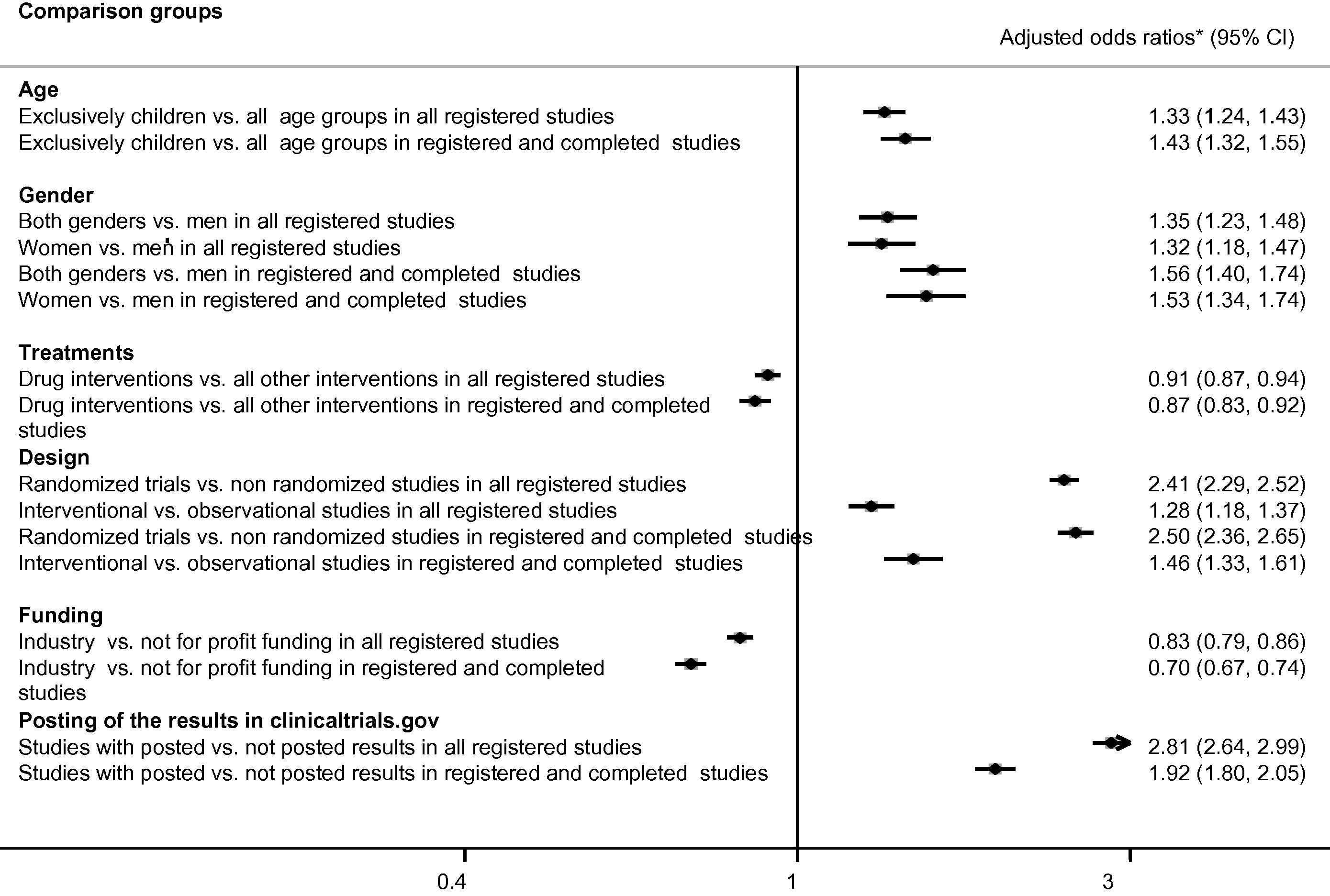

Multivariate analysis demonstrated that participant age and gender, interventional study design, tested interventions, and industry funding were associated with the odds of publication. Registered studies enrolling exclusively children published results more often than studies enrolling participants of all ages (Fig. 3). Registered studies that enrolled men and women or exclusively women published results more often than studies that enrolled exclusively men.

Fig. 3. Publication of the studies registered in ClinicalTrials.gov. * Odds ratios were adjusted for all listed variables: subject age, gender, randomized design, funding, intervention types, and posting of the results on ClinicalTrials.gov.

Interventional studies had greater odds of publication compared with observational studies. Randomized trials had greater odds of publication compared with nonrandomized studies. Studies with results posted on ClinicalTrials.gov had greater odds of publication compared with the studies without posting the results. The registered studies examining drugs had lower odds of publication compared with all other studies.

The studies funded by industry published results less often than all other studies. The described associations were significant irrespective of the recruitment status (Fig. 3). Survival analysis also demonstrated lower publication rates of industry-funded studies (adjusted hazard ratio 0.87, 95% CI 0.82–0.91) and of studies examining pharmacological treatments (adjusted hazard ratio 0.94, 95% CI 0.90–0.99).

This research examined whether study completion modified the association between funding source and the publication. For instance, the studies funded by industry vs. not-for-profit sources published results less often irrespective of the recruitment status of both drug studies and all combined nondrug studies (Table 2). However, the type of the examined interventions modified the association between funding source and publication. In fact, industry funding was associated with lower rates of publication for all drug studies and completed studies examining drugs, devices, and procedures. Industry-funded genetic studies published the results more often than the studies with not-for-profit funding sources. The subject allocation also modified the association between funding source and publication. Industry funding was associated with a lower rate of publication for randomized trials but not for nonrandomized studies (Table 2).

| Comparison groups | Examined interventions | All registered studies: odds ratio (lower 95% CI to upper 95% CI) | Completed studies: odds ratio (lower 95% CI to upper 95% CI) |
|---|---|---|---|
| Industry funding vs. other | Randomized trials | 0.80 (0.77 to 0.84) | 0.64 (0.60 to 0.67) |
| | Non randomized studies | 0.90 (0.83 to 0.97) | 0.93ᵇ (0.85 to 1.01) |
| | Randomized trials of drugs | 0.81 (0.77 to 0.85) | 0.66 (0.62 to 0.70) |
| | Randomized trials of non drug interventions | 0.86 (0.80 to 0.92) | 0.78 (0.72 to 0.84) |
| | Non randomized studies of drugs | 0.86 (0.80 to 0.92) | 0.78 (0.72 to 0.84) |
| | Non randomized studies of non drug interventions | 0.81 (0.77 to 0.85) | 0.66 (0.62 to 0.70) |
| | Drug | 0.83 (0.78 to 0.87) | 0.66 (0.62 to 0.71) |
| | All non drug interventions | 0.84 (0.78 to 0.89) | 0.78 (0.72 to 0.84) |
| | Behavioral | 1.13 (0.81 to 1.56) | 1.19 (0.81 to 1.75) |
| | Biological | 1.00 (0.86 to 1.15) | 1.02 (0.86 to 1.21) |
| | Device | 0.87 (0.75 to 1.00) | 0.73 (0.61 to 0.87) |
| | Dietary supplement | 0.84 (0.62 to 1.15) | 0.71 (0.50 to 1.00) |
| | Drug | 0.83 (0.79 to 0.87) | 0.67 (0.63 to 0.71) |
| | Genetic | 2.51 (1.29 to 4.87) | 1.34 (0.55 to 3.27) |
| | Other | 0.82 (0.64 to 1.03) | 0.63 (0.48 to 0.84) |
| | Procedure | 0.82 (0.65 to 1.02) | 0.73 (0.55 to 0.99) |
| | Radiation | 0.88 (0.31 to 2.53) | 0.99 (0.21 to 4.74) |
| Adult vs. child | Drug interventions | 0.57 (0.50 to 0.64) | 0.69 (0.61 to 0.79) |
| | All non drug interventions | 0.76 (0.68 to 0.84) | 0.47 (0.40 to 0.54) |
| Adult vs. senior | Drug interventions | 0.53ᵃ (0.34 to 0.81) | 0.74ᵇ (0.45 to 1.21) |
| | All non drug interventions | 0.78ᵃ (0.55 to 1.11) | 0.39ᵇ (0.23 to 0.67) |
| Child vs. child adult | Drug interventions | 1.13ᵃ (0.96 to 1.33) | 1.65ᵇ (1.37 to 2.00) |
| | All non drug interventions | 1.46ᵃ (1.27 to 1.69) | 1.15ᵇ (0.95 to 1.40) |
| Child vs. senior | Drug interventions | 0.93 (0.59 to 1.44) | 1.06 (0.65 to 1.75) |
| | All non drug interventions | 1.03 (0.72 to 1.48) | 0.84 (0.49 to 1.44) |

ᵃ Differences by intervention status among all studies.

ᵇ Differences by intervention status among completed studies.

Table 2. Adjusted odds ratio of publication status by industry funding, examined interventions, and subject age.

Registered studies enrolling exclusively adults vs. exclusively children or vs. exclusively seniors had lower odds of publication irrespective of intervention type and recruitment status (Table 2). The odds of publication did not differ among registered studies enrolling exclusively children vs. exclusively seniors irrespective of intervention type or recruitment status (Table 2).

Because of low publication rates, the results were not available for 73% of the enrolled participants in the completed studies and for 75% of the enrolled subjects in terminated studies (Table 3). Thus, since many studies were not reported at all, the results for more than 70% of the enrolled participants are unknown. The time between study start and completion was shorter for industry-funded (1.8 years) vs. other studies (3.1 years) and for drug studies (2.2 years) vs. other interventions (3 years) (data not shown). The time periods between reporting of results in ClinicalTrials.gov and study completion and study publication in PubMed averaged around 2 years.

| Recruitment and publication status | Sumᵃ | Mean ± standard deviation | Maximum | % of total |
|---|---|---|---|---|
| Active, not recruiting | 9766327 | 623 ± 3695 | 91000 | 16.83 |
| Completed | 24851854 | 415 ± 2531 | 98832 | 42.82 |
| Enrolling by invitation | 1825475 | 851 ± 3657 | 61864 | 3.15 |
| No longer available | 1000 | 1000 | 1000 | 0.002 |
| Not yet recruiting | 2791464 | 398 ± 2052 | 55000 | 4.81 |
| Recruiting | 17097671 | 470 ± 2552 | 89646 | 29.46 |
| Suspended | 191589 | 248 ± 913 | 12000 | 0.33 |
| Terminated | 1312538 | 201 ± 1211 | 50000 | 2.26 |
| Withdrawn | 203141 | 108 ± 569 | 10000 | 0.35 |
| Completed and published | 6711031 | 746 ± 3474 | 98832 | |
| Completed but unpublished | 18140823 | 356 ± 2321 | 98483 | 73% of all completed |
| Terminated and published | 333745 | 900 ± 3014 | 32867 | |
| Terminated and unpublished | 978793 | 159 ± 989 | 50000 | 75% of all terminated |
| Total unpublished | 47298465 | 401 ± 2462 | 98483 | |
| Total published | 10742594 | 864 ± 3896 | 98832 | 18.51 |

ᵃ For validation purposes, studies with enrollment values >99,999 were excluded.

Table 3. Participant enrollment by recruitment and publication status.
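
The 73% and 75% figures can be reproduced directly from the enrollment sums in Table 3. The short check below is only an arithmetic illustration; the variable and function names are hypothetical.

```python
# Enrollment sums from Table 3 (participants, after excluding enrollment values >99,999).
completed_total = 24_851_854
completed_published = 6_711_031
completed_unpublished = 18_140_823
terminated_total = 1_312_538
terminated_published = 333_745
terminated_unpublished = 978_793

def percent(part, whole):
    """Share of `whole` represented by `part`, in percent."""
    return 100.0 * part / whole

# The published and unpublished sums should add up to each status total.
assert completed_published + completed_unpublished == completed_total
assert terminated_published + terminated_unpublished == terminated_total

print(f"Completed studies, participants without published results: "
      f"{percent(completed_unpublished, completed_total):.0f}%")
print(f"Terminated studies, participants without published results: "
      f"{percent(terminated_unpublished, terminated_total):.0f}%")
```

Both shares are computed over enrolled participants rather than over studies, which is why they differ from the study-level publication rates in Table 1.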

4. Discussion

Very low publication rates were found for studies registered in ClinicalTrials.gov. For the majority of registered studies (86% overall and 78% of completed registered studies), no results were available in ClinicalTrials.gov or in journal publications. These results are in concordance with previously published studies confirming that the results are not available for the public from the majority of clinical research [17,21,25].

These results were compared with the existing literature according to the methods used to detect publications of the registered studies. When researchers searched for publications by the names of the principal investigators, study titles, or examined drugs in addition to registration numbers, they reported similar (much less than 50%) or only slightly higher publication rates [17,25]. When researchers found registration numbers in the full texts of the articles retrieved for inclusion in systematic reviews, the rates were similar to those in this study [19,26]. These search findings could be affected by poor compliance of authors and journal editors with reporting the registration of published manuscripts [17,27,28]. Reported differences in the availability of the results from clinical research reflect several accountability gaps:

- (1) The trial registry does not have a single field in which to mark study applicability to the federal law to post the results;

- (2) Journal editors published unregistered trials or did not require consistent reporting of the valid registration status in the abstracts;

- (3) PubMed indexed trials without provided valid registration numbers.

The present study has limitations. No analysis was performed as to whether journals adhered to the policy of publishing only registered studies [15]. Only one trial registry, ClinicalTrials.gov, was analyzed since all U.S. studies should be registered in this registry [29]. Other registries do not allow posting the results which makes result availability analysis difficult. The authors of the articles were not contacted to request additional information about study registration [30]. Also, principal investigators of the registered studies were not contacted for additional information about study enrollment or missing information. Actual publication bias was not analyzed by examining greater odds of publication of the studies with favorable statistically significant results [8], nor were deviations from the protocols in the published articles [31], or selective outcome reporting examined [32]. It was not a goal of this study to compare publication rates by way of searching for publications associated with each registered study, because to do so would have been time-prohibitive and unfeasible for clinicians, healthcare consumers or policy makers. Nonetheless, the straightforward search methodology followed in this study should have resulted in easy identification of publications of the registered studies.

It was concluded that existing clinical research policies do not guarantee availability of research results in general. Odds of the publication also differed depending on participant age and gender as well as study funding and design. Studies funded by industry vs. other funding sources and drug studies vs. all other interventions were published less often after adjustment for study type, subject characteristics, or posting of results in ClinicalTrials.gov. Therefore, policy revisions should specifically address the studies with the lowest publication rates, namely industry-funded drug studies.

Several initiatives focus on improving result availability from clinical studies. The recently proposed Trial and Experimental Studies Transparency (TEST) Act (H.R. 6272) would expand the mandatory registration and results posting for all interventional studies of drugs or devices regardless of study design, clinical trial phase, treatment marketing status, or trial location [33]. Under TEST, all American and foreign trials of the drugs or devices marketed in the United States must be registered and the results must be posted in ClinicalTrials.gov within 2 years after study completion (including trials of unapproved drugs or devices) [33]. Under TEST, the investigators will be required to post subject flow, baseline and post-treatment outcomes, and adverse effects. In addition, TEST requires posting of the deposition of consent and protocol documents approved by institutional review boards [33]. This legislation is timely and important.

The international AllTrials campaign (available at http://www.alltrials.net) calls for registration and results reporting for all clinical studies. Sharing clinical research data is supported by the World Health Organization (WHO), the US National Institutes of Health (NIH), the Cochrane Collaboration, the Institute of Medicine, and other respected international organizations [34]. All clinical studies should be registered before recruitment of the first participant [18,35] in trial registries that comply with the WHO minimum dataset [36]. Trial registration should be mandatory irrespective of trial phase or design, study completion, foreign participant residency, market status of the examined interventions, or voluntary publication status [18,35,37,38].

Better coordination between the Office of Human Research Protection and the Office of Research Integrity is necessary to guarantee human subject protection and availability of the results. Routine monitoring would be more efficient and cost-effective with better harmonization and consistent use of unique study identification numbers across all databases, including ClinicalTrials.gov, Medline, the NIH grant database, and the FDA database. TEST legislation should require: (1) registration of all human trials as a condition of enrollment approval by institutional review boards; (2) validation of the provided information; and (3) accountability of sponsors for complete, accurate, and timely updated information. Continuous harmonization of IRB electronic databases of approved protocols with the trial registry would preclude subject enrollment in unregistered studies and thus guarantee registration of all human trials.

Potential trial participants should be advised to participate only in registered studies with publicly available protocols and written assurance of the result availability [39].

Modern information technologies enable inexpensive and effective monitoring of compliance with the policy as well as availability of the results. Trial registries should have analyzable fields for trial completeness, applicability to FDA regulations, enrollment status, and exact reasons for termination. Automated alerts could be used to inform principal investigators, institutional review boards, and regulatory agencies about deadlines to comply with policy, enrollment status, and availability of the results.
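
One way such an automated alert could work is sketched below. It is purely illustrative: the field names (`nct_id`, `status`, `completion_date`, `has_results`) are hypothetical and do not correspond to any confirmed ClinicalTrials.gov export format, and the one-year deadline is an assumption chosen for the example.

```python
from datetime import date, timedelta

# Assumption for illustration: results are expected within one year of completion.
RESULTS_DEADLINE = timedelta(days=365)

def overdue_trials(trials, today):
    """Return NCT numbers of completed or terminated trials with no results past the deadline."""
    flagged = []
    for trial in trials:
        finished = trial["status"] in {"Completed", "Terminated"}
        if finished and not trial["has_results"]:
            if today - trial["completion_date"] > RESULTS_DEADLINE:
                flagged.append(trial["nct_id"])
    return flagged

# Hypothetical registry extract.
trials = [
    {"nct_id": "NCT00000001", "status": "Completed",
     "completion_date": date(2010, 6, 1), "has_results": False},
    {"nct_id": "NCT00000002", "status": "Completed",
     "completion_date": date(2012, 6, 1), "has_results": False},
    {"nct_id": "NCT00000003", "status": "Terminated",
     "completion_date": date(2009, 1, 15), "has_results": True},
    {"nct_id": "NCT00000004", "status": "Recruiting",
     "completion_date": None, "has_results": False},
]
print(overdue_trials(trials, today=date(2012, 12, 1)))
```

The flagged list could then drive notifications to principal investigators, institutional review boards, or funders, which in turn depends on the registry exposing analyzable fields for completion status and results posting.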

Principal investigators and sponsors should be accountable for data integrity during registration and publication processes (Table 4) [35]. This study could not explain why some publications included NCTs that never actually existed, or why the recruitment status was omitted or different in the published articles compared with the protocols posted in ClinicalTrials.gov [35].

| | Registration | Consent form | Study status | Results |
|---|---|---|---|---|
| Principal investigators | Register protocols in ClinicalTrials.gov as part of the IRB application | Include study registration number in the consent form | Updating recruitment status in ClinicalTrials.gov | Posting of study results in ClinicalTrials.gov, including: |
| Institutional Review Boards (IRBs) | Approve enrollment for registered protocols only | Approve consent form with trial registration number | Suspension of trials that did not provide complete and accurate information about study recruitment and termination. Any changes in the protocol or subject enrollment must be posted in ClinicalTrials.gov | Disciplinary and financial actions against principal investigators who did not post the results in ClinicalTrials.gov within 1 year after completion or termination of the trial. Assuring a proper data de-identification for sharing the results |
| Data Safety Monitoring Boards (DSMB) | | | Disclosure of safety outcomes in ClinicalTrials.gov. Disclosure of reasons for termination or suspension to ClinicalTrials.gov | |
| ClinicalTrials.gov | | | Adding downloading option for routine monitoring fields for exact reasons for termination or suspension of clinical trials. Creating automated alerts for trials that did not update recruitment status according to the dates in the protocol. Informing IRBs (automated emails) about incomplete information regarding protocols, recruitment status, and reasons for trial incompletion by principal investigators | Creating automated alerts for trials that did not post the results within 1 year after completion or termination of the studies. Informing IRBs (via automated emails) about absent or incomplete posting of the results from clinical trials. Informing the NIH funding agencies (automated emails) about absent or incomplete posting of the results from the NIH funded trials |
| NIH Funding Agencies | Adding downloadable field in the Research Portfolio Online Reporting Tools (RePORT) grant database with a registration status and registration identification number for all clinical trials funded by the NIH | | | Disciplinary and financial actions against principal investigators who did not post the results in ClinicalTrials.gov within 1 year after completion or termination of the NIH funded trials |
| Stakeholders in the results of clinical research | Participants are informed about posted protocols and plans to post the results from clinical trials. | | Participants are informed about early termination or suspension of the trials. Investigators who conduct independent analysis of reported findings can easily analyze incompletion of clinical trials by the topic of research, treatment, sponsor, or subject characteristics | Participants are informed about posted results from clinical trials. Investigators who conduct independent analysis of reported findings can make valid conclusions for informed decision-making in clinical settings |

Poor publication rates of the registered studies demonstrate a need for revisions in clinical research policy in order to guarantee availability of the results [33]. They point to serious deficiencies in the current research enterprise that threaten the societal goal of evidence-based practice [18].

Conflict of interest

The authors declare that there is no conflict of interest.

Acknowledgements

We would like to thank Charley Mullin, SAS Certified Programmer for SAS 9, for his help in formatting the databases; the editor, Jeannine Ouellette, for her help in writing this paper; and Dr. David Goldmann for his editorial comments.

Appendix A. Supplementary data

Supplementary data associated with this article can be found, in the online version, at http://dx.doi.org/10.1016/j.jegh.2013.08.002.

References

Cite this article

TY - JOUR AU - Tatyana A. Shamliyan AU - Robert L. Kane PY - 2013 DA - 2013/09/23 TI - Availability of results from clinical research: Failing policy efforts JO - Journal of Epidemiology and Global Health SP - 1 EP - 12 VL - 4 IS - 1 SN - 2210-6014 UR - https://doi.org/10.1016/j.jegh.2013.08.002 DO - 10.1016/j.jegh.2013.08.002 ID - Shamliyan2013 ER -