Development of a System to Detect Eye Position Abnormality based on Eye-Tracking

- DOI

- 10.2991/jrnal.k.210922.011

- Keywords

- Eye position examination; cover test; strabismus/heterophoria; compact camera

- Abstract

In previous research, we developed a method to automate the conventional evaluation of eye misalignment (cover test) and distinguish misaligned eyes from their movements. However, this method had the problem that vertical eye movements were affected by the eyelids and eyelashes, and could not be completely detected. To solve this problem, we have developed another method to recognize abnormalities by observing only the movement near the center of the pupil.

- Copyright

- © 2021 The Authors. Published by Atlantis Press International B.V.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

Misalignment of the eyes such as strabismus and heterophoria causes defective binocular vision and eyestrain, making early detection and training essential. Because strabismus in children is a particular risk factor that causes amblyopia, the diagnosis of strabismus at an early age and the start of appropriate treatment have been active research topics in pediatric ophthalmology [1]. In practice, only highly qualified personnel such as ophthalmologists and orthoptists are able to diagnose eye misalignment. However, given the ongoing shortage of such skilled personnel, developing a method to verify eye alignment without trained staff is becoming an important priority [1,2].

Eye position examinations are classified into two categories: qualitative and quantitative. A qualitative test checks for deviations, while a quantitative test assesses the magnitude of the ocular deviation.

Research on methods for measuring the amount of ocular deviation has been previously reported [2]. However, the proposed approaches work only for exotropia, for which the eye position is easy to assess during measurement. We have explored the idea of using an existing eye-tracking system [3,4] as an alternative tool. However, this approach requires tuning various parameters, such as the installation site and the lighting, in order to detect the very slight eye movements that may suggest strabismus or heterophoria. Following the recent development of medical image diagnostic systems [5–7], we have also considered creating a machine learning-based detection system using sequential images of eye movements during examination. Unfortunately, we found it difficult to collect training data, since eye position examination has not previously been a procedure for image diagnosis.

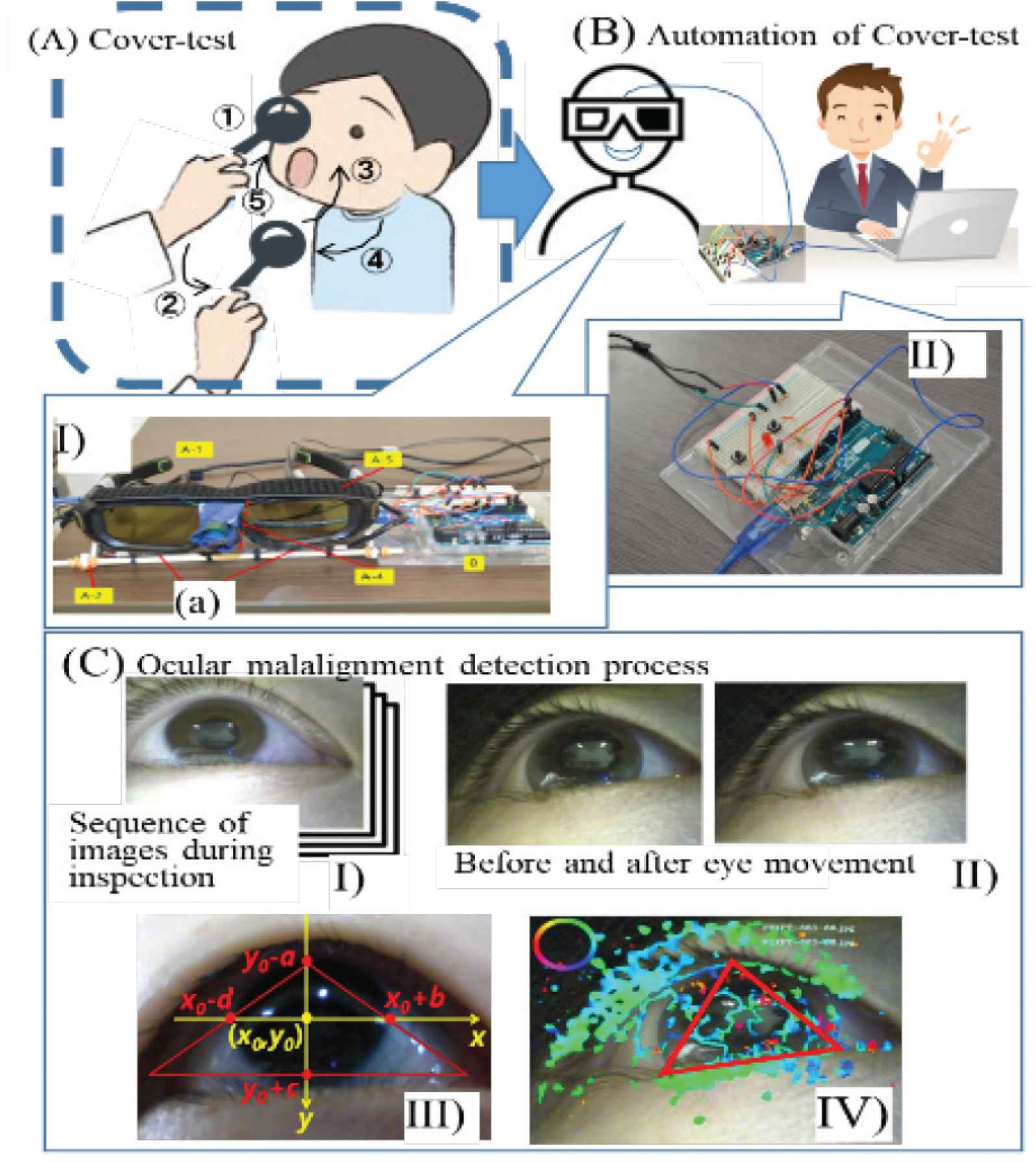

As a result, we developed a basic inspection system capable of screening for strabismus and heterophoria without such restrictions. The system automates the inspection process of the traditional qualitative test, the Cover-Test [1] (Figure 1A and 1B-I, II), and establishes and systematizes a procedure for detecting eye movement abnormalities (Figure 1C-I to IV). The Cover-Test distinguishes tropia from heterophoria while the subject fixates on an object. Tropia is determined if the exposed eye moves when the other eye is covered. If the covered eye moves when it is uncovered, it is judged to indicate heterophoria. The conventional approach is to cover and uncover the eyes with an occluder and evaluate abnormality by visual inspection (Figure 1A). In our previous research, we used Head Mounted Display (HMD)-compatible 3D glasses (Nvidia 3D Vision2 [8]) to automate the shielding function.

Figure 1. Cover-test automation and abnormality detection based on eye movement.

With the method as originally developed, we extracted the area for anomaly detection from the video of the area around the eye (Figure 1C-III). Then, using optical flow [9], we developed a series of processes that detect eye movement (Figure 1C-IV) and assess abnormality [10,11]. We recorded the area around each eye with an ultra-compact camera (Figure 1B-I-a) mounted within the left and right lenses. Eye movements displaying signs of eye misalignment occur 7–8 s after being covered or exposed. Therefore, we extracted a series of images taken at intervals of approximately 0.2 s during that span. However, due to the influence of the eyelids and eyelashes, the method was limited in its ability to observe vertical eye movements.

Although vertical strabismus/heterophoria does not occur as often as horizontal strabismus, early-stage diagnosis is crucial, as it can be a sign of severe disorders such as cerebral palsy and heart failure. Moreover, the number of cases has increased over the past few years due to the aging of the population [12]. Thus, there is considerable significance in developing a system for detecting strabismus and heterophoria in the vertical direction.

2. RESEARCH PURPOSE

The goal of this study was to develop an alternative method for detecting eye misalignment that could distinguish not only horizontal but also vertical eye movements. Based on the issues that arose in our previous study, we devised a technique to detect abnormalities by monitoring only the movement near the center of the pupil, where the eyelids and eyelashes are less likely to interfere.

The remainder of the paper proceeds as follows: Section 3 explains the proposed method, Section 4 introduces the performance evaluation experiments used to assess the method, and Section 5 summarizes the study and its results.

3. PROPOSED METHOD

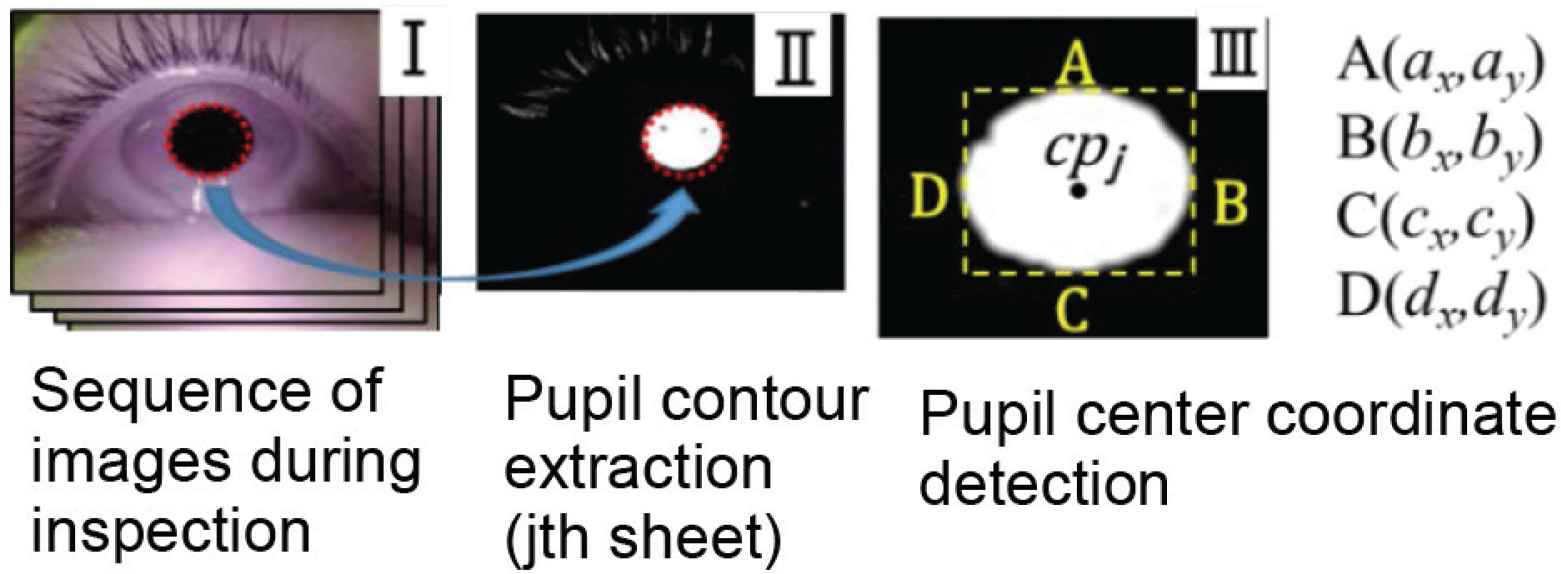

We used an infrared camera to capture images of the pupil and extract its contour. Focusing on the dispersion of the distance of the pupil center from the reference point (Section 3.3), we found that we could detect strabismus and heterophoria in both the horizontal and vertical directions. The three component processes are explained below: (1) extraction of the pupil area (Figure 2-I, II), (2) measurement of the pupil center coordinates (Figure 2-III), and (3) determination of abnormality.

Figure 2. Process of pupil center detection.

3.1. Extraction of the Pupil Area

We first attempted to extract the pupil contour with the existing ellipse fitting method [13]. However, this proved insufficient to detect the slight eye movements that indicate strabismus and heterophoria. Therefore, we additionally used the color identification function based on the RGB color model provided in OpenCV, and found that we could extract the pupil contour by setting the range of RGB values from (0, 0, 0) to (15, 15, 15).
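The color-range extraction can be illustrated with a minimal sketch. This is not the authors' implementation: it uses plain Python lists rather than OpenCV so that it runs without dependencies, and the names `pupil_mask` and `mask_points` are hypothetical. Only the range (0, 0, 0) to (15, 15, 15) comes from the text. In OpenCV, the same per-pixel range test is typically performed with `cv2.inRange`.

```python
# Sketch only: per-pixel RGB range test, assuming the image is stored
# as rows of (R, G, B) tuples. Function names are illustrative.

LOWER = (0, 0, 0)      # lower RGB bound given in the text
UPPER = (15, 15, 15)   # upper RGB bound given in the text


def pupil_mask(image, lower=LOWER, upper=UPPER):
    """Return a boolean mask that is True where a pixel lies in the range."""
    return [
        [all(lo <= ch <= hi for ch, lo, hi in zip(px, lower, upper)) for px in row]
        for row in image
    ]


def mask_points(mask):
    """List the (x, y) coordinates of all pixels selected by the mask."""
    return [(x, y) for y, row in enumerate(mask) for x, hit in enumerate(row) if hit]


# Tiny 3x3 example: one dark pixel surrounded by brighter ones.
image = [
    [(200, 180, 170), (120, 110, 100), (200, 180, 170)],
    [(120, 110, 100), (5, 4, 6), (120, 110, 100)],
    [(200, 180, 170), (16, 10, 10), (200, 180, 170)],
]
dark_pixels = mask_points(pupil_mask(image))
```

The pixel (16, 10, 10) is rejected because its red channel exceeds the upper bound, which shows how narrow the accepted range is.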

3.2. Calculation of Pupil Center Coordinates

Figure 2-III shows the coordinates of the extracted pupil area. The position where the y-coordinate is maximum is A (ax, ay); the minimum y-coordinate is at C (cx, cy). The position where the x-coordinate is maximum is B (bx, by); the minimum is at D (dx, dy). We calculate the pupil central coordinates cp as follows.
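As an illustration only, one plausible reading of this step is that cp is the midpoint of the horizontal and vertical extremes, cp = ((bx + dx)/2, (ay + cy)/2). That formula is an assumption made for this sketch, not a formula confirmed by the text, and the function name `pupil_center` is hypothetical.

```python
# Sketch only: pupil center as the midpoint of the extreme points
# A, B, C, D of the extracted pupil area (assumed formula).

def pupil_center(points):
    """Estimate the pupil center from (x, y) points of the pupil area."""
    a = max(points, key=lambda p: p[1])  # A: maximum y-coordinate
    c = min(points, key=lambda p: p[1])  # C: minimum y-coordinate
    b = max(points, key=lambda p: p[0])  # B: maximum x-coordinate
    d = min(points, key=lambda p: p[0])  # D: minimum x-coordinate
    # Assumption: the center is the midpoint of the x and y extremes.
    return ((b[0] + d[0]) / 2, (a[1] + c[1]) / 2)


# Example: four extreme points of a roughly circular region.
region = [(5, 1), (9, 5), (5, 9), (1, 5), (5, 5)]
center = pupil_center(region)
```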

3.3. Anomaly Evaluation

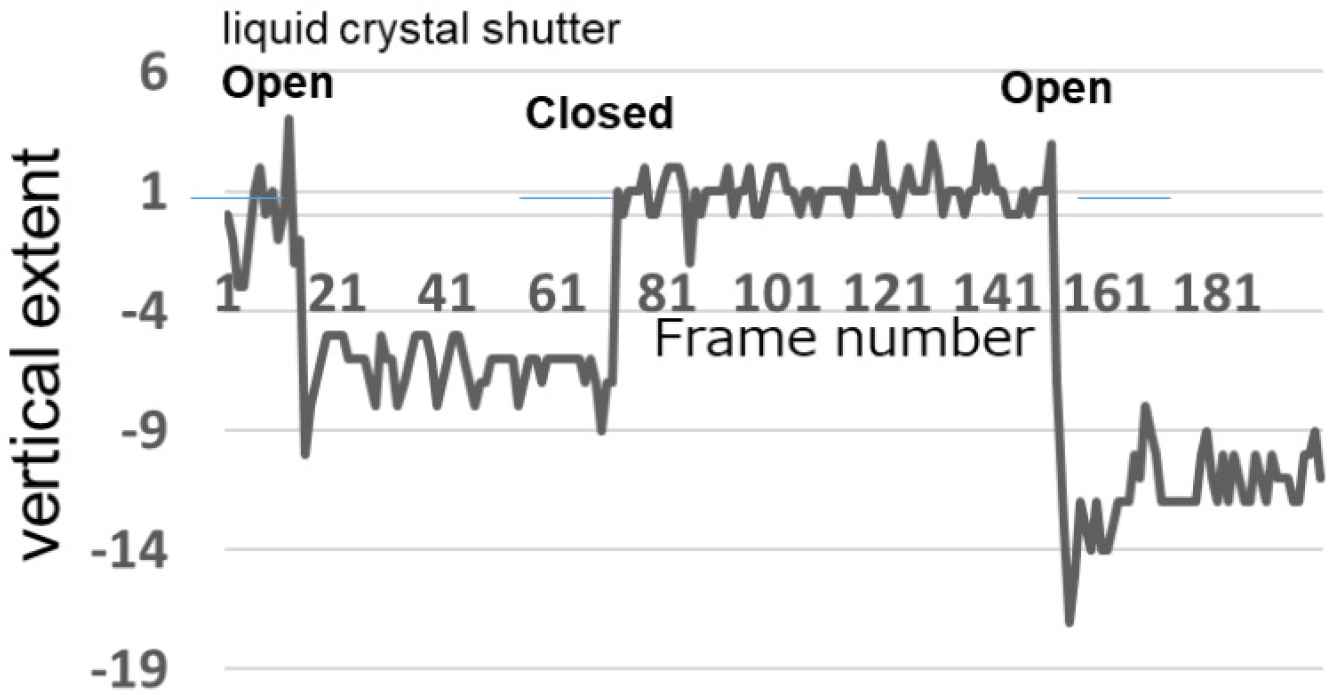

In our previous study, we measured the abnormality of constant eye movements using an automatic Cover-Test apparatus (Figure 1B). We found it possible to evaluate an anomaly by checking the eye movements seen continuously in the same direction in photographs taken at 0.2-s intervals for approximately 7–8 s immediately after the cover/uncover, specifically the fourth to tenth pictures.

Abnormality correlates with the variance of the distance between the reference point, defined as the pupil center position at the start of the examination, and the pupil center position at each time point (see Figure 3). The initial prediction was that the degree of abnormality would be correlated with the absolute distance of eye movement during the examination. However, the findings of the study did not show any such correlation. The most plausible explanation for this is that the distance between the eyes and the infrared camera differs depending on the subject. We therefore set the measurement parameter based on the variance of the distance of the pupil center from the reference point and carried out an abnormality judgment based on this evaluation criterion.

Figure 3. Distance from the reference point to the center position of the pupil at each time point (when the angle of deviation in strabismus was 3Δ (prism)).

3.4. Setting the Evaluation Criteria

In applying the procedure, we produce a series of transitions of the pupil center coordinates cpj = (x(cpj), y(cpj)) from a series of image data (referred to as a test image) taken from around the eye (1 ≤ j ≤ n, where j is the image number within the series). Next, we set the center coordinates cp1, taken from the first image of the series, as the reference point. The horizontal and vertical distances from the reference point to the center coordinates cpj (2 ≤ j ≤ n) on the second and subsequent images are measured. We then obtain the variances of these horizontal and vertical distances, s²x and s²y, about their respective averages.
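The variance computation can be sketched as follows. This is a hedged illustration rather than the authors' code: it assumes signed horizontal and vertical offsets from cp1 and the population variance (dividing by n − 1 offsets, that is, by the number of measured distances), neither of which is stated explicitly in the text. The function name `displacement_variances` is hypothetical.

```python
# Sketch only: variance of the horizontal and vertical distances of
# each pupil center cp_j (j >= 2) from the reference point cp_1.
from statistics import pvariance


def displacement_variances(centers):
    """Return (s2x, s2y) for a sequence of (x, y) pupil centers."""
    if len(centers) < 2:
        raise ValueError("need the reference image plus at least one more")
    x_ref, y_ref = centers[0]                    # cp_1, the reference point
    dx = [x - x_ref for x, _ in centers[1:]]     # horizontal distances
    dy = [y - y_ref for _, y in centers[1:]]     # vertical distances
    # Assumption: population variance about the mean distance.
    return pvariance(dx), pvariance(dy)


# Example: the eye drifts horizontally and stays level vertically.
centers = [(10, 10), (11, 10), (13, 10), (12, 10)]
s2x, s2y = displacement_variances(centers)
```

Because the variance is taken about the mean offset, a constant shift of every frame relative to cp1 does not change the result; only the spread of the movement does.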

Applying the above method, we determined the variances of all the collaborators to produce the study data and established the correct judgment results for each individual using the Maddox rod, which is a precision inspection device.

We then set the criterion by comparing each variance with the Maddox rod test results. Table 1 indicates the evaluation criterion that was established with the aid of 44 collaborators. Among these, there were 13 anomalies in the right position and 36 in the assumed horizontal strabismus or oblique position. In 15 cases, the angle of deviation in strabismus was 1Δ (prism), while for another 15, the angle was 2Δ. Three had an angle of 3Δ, and three had an angle of 4Δ.

| Deviation angle (vertical) | Mean of variance (vertical) | Deviation angle (horizontal) | Mean of variance (horizontal) |
|---|---|---|---|
| <1Δ | 1.43 | <1Δ | 2.10 |
| 1Δ | 1.90 | 1Δ | 2.56 |
| 2Δ | 4.02 | 2Δ | 5.63 |
| 3Δ | 9.66 | 3Δ | 11.60 |
| 4Δ | 16.73 | 4Δ | 21.10 |

Table 1. Evaluation criteria for abnormality.
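The text does not state how a measured variance is mapped onto these criteria. One simple possibility, shown here purely as an assumption, is to assign the deviation level whose mean variance in Table 1 is closest to the measured value. The names `VERTICAL_MEANS`, `HORIZONTAL_MEANS`, and `nearest_level` are hypothetical; level 0 stands for the "<1Δ" row.

```python
# Sketch only: nearest-mean lookup against the Table 1 values.
# The decision rule is an assumption, not the authors' stated method.

# (deviation level in prism units, mean of variance); 0 means "<1".
VERTICAL_MEANS = [(0, 1.43), (1, 1.90), (2, 4.02), (3, 9.66), (4, 16.73)]
HORIZONTAL_MEANS = [(0, 2.10), (1, 2.56), (2, 5.63), (3, 11.60), (4, 21.10)]


def nearest_level(variance, table):
    """Return the deviation level whose mean variance is closest."""
    level, _ = min(table, key=lambda row: abs(row[1] - variance))
    return level


vertical_level = nearest_level(9.0, VERTICAL_MEANS)
horizontal_level = nearest_level(5.0, HORIZONTAL_MEANS)
```

A threshold placed between adjacent means would be an equally reasonable rule; the nearest-mean form is used here only because it needs no parameters beyond the table itself.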

To generate pseudo-strabismus data supplementing the small number of study data cases available for developing a criterion for vertical strabismus, we followed the specified procedure under the guidance of an expert, with the aid of eight of the 13 collaborators noted above.

Apart from the main contents of the proposed detection method, fully automating the proposed judgment process required us to identify blinking and exclude the corresponding images. In addition, we needed to identify the images at which the shutter opens and closes. We automated these processes and thereby automated the entire detection process.

4. VERIFICATION EXPERIMENTS

4.1. Experiment 1

In the first experiment, we produced anomaly judgments using the proposed method for 20 subjects, then compared the results with those of the Maddox rod test. To assess the performance of our system, we used the concordance rate between the two methods. Table 2-(1) displays the results. The V (vertical) and H (horizontal) labels indicate the direction of the eye movement; the values attached to V and H represent the angle of strabismus. For example, in V0H6, V0 indicates vertical normalcy and H6 indicates a horizontal strabismus/heterophoria of approximately 6Δ. H0-1 denotes a horizontal anomaly of less than 1Δ. The results obtained using the proposed system differed from the correct results obtained with the Maddox rod test for only two of the subjects, B and R. Thus, the overall concordance rate was 90% (18/20).
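The label notation can be made concrete with a small parser. This is an illustrative sketch: the names `parse_label` and `is_normal` are hypothetical, and treating "normal" as equivalent to V0H0 and any component of "0" or "0-1" as normal is an assumption based on the description of normal as an abnormality smaller than 1Δ.

```python
# Sketch only: parse labels such as "V0H6" or "V0H0-1" into their
# vertical and horizontal components.
import re

_LABEL = re.compile(r"^V(\d+(?:-\d+)?)H(\d+(?:-\d+)?)$")


def parse_label(label):
    """Split a judgment label into its V and H angle strings."""
    if label == "normal":
        # Assumption: "normal" means no deviation in either direction.
        return {"V": "0", "H": "0"}
    match = _LABEL.match(label)
    if match is None:
        raise ValueError(f"unrecognized label: {label!r}")
    return {"V": match.group(1), "H": match.group(2)}


def is_normal(label):
    """True when both components are below 1 prism unit."""
    return all(value in ("0", "0-1") for value in parse_label(label).values())


example = parse_label("V0H6")
```

Under this reading, a Maddox result of V0H0-1 and a system result of "normal" count as agreeing, which matches how the concordance rate is reported.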

| Subject (Experiment 1) | True value (Maddox) | System’s judgement result | Subject (Experiment 2) | True value (expected value) | System’s judgement result |
|---|---|---|---|---|---|
| A | normal | normal | A′ | V4H0 | V4H0 |
| B | V0H6 | V3H5 | B′ | V2H0 | V2H0 |
| C | normal | normal | C′ | V0H2 | V0H2 |
| D | normal | normal | D′ | V0H2 | V1H2 |
| E | normal | normal | E′ | V3H0 | V3H0 |
| F | normal | normal | F′ | V3H0 | V3H0 |
| G | V0H0-1 | normal | G′ | V2H0 | V1H0 |
| H | normal | normal | H′ | V0H1 | V0H1 |
| I | normal | normal | I′ | V1H0 | V1H0 |
| J | V0H0-1 | normal | J′ | V0H2 | V1H2 |
| K | normal | normal | K′ | V4H0 | V4H0 |
| L | normal | normal | L′ | V0H1 | V0H1 |
| M | VH0-1 | normal | M′ | V1H0 | V0H0 |
| N | normal | normal | N′ | V0H2 | V0H2 |
| O | normal | normal | O′ | V3H0 | V3H0 |
| P | V0H5 | V0H5 | P′ | V1H0 | V1H0 |
| Q | normal | normal | Q′ | V0H1 | V0H1 |
| R | V3H12 | V4H5 | R′ | V1H0 | V1H0 |
| S | normal | normal | S′ | V2H0 | V2H0 |
| T | normal | normal | T′ | V2H0 | V2H1 |

Table 2. Results of (1) Experiment 1 and (2) Experiment 2.

4.2. Experiment 2

As mentioned, our study sought to develop a method to detect misalignment in both horizontal and vertical eye movements. However, of the 20 participant cases, only one case showed an abnormality in the vertical direction, suggesting that we would be unable to achieve our stated research goal in the current study.

To address this, we performed further experimental verification using pseudo-strabismus data. Fifteen of the 20 subjects, each with a normal system judgment result in Experiment 1 (abnormality smaller than 1Δ), were chosen as collaborators: subjects A, C, D, E, F, G, H, I, K, L, N, O, Q, S, and T. We then acquired pseudo-strabismus data for these 15 people, covering four types of abnormality (1Δ, 2Δ, 3Δ, 4Δ) in each of the vertical and horizontal directions. From the resulting 8 × 15 = 120 data items, we randomly selected 20 cases (A′–T′) as test data, produced an abnormality judgment for each case, and compared it with the true value expected when the pseudo data were created.
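The size of the pseudo-data pool and the random selection can be sketched as follows. The subject list, the two directions, and the four angles come from the text; the tuple layout, the fixed seed, and the use of `random.Random.sample` are assumptions made so that the example is reproducible, not a description of how the authors drew their sample.

```python
# Sketch only: enumerate the 8 x 15 pseudo-strabismus cases and draw
# 20 of them without replacement.
import itertools
import random

SUBJECTS = list("ACDEFGHIKLNOQST")   # the 15 collaborators named in the text
DIRECTIONS = ("V", "H")              # vertical and horizontal
ANGLES = (1, 2, 3, 4)                # deviation angles in prism units

# Every (subject, direction, angle) combination: 15 * 2 * 4 cases.
all_cases = list(itertools.product(SUBJECTS, DIRECTIONS, ANGLES))

rng = random.Random(0)               # assumption: fixed seed for repeatability
test_cases = rng.sample(all_cases, 20)
```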

Table 2-(2) shows the results. As indicated, the system's judgment differed from the expected value in five of the 20 cases (D′, G′, J′, M′, T′), giving a concordance rate of 75% (15/20).
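The concordance rate for Experiment 2 can be reproduced directly from Table 2-(2). The sketch below transcribes the expected and judged labels and counts exact matches; the dictionary name `RESULTS` and the use of an ASCII apostrophe for the prime mark are choices made for this example.

```python
# Sketch only: exact-match concordance on the Table 2-(2) labels.
# Each entry maps a case to (expected value, system's judgment).
RESULTS = {
    "A'": ("V4H0", "V4H0"), "B'": ("V2H0", "V2H0"), "C'": ("V0H2", "V0H2"),
    "D'": ("V0H2", "V1H2"), "E'": ("V3H0", "V3H0"), "F'": ("V3H0", "V3H0"),
    "G'": ("V2H0", "V1H0"), "H'": ("V0H1", "V0H1"), "I'": ("V1H0", "V1H0"),
    "J'": ("V0H2", "V1H2"), "K'": ("V4H0", "V4H0"), "L'": ("V0H1", "V0H1"),
    "M'": ("V1H0", "V0H0"), "N'": ("V0H2", "V0H2"), "O'": ("V3H0", "V3H0"),
    "P'": ("V1H0", "V1H0"), "Q'": ("V0H1", "V0H1"), "R'": ("V1H0", "V1H0"),
    "S'": ("V2H0", "V2H0"), "T'": ("V2H0", "V2H1"),
}

# Cases where the judgment differs from the expected value.
mismatches = [case for case, (expected, judged) in RESULTS.items()
              if expected != judged]
concordance = (len(RESULTS) - len(mismatches)) / len(RESULTS)
```

Each of the five mismatches differs from the expected label by exactly 1Δ in a single direction.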

4.3. Discussion

4.3.1. Experiment 1

The system’s horizontal test result for Subject B was an abnormality of 5Δ, as shown in Table 2-(1), which was generally consistent with the Maddox correct result of 6Δ. However, in the same subject’s vertical test, the method identified a 3Δ anomaly, while the Maddox result was normal, suggesting a large discrepancy.

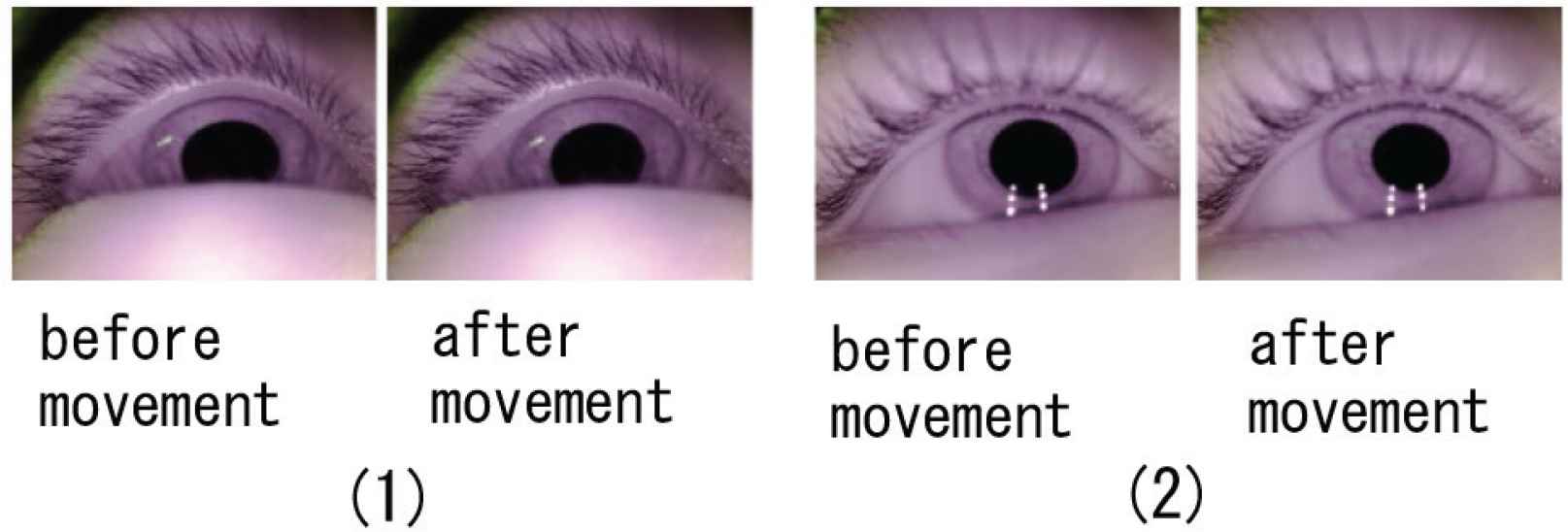

In an effort to explain this discrepancy, we noted that the lower eyelid and pupil overlapped significantly in the test image of subject B (see Figure 4). As a consequence, we failed to accurately identify the area near the center of the pupil, which likely led to the wrong decision. Taking photos of the eyes from the front should eliminate the problem. However, the orientation and angle of the camera are important. The test for subject R using the system produced discrepancies in both the vertical and horizontal results, with a particularly significant disparity between the horizontal test result and the correct result. This discrepancy was due to the eye being inclined obliquely in the inspection image. As with subject B, the problem should be resolved by adjusting the camera angle.

Figure 4. Comparison of subject B with images of other general subjects. (1) Image of subject B. (2) Image of a general subject.

4.3.2. Experiment 2

As shown in Table 2-(2), the five cases {D′, G′, J′, M′, T′} that disagreed with the expected result differed from it only slightly. We classified the causes of these differences into three groups: (1) camera angle issues, as with subjects B and R in Experiment 1, which adjusting the camera shooting angle should resolve; (2) failure to produce proper pseudo-strabismus data (three cases); and (3) distortion of the pupil contour caused by hard contact lenses, which we considered unrelated to the system (one case). Based on this analysis, we considered only one of the five cases to be an actual failure of the system.

5. SUMMARY

We developed an alternative method to automate strabismus/heterophoria screening. The method effectively detects not only horizontal eye movements but vertical eye movements as well.

The proposed method is less susceptible to the eyelids and eyelashes, which make it difficult to recognize vertical eye movement. Specifically, our proposed system senses only the motion of the center of the pupil, where eyelids and eyelashes are less likely to interfere. In our approach, we take an image of the area around the pupil under examination with an infrared camera. The pupil region, which appears black, is then extracted with RGB values from (0, 0, 0) to (15, 15, 15), and the center of the region is set as the pupil center. We then measure the variance of the distance of each pupil center position from the reference point and use it as the criterion for detecting anomalies.

We set up an inspection framework based on the proposed approach and conducted a performance evaluation test. First, we set the assessment parameters from 96 analysis cases: 44 standard inspection results and 52 pseudo-data cases (four pseudo-data types × 13). We then used the method to produce anomaly judgments for 40 test data cases (20 inspection data cases and 20 pseudo-data cases) and compared each result to the correct result in order to assess the performance of the proposed system.

In our evaluation experiments, the apparent success rate was 82.5% (33/40). However, among the seven failures, we found that four were unrelated to the proposed method (three involved improper pseudo data, and one involved the use of hard contact lenses). Thus, the method produced only three actual decision errors out of the 40 cases tested, for a success rate of 92.5% (37/40). Based on our findings, we identified pupil center detection errors associated with the shooting angle of the infrared camera and suggested improvements to be considered in future research.
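The two success rates follow from the counts reported in Sections 4.1 to 4.3, as the short calculation below shows. The variable names are illustrative; all numbers are taken from the text.

```python
# Sketch only: recompute the apparent and adjusted success rates.
from fractions import Fraction

total_cases = 40            # 20 cases in each experiment
agree_exp1 = 18             # Experiment 1: 18/20 concordant
agree_exp2 = 15             # Experiment 2: 15/20 concordant

apparent_rate = Fraction(agree_exp1 + agree_exp2, total_cases)

failures = total_cases - (agree_exp1 + agree_exp2)
unrelated = 3 + 1           # improper pseudo data + hard contact lenses
actual_errors = failures - unrelated

adjusted_rate = Fraction(total_cases - actual_errors, total_cases)

print(f"apparent: {float(apparent_rate):.1%}, adjusted: {float(adjusted_rate):.1%}")
```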

CONFLICTS OF INTEREST

The authors declare they have no conflicts of interest.

ACKNOWLEDGMENT

This work was supported by JSPS KAKENHI Grant Number JP19H00505.

AUTHORS INTRODUCTION

Mr. Noriyuki Uchida

He is an orthoptist. He is currently a doctoral course student at the University of Miyazaki, Japan. His research interests include visual optics, strabismus and amblyopia.


Dr. Kayoko Takatuka

She is an expert technical staff member with the Faculty of Engineering, University of Miyazaki, Japan. She graduated from Kumamoto University, Japan, in 1992, and received the PhD degree from the Interdisciplinary Graduate School of Agriculture and Engineering, University of Miyazaki, Japan, in 2014. Her research interest is process systems engineering.


Dr. Hisaaki Yamaba

He received the B.S. and M.S. degrees in Chemical Engineering from the Tokyo Institute of Technology, Japan, in 1988 and 1990, respectively, and the PhD degree in Systems Engineering from the University of Miyazaki, Japan in 2011. He is currently an Assistant Professor with the Faculty of Engineering, University of Miyazaki, Japan. His research interests include network security and user authentication. He is a member of SICE and SCEJ.


Prof. Masayuki Mukunoki

He is now a Professor of Faculty of Engineering at University of Miyazaki in Japan. He received the bachelor, master and doctoral degrees in Information Science from Kyoto University. His research interests include computer vision, pattern recognition and video media processing.


Prof. Naonobu Okazaki

He received his B.S., M.S., and PhD degrees in Electrical and Communication Engineering from Tohoku University, Japan, in 1986, 1988 and 1992, respectively. He joined the Information Technology Research and Development Center, Mitsubishi Electric Corporation in 1991. He has been a Professor with the Faculty of Engineering, University of Miyazaki, since 2002. His research interests include mobile network and network security. He is a member of IPSJ, IEICE and IEEE.


REFERENCES

Cite this article

Noriyuki Uchida, Kayoko Takatuka, Hisaaki Yamaba, Masayuki Mukunoki, Naonobu Okazaki. Development of a System to Detect Eye Position Abnormality based on Eye-Tracking. Journal of Robotics, Networking and Artificial Life, 8(3), 205–210, 2021 (published 2021/10/09). ISSN 2352-6386. https://doi.org/10.2991/jrnal.k.210922.011