Verification of Robustness against Noise and Moving Speed in Self-localization Method for Soccer Robot

- DOI

- 10.2991/jrnal.k.210521.015

- Keywords

- Robustness against noise; robustness against moving speed; self-localization; RoboCup Middle Size League; soccer robot; genetic algorithm

- Abstract

The main focus of the RoboCup competitions is the game of football (soccer), where the research goals concern cooperative multi-robot and multi-agent systems in dynamic environments. In RoboCup, self-localization techniques are crucial for robots to estimate their own position as well as the position of the goal and other robots before deciding on a strategy. This paper presents a self-localization technique using an omnidirectional camera for an autonomous soccer robot. We propose a self-localization method that uses the information on the white lines of the soccer field to recognize the robot position by optimizing the fitness function using a genetic algorithm. Through experiments we verify the robustness of the proposed method against noise and moving speed.

- Copyright

- © 2021 The Authors. Published by Atlantis Press B.V.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

The landmark project RoboCup is a well-known international robotics challenge [1]. This project promotes research on Artificial Intelligence (AI) and robotics with the goal of “creating a team of autonomous humanoid robots that can beat the world-champion soccer team” by 2050 [2]. Various technical challenges must be overcome to achieve this goal. Self-localization—which enables a robot to recognize the real-world environment in real time—is a key function that determines the outcome in any competition because it serves as the starting point for the strategic coordinated movements that follow.

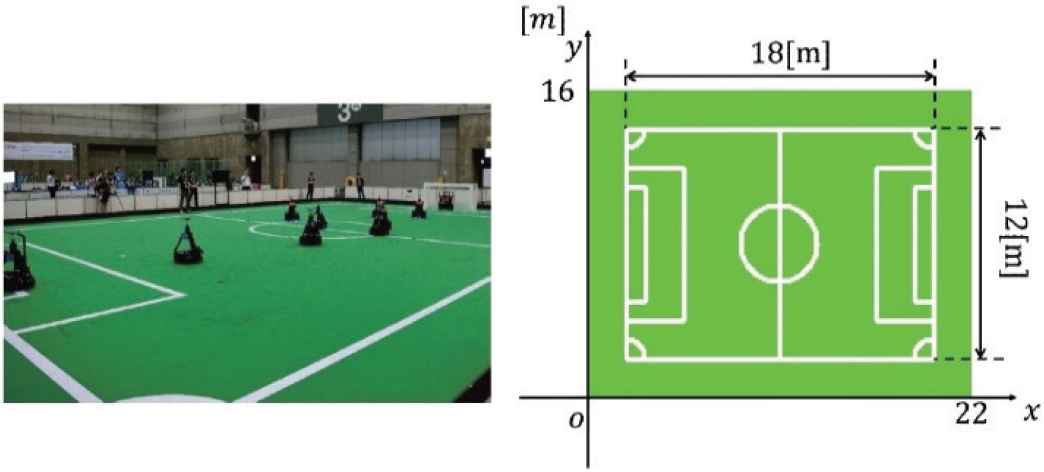

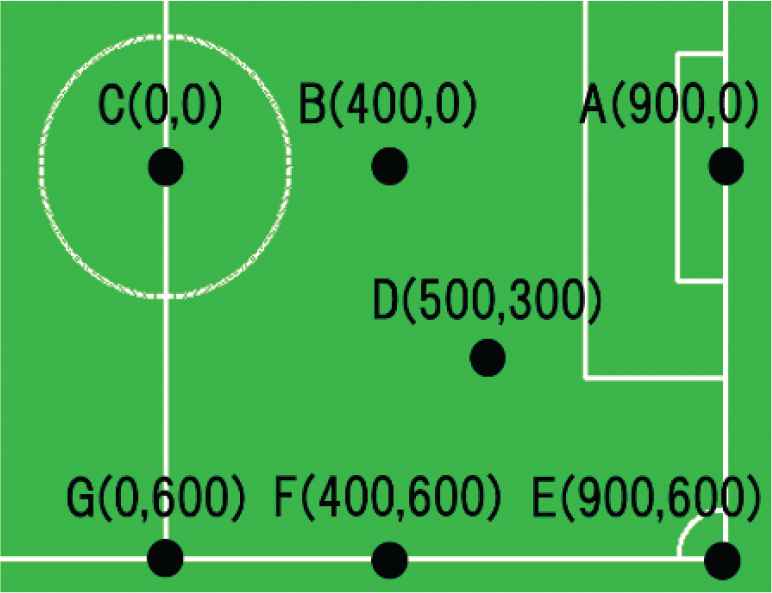

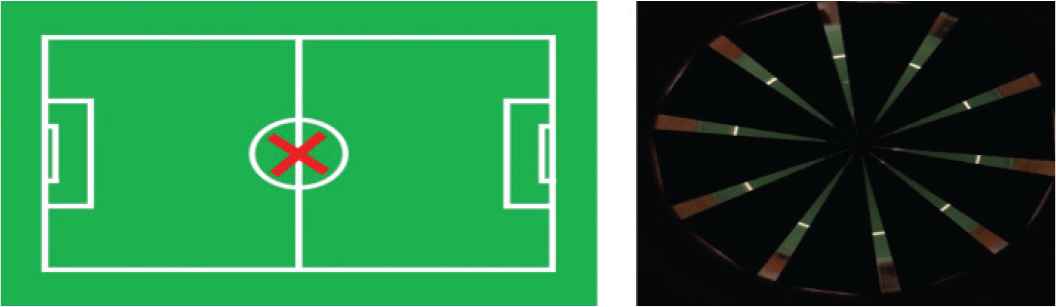

Figure 1 shows the RoboCup Middle Size League (MSL) soccer field, which is the largest field in the RoboCup soccer leagues (12 × 18 m). Many self-localization methods that use the shape of the white lines drawn on the field have been proposed, including a method using the Hough transform [3] and a method based on error minimization [4]. However, the Hough transform-based method requires a long processing time because it performs computations beyond those needed to estimate the robot's position. Error minimization is a local search method, so an initial position estimate must be supplied. To overcome these shortcomings, a method using Monte Carlo localization (MCL) [5,6] with a particle filter has been proposed and adopted by many RoboCup teams. However, improving the localization accuracy of MCL increases its processing time. Furthermore, MCL is known to be relatively vulnerable to the kidnapping problem [7].

Figure 1. RoboCup MSL soccer field.

In this paper, we describe a real-time self-localization method for the RoboCup MSL that applies a Genetic Algorithm (GA) [8]. We estimate the robot's own position using information from the image, the environment, and the field. A search space is formed by model-based matching [9], which matches a search model, built from the shape of the white lines extracted from omnidirectional images, against known field data. A GA then optimizes over this search space, enabling the robot's position to be identified in real time.

The competition environment is a dynamic system in which multiple robots move at high speed and occlude one another, which generates image noise. Moreover, image changes caused by the robot's own movement also affect self-localization, so the robustness of a self-localization method must be evaluated as well. We therefore conducted experiments to verify the robustness of our method against noise and moving speed.

2. SELF-LOCALIZATION USING OMNIDIRECTIONAL CAMERA

We use the white lines on the MSL field for self-localization. Our proposed method generates the search space by model-based matching using white line information, and it recognizes the robot position by optimizing a fitness function that takes its maximum value at the correct robot position. A GA is used to optimize the fitness function.

2.1. Hardware of Vision System

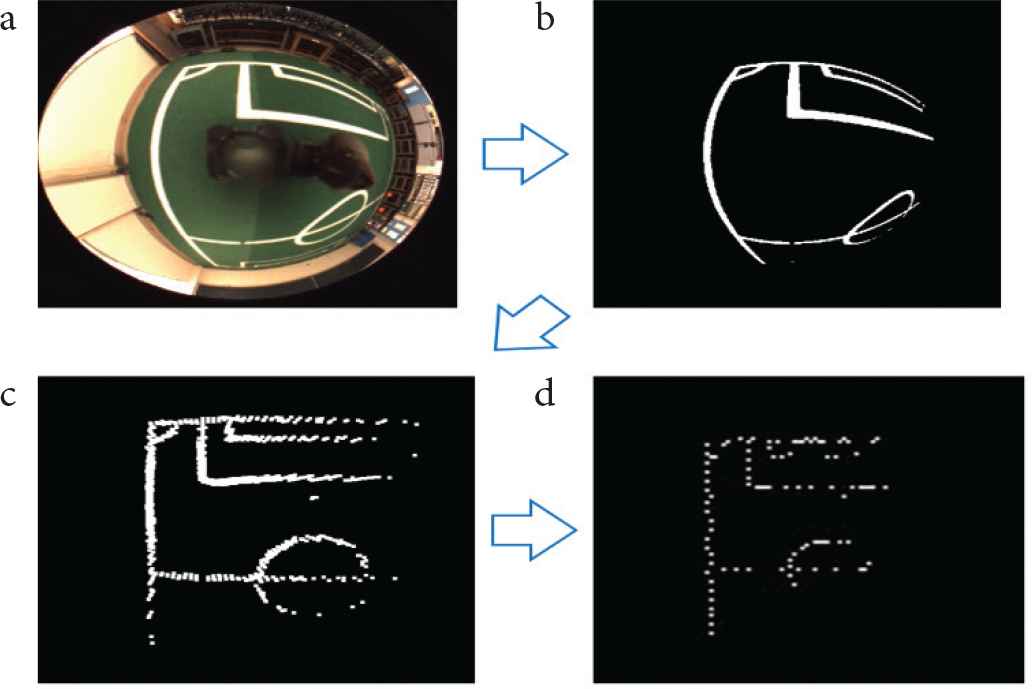

The omnidirectional vision system of our robot consists of a camera (FLIR, Flea3), a varifocal lens (Vstone), and a hyperboloidal mirror (Vstone). We developed the vision system shown in Figure 2 for the RoboCup MSL robot by combining these elements. An image captured by this vision system is shown in Figure 3a. The image size is 512 × 512 pixels and the frame rate is 30 frames per second (fps).

Figure 2. Hardware of vision system.

Figure 3. Process of creating the search model. (a) Original image from camera. (b) White detection image. (c) Orthogonalized image. (d) Search model.

2.2. Searching Model

Figure 3 shows the process of creating the search model for the proposed method. First, a white-line detection image is needed to build the search model. We obtain this white detection image (Figure 3b) by converting the color space of the camera image from RGB to HSV and to YUV. We then generate the field information by orthogonalizing the white line information, as shown in Figure 3c. Finally, we thin the white lines in the field information (Figure 3d) and use the thinned model as the search model for self-localization.
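The white-detection and thinning steps can be sketched as follows. This is a minimal illustration, not the authors' implementation: the HSV/YUV thresholds (`S_MAX`, `V_MIN`, `Y_MIN`) are hypothetical values, the orthogonalization step is omitted, and Zhang-Suen thinning is swapped in as one standard thinning algorithm because the text does not name the one used.

```python
import colorsys

# Hypothetical thresholds; the paper does not publish its values.
S_MAX = 0.25  # maximum HSV saturation for a "white" pixel
V_MIN = 0.75  # minimum HSV value (brightness)
Y_MIN = 0.70  # minimum luma, the Y channel of YUV (BT.601 weights)


def is_white(r, g, b):
    """Classify one 8-bit RGB pixel as white line (True) or not."""
    _, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    y = (0.299 * r + 0.587 * g + 0.114 * b) / 255
    return s <= S_MAX and v >= V_MIN and y >= Y_MIN


def white_mask(image):
    """image: rows of (r, g, b) tuples -> rows of 0/1 white-line flags."""
    return [[1 if is_white(*px) else 0 for px in row] for row in image]


def zhang_suen_thin(mask):
    """Thin a 0/1 mask to roughly 1-pixel-wide lines (Zhang-Suen thinning)."""
    img = [row[:] for row in mask]
    h, w = len(img), len(img[0])
    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_clear = []
            for i in range(1, h - 1):
                for j in range(1, w - 1):
                    if img[i][j] != 1:
                        continue
                    # Neighbours P2..P9, clockwise from the pixel above.
                    p = [img[i - 1][j], img[i - 1][j + 1], img[i][j + 1],
                         img[i + 1][j + 1], img[i + 1][j], img[i + 1][j - 1],
                         img[i][j - 1], img[i - 1][j - 1]]
                    b = sum(p)  # number of foreground neighbours
                    # Number of 0 -> 1 transitions around the pixel.
                    a = sum(p[k] == 0 and p[(k + 1) % 8] == 1 for k in range(8))
                    if not (2 <= b <= 6 and a == 1):
                        continue
                    p2, p4, p6, p8 = p[0], p[2], p[4], p[6]
                    if step == 0:
                        removable = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        removable = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if removable:
                        to_clear.append((i, j))
            # Delete in parallel so each sub-iteration sees an unchanged image.
            for i, j in to_clear:
                img[i][j] = 0
            changed = changed or bool(to_clear)
    return img
```

Border pixels are never examined, which is the usual convention for this algorithm; padding the mask with a zero border avoids losing lines at the image edge.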

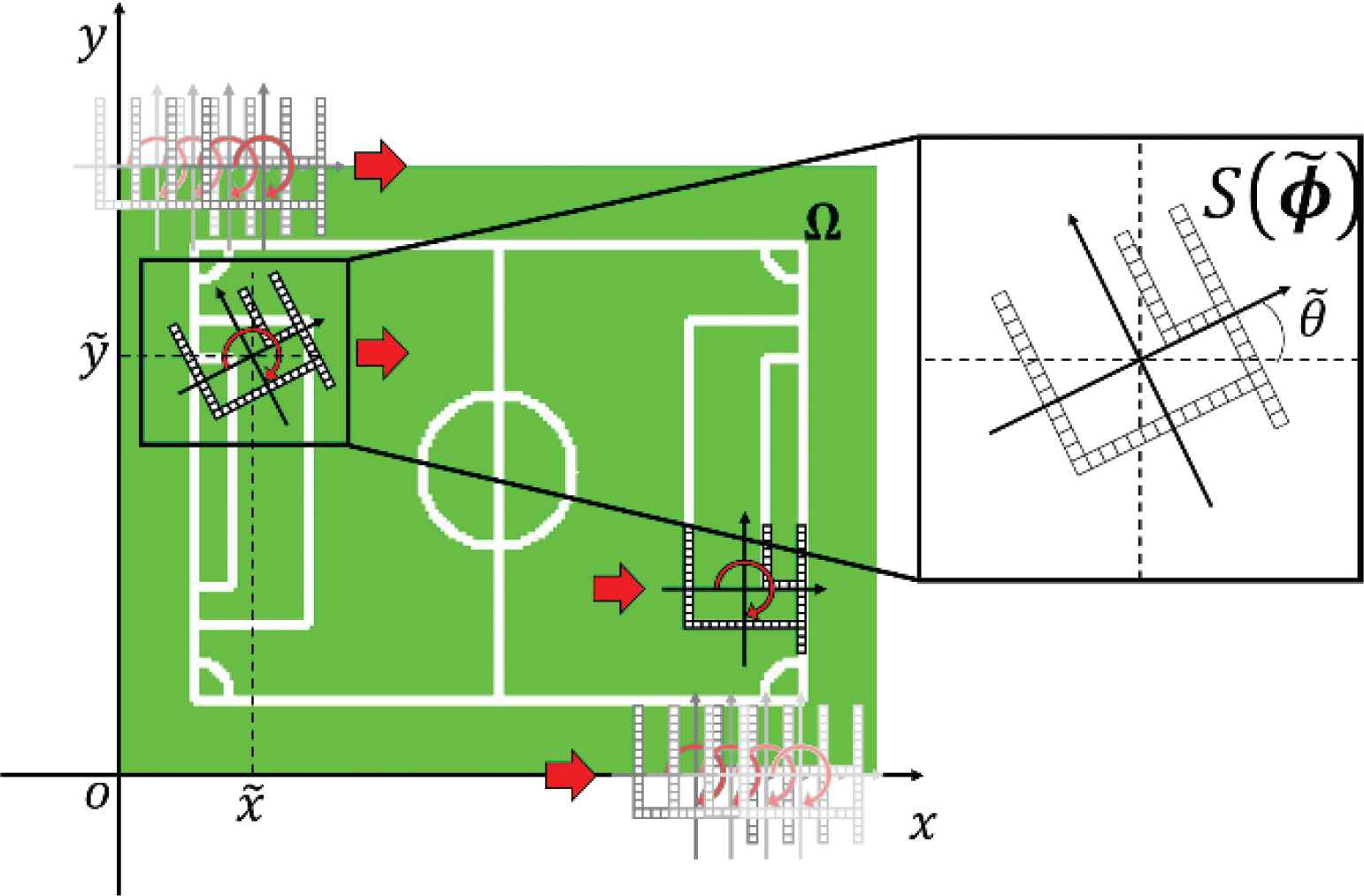

2.3. Model-based Matching

The proposed self-localization method generates the search space by model-based matching between geometric information of the white lines on the MSL field and the above-mentioned searching model shown in Figure 4. The evaluation function

Figure 4. Model-based matching.

Here, the movement of the searching model S in the matching area is expressed as
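The evaluation function itself is not reproduced in this text. As a hedged sketch, assume the fitness of a candidate pose (x, y, θ) is the fraction of search-model points (in the robot frame, in cm) that land on a white-line cell of the known field model after rotation by θ and translation by (x, y). The grid size `CELL_CM`, the boundary-only field model, and all function names are hypothetical.

```python
import math

CELL_CM = 10.0  # hypothetical grid resolution of the field model


def boundary_cells(length_cm, width_cm, cell=CELL_CM):
    """Hypothetical field model: only the outer touch and goal lines,
    rasterised onto a grid of `cell`-sized squares (cell indices)."""
    nx, ny = round(length_cm / cell), round(width_cm / cell)
    cells = {(i, 0) for i in range(nx + 1)} | {(i, ny) for i in range(nx + 1)}
    cells |= {(0, j) for j in range(ny + 1)} | {(nx, j) for j in range(ny + 1)}
    return cells


def transform(model_points, pose):
    """Rotate by theta (counter-clockwise), then translate by (x, y):
    robot frame -> field frame."""
    x, y, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(x + c * u - s * v, y + s * u + c * v) for u, v in model_points]


def matching_fitness(model_points, pose, line_cells, cell=CELL_CM):
    """Fraction of search-model points that fall on a field white-line cell."""
    hits = sum((round(fx / cell), round(fy / cell)) in line_cells
               for fx, fy in transform(model_points, pose))
    return hits / len(model_points)
```

A real field model would also include the center line, center circle, and penalty areas; adding them only changes the contents of the `line_cells` set.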

2.4. Genetic Algorithm

In the proposed self-localization method, we implement a GA to determine the maximum value of the fitness function
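A GA for this maximization can be sketched as below. The operators (binary tournament selection, arithmetic crossover, Gaussian mutation, one elite individual), the population size, and the mutation widths are assumptions chosen for illustration; the paper's own GA settings are not given here. `fitness` can be any function of a pose (x, y, θ), for example a model-based matching score.

```python
import math
import random


def genetic_search(fitness, bounds, pop_size=40, generations=60,
                   sigma=(20.0, 20.0, 0.1), seed=0):
    """Maximise fitness(pose) over poses (x, y, theta) inside `bounds`.

    Generic real-coded GA: binary tournament selection, arithmetic
    crossover, Gaussian mutation and elitism (all assumed operators).
    """
    rng = random.Random(seed)

    def clip(pose):
        return tuple(min(max(p, lo), hi) for p, (lo, hi) in zip(pose, bounds))

    def tournament(scored):
        (fa, a), (fb, b) = rng.sample(scored, 2)
        return a if fa >= fb else b

    population = [tuple(rng.uniform(lo, hi) for lo, hi in bounds)
                  for _ in range(pop_size)]
    for _ in range(generations):
        scored = sorted(((fitness(p), p) for p in population), reverse=True)
        next_gen = [scored[0][1]]  # elitism: the best pose always survives
        while len(next_gen) < pop_size:
            p1, p2 = tournament(scored), tournament(scored)
            w = rng.random()  # arithmetic crossover weight
            child = [w * a + (1 - w) * b for a, b in zip(p1, p2)]
            child = [c + rng.gauss(0.0, s) for c, s in zip(child, sigma)]
            next_gen.append(clip(child))
        population = next_gen
    return max(population, key=fitness)
```

Keeping the best individual (elitism) guarantees that the best fitness in the population never decreases from one generation to the next.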

2.5. Verification Experiment

To verify the effectiveness of the proposed method, we performed a self-localization experiment that measured the error between the correct position and the detected position on the MSL field. The maximum error was 36.1 cm and the average error was 12.8 cm. These errors are small compared with the field size, indicating that the robot's position can be estimated with sufficiently high accuracy.

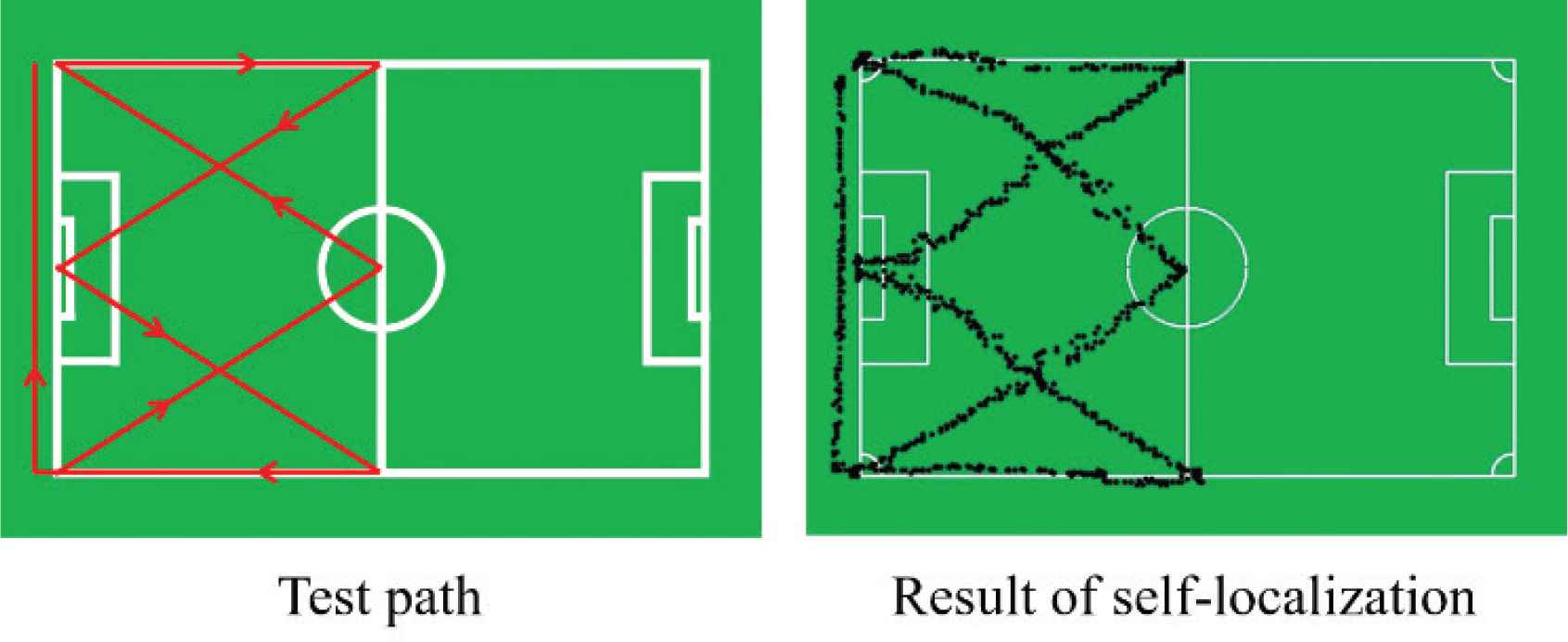

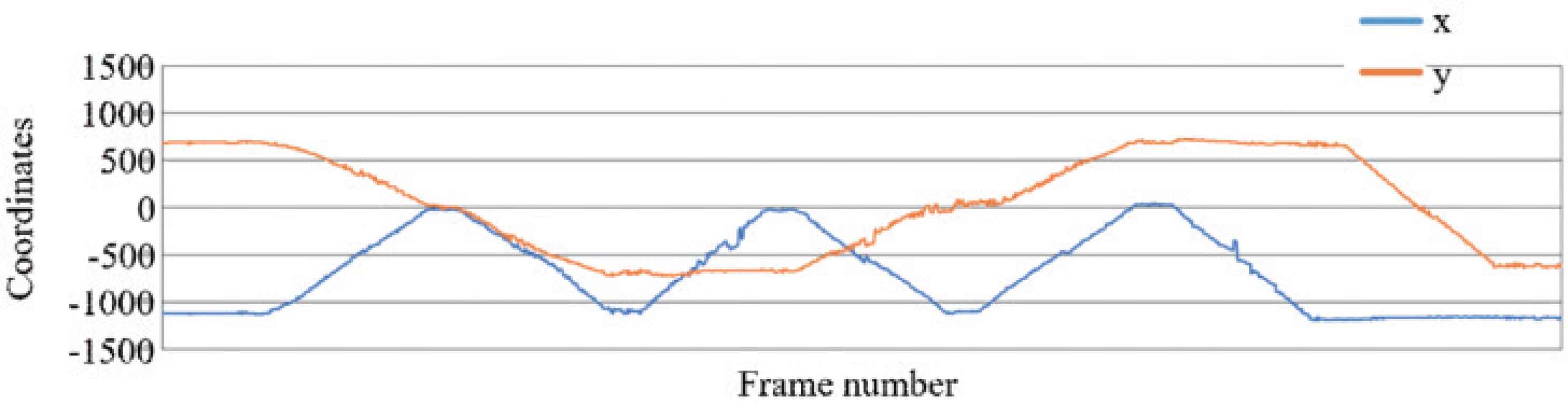

We conducted an additional verification experiment in an actual environment to confirm that the recognized position changes smoothly; in this experiment, the robot moved along the test path on the field shown on the left side of Figure 5. The self-localization results are shown on the right side of the figure, where the black dots represent the detected position at each camera frame. We also recorded the x- and y-coordinates of the detected position at each frame number along the test path, as shown in Figure 6. The results show that the self-localization result did not deviate significantly and that the detected coordinates are continuous.

Figure 5. Result of self-localization on test path.

Figure 6. Coordinates of detected position at each frame number.

3. VERIFICATION OF ROBUSTNESS AGAINST NOISE

The accuracy reported so far was measured in an ideal environment with no other robots on the soccer field. In a real game, however, up to 10 robots may be on the same field, together with people such as the referee and the line referees. The robot may then be unable to recognize its own position correctly because these people and robots occlude parts of the field. Therefore, we conducted additional experiments to verify the robustness of the proposed method against varying amounts of noise.

3.1. Experiment

In a real environment, many kinds of noise may appear in the image, which makes controlled quantitative experiments difficult. We therefore performed verification experiments by adding artificial noise.

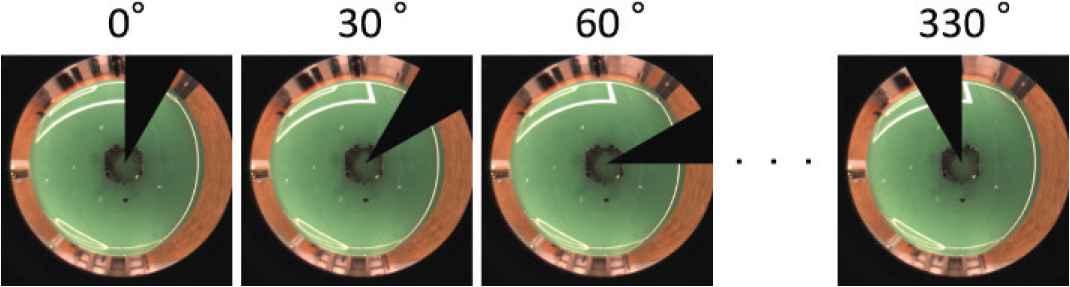

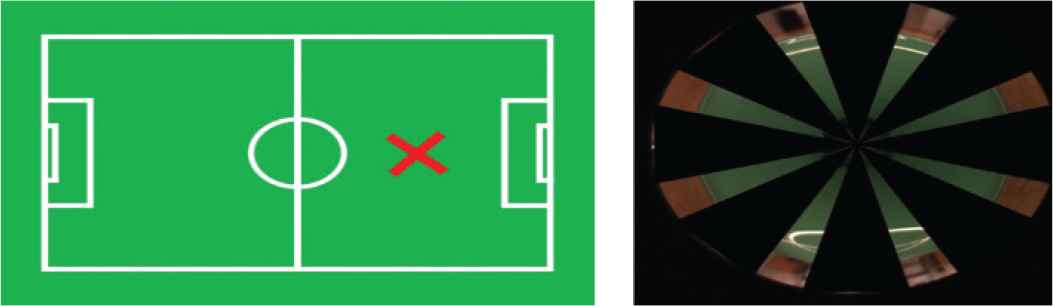

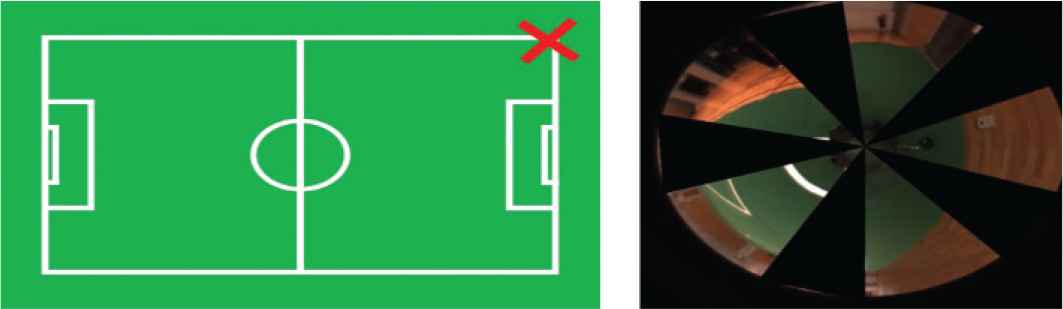

We conducted the experiments at the seven characteristic locations on the MSL field indicated in Figure 7. Taking the center of the panoramic image as the center of a circle, we treat a fan-shaped area with a central angle of 30° as noise, as shown in Figure 8. By changing the position and number of these noise areas, we can simulate different noise conditions and verify the self-localization results. Such fan-shaped noise is often used to verify methods that rely on omnidirectional cameras [10].
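The fan-shaped noise can be generated as a mask over the image. This sketch assumes a square image with the mirror center at the image center and uses the 30° central angle from the text; the function names and the angle convention (counter-clockwise from the +x axis) are hypothetical.

```python
import math


def sector_noise_mask(size, start_angles_deg, width_deg=30.0):
    """size x size 0/1 mask; 1 marks pixels hidden by a fan-shaped noise area.

    Each noise area is a sector centred on the image centre that starts at
    one of `start_angles_deg` (counter-clockwise from the +x axis) and spans
    `width_deg` degrees.
    """
    c = (size - 1) / 2.0
    mask = [[0] * size for _ in range(size)]
    for i in range(size):
        for j in range(size):
            # Polar angle of pixel (row i, column j); rows grow downwards.
            ang = math.degrees(math.atan2(c - i, j - c)) % 360.0
            if any((ang - s) % 360.0 < width_deg for s in start_angles_deg):
                mask[i][j] = 1
    return mask


def apply_noise(white, mask):
    """Erase white-line pixels that lie inside a noise sector."""
    return [[0 if m else w for w, m in zip(w_row, m_row)]
            for w_row, m_row in zip(white, mask)]
```

Passing twelve start angles spaced 30° apart covers the whole image, which is a quick sanity check on the angle arithmetic.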

Figure 7. Experiment locations.

Figure 8. Varying amounts of noise.

Figure 8 shows how the amount of noise changes at position D in Figure 7, and Figure 9 shows how the noise angle changes. The leftmost image in Figure 8 is the original image without any noise. At each location on the MSL field, we measured the self-localization error for various noise angles and numbers of noise areas. The results for each measurement position are summarized in Table 1. For the position deviation caused by noise at different angles, we took the average value combined with the deviation value caused by the amount of noise. Values in bold in the table indicate that the self-localization error exceeds the width of a robot (50 cm), meaning that the robot has lost its exact position.

Figure 9. Noise at different angles.

| Point | Noise num | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| A | Error [cm] | 28.3 | 28.3 | 28.3 | 28.3 | **150.3** | 22.4 | **72.8** | **1510.0** | 28.3 | **131.5** |
| | Rate | 0.00% | 0.00% | 0.00% | 0.00% | 8.33% | 0.00% | 8.33% | 66.67% | 0.00% | 16.67% |
| B | Error [cm] | 14.1 | 14.1 | 14.1 | 14.1 | 31.6 | 14.1 | 41.2 | 22.4 | **648.5** | **1164.8** |
| | Rate | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 50.00% | 100.00% |
| C | Error [cm] | 10.0 | 14.1 | 0.0 | 10.0 | 14.1 | 10.0 | 14.1 | 10.0 | 14.1 | 10.0 |
| | Rate | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% |
| D | Error [cm] | 20.0 | 14.1 | 10.0 | 20.0 | 20.0 | 10.0 | **1310.3** | **429.5** | **676.8** | **1207.8** |
| | Rate | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 8.33% | 66.67% | 75.00% | 100.00% |
| E | Error [cm] | 22.4 | 22.4 | 22.4 | **58.3** | **1650.5** | 22.4 | 22.4 | 31.6 | 31.6 | 31.6 |
| | Rate | 0.00% | 0.00% | 0.00% | 33.33% | 8.33% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% |
| F | Error [cm] | 30.0 | 30.0 | 41.2 | 14.1 | 20.0 | 40.0 | 20.0 | 40.0 | 41.2 | **370.1** |
| | Rate | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 0.00% | 66.67% |
| G | Error [cm] | 10.0 | 10.0 | 40.0 | **120.0** | 40.0 | 10.0 | **820.1** | **900.1** | **890.1** | **900.1** |
| | Rate | 0.00% | 0.00% | 0.00% | 33.33% | 0.00% | 0.00% | 33.33% | 66.67% | 75.00% | 66.67% |

Table 1. Comparison of detection error.
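The error evaluation with the 50 cm robot-width threshold can be expressed as a small helper. This is a hedged sketch: the text does not state exactly how trials at different noise angles were aggregated in Table 1, so the mean, maximum, and fraction of lost trials returned here are illustrative statistics, not a reconstruction of the table's rows. The names `localization_error` and `summarize_errors` are hypothetical.

```python
import math

ROBOT_WIDTH_CM = 50.0  # from the text: an error above this means the position is lost


def localization_error(true_xy, est_xy):
    """Euclidean distance [cm] between the true and the estimated position."""
    return math.hypot(est_xy[0] - true_xy[0], est_xy[1] - true_xy[1])


def summarize_errors(true_xy, estimates, threshold=ROBOT_WIDTH_CM):
    """Return (mean error, max error, fraction of trials with error > threshold)."""
    errors = [localization_error(true_xy, e) for e in estimates]
    lost = sum(err > threshold for err in errors)
    return sum(errors) / len(errors), max(errors), lost / len(errors)
```

For example, an estimate offset by (10, 10) cm has an error of about 14.1 cm, well under the threshold.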

3.2. Experiment Results

As shown in Table 1, the self-localization error increases as the amount of noise increases. In general, when the number of noise areas is greater than 7, large errors occur, except at special locations: where the image obtained by the robot contains more than two features (Locations B and F) and where the robot is in the center circle (Location C), shown in Figures 10 and 11, respectively. Locations E and G show errors even at low noise levels. This may be because the robot was on a vertical white line and the noise obscured all of the white line information, as shown in Figure 12. Overall, the results show that with three or fewer noise areas the self-localization error is very small, and with four noise areas the maximum self-localization error is 120 cm. In a real game environment, the probability of more than four noise areas existing at the same time is very low.

Figure 10. Eight noise patterns at position B.

Figure 11. Ten noise patterns at position C.

Figure 12. Five noise patterns at position E.

4. VERIFICATION OF ROBUSTNESS AGAINST MOVING SPEED

A GA search obtains the optimum of the fitness function by evolving successive generations until they converge on a fixed search space, such as that of a static image. When processing a dynamic image, however, the target position changes at every moment: the target position ϕp in the image becomes a function of time, ϕp(t). Real-time processing, that is, completing the computation within 33 ms per frame, is therefore essential for recognizing ϕp(t) without delay. In this research, the proposed method outputs, at every frame change, the target position given by the maximum value of the GA fitness function.
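The 33 ms budget follows from the camera's 30 fps frame rate (Section 2.1). As a worked example, assuming straight-line motion at the top speed tested in Section 4.2 (3 m/s), the frame period and the robot's displacement between consecutive frames are:

$$
\Delta t = \frac{1}{30\ \text{frames/s}} \approx 33.3\ \text{ms},
\qquad
\Delta d = v\,\Delta t = 3\ \text{m/s} \times \frac{1}{30}\ \text{s} = 0.1\ \text{m} = 10\ \text{cm}.
$$

Under this assumption, ϕp(t) moves by roughly 10 cm per frame, which is small relative to the field and suggests why individuals found in one frame can remain good starting points in the next.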

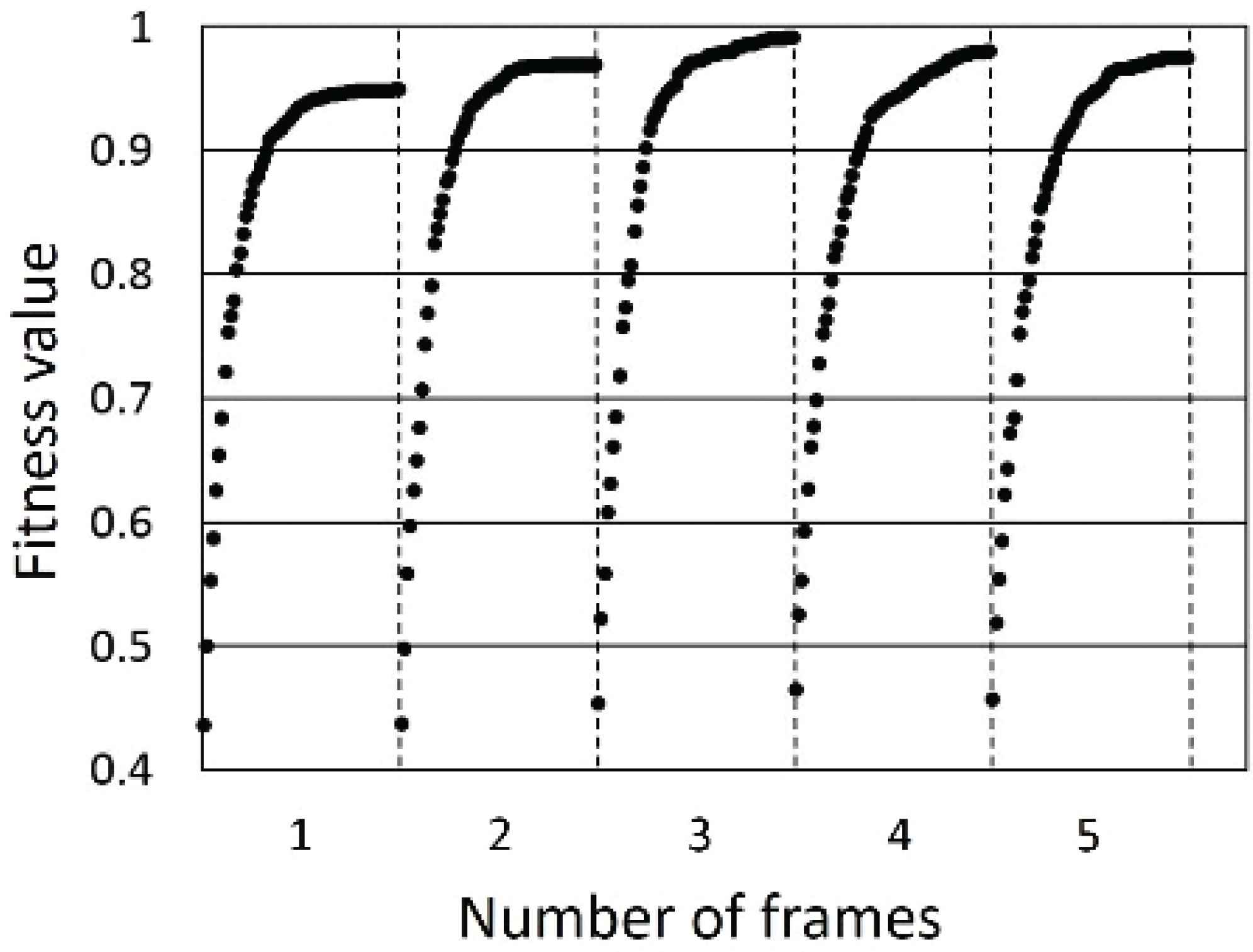

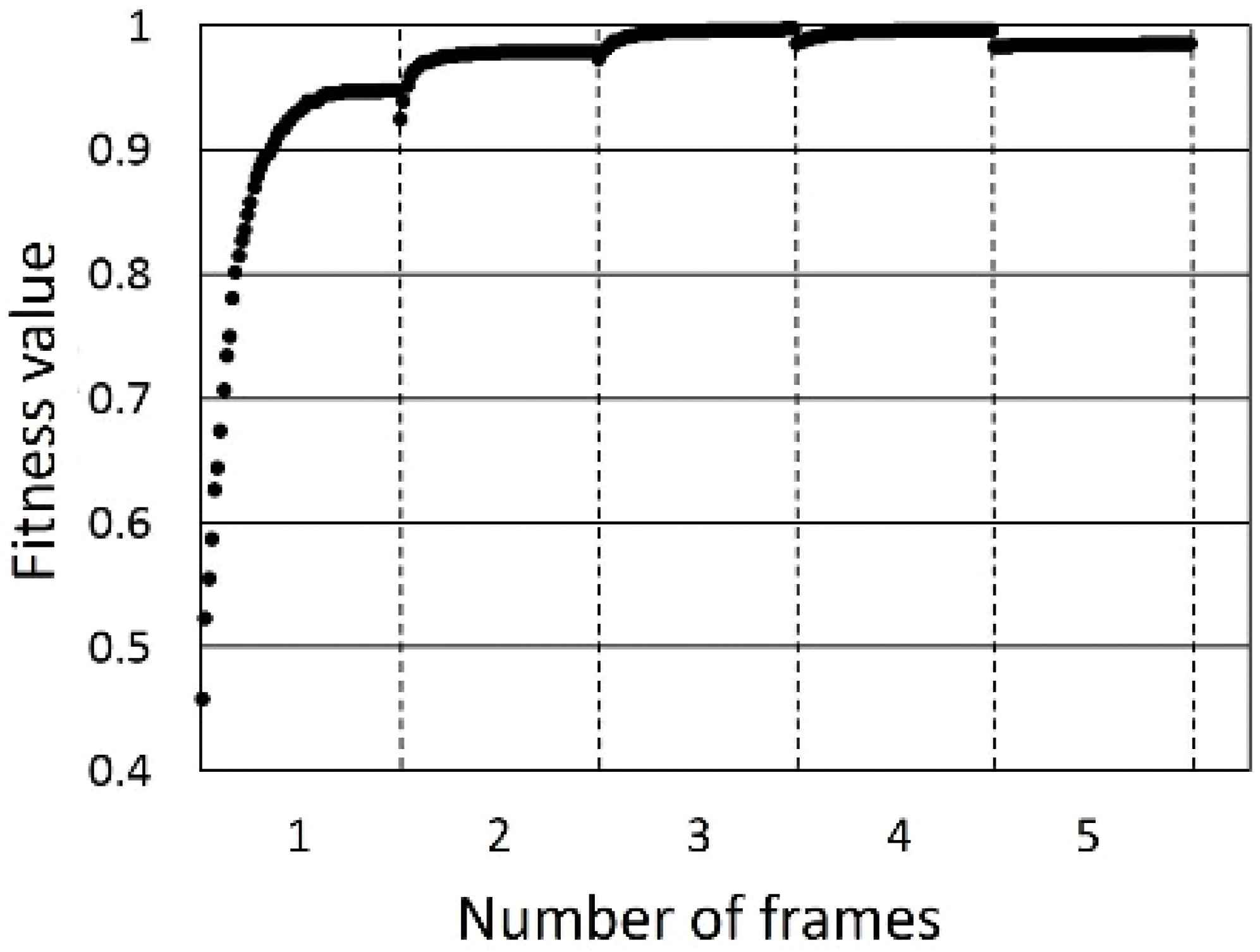

4.1. Inheritance of Genetic Information

Figure 13 shows an example of the fitness value output by a general GA search, where the vertical lines mark the switches from one frame to the next. The fitness value drops sharply at each switch because the best individual is reset at every frame change and the search restarts from a very low initial fitness value. The proposed method instead uses the genetic information of the previous frame as the initial genetic information of the next frame at every frame change. Figure 14 shows the resulting output: a high fitness value is maintained by inheriting the individual information.

Figure 13. Output of fitness value from general GA search.

Figure 14. Output of fitness value using proposed method.
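The contrast between Figures 13 and 14 can be reproduced qualitatively with a toy simulation. This is a hedged sketch, not the authors' GA: the fitness is a synthetic Gaussian peak at a target that moves 10 cm per frame, and the selection and mutation operators, population size, and generations per frame are all assumptions. `track(..., inherit=False)` restarts from random individuals at every frame, like the general GA search; `inherit=True` carries the previous frame's population over, as the proposed method does.

```python
import math
import random


def fitness(pose, target, width=30.0):
    """Toy fitness: Gaussian peak at the target's true position [cm]."""
    d2 = (pose[0] - target[0]) ** 2 + (pose[1] - target[1]) ** 2
    return math.exp(-d2 / (2 * width ** 2))


def evolve(population, target, rng, generations=5, sigma=10.0):
    """Run a few generations of truncation selection + Gaussian mutation."""
    for _ in range(generations):
        ranked = sorted(population, key=lambda p: fitness(p, target), reverse=True)
        parents = ranked[: len(ranked) // 4]  # the best quarter survives
        children = []
        for _ in range(len(ranked) - len(parents)):
            px, py = rng.choice(parents)
            children.append((px + rng.gauss(0.0, sigma), py + rng.gauss(0.0, sigma)))
        population = parents + children
    return population


def track(targets, inherit, pop_size=40, seed=1):
    """Best fitness at the first generation of every frame.

    inherit=False restarts from random individuals at each frame (general
    GA search); inherit=True reuses the previous frame's population.
    """
    rng = random.Random(seed)

    def random_population():
        return [(rng.uniform(0, 1800), rng.uniform(0, 1200)) for _ in range(pop_size)]

    population = random_population()
    first_gen_best = []
    for target in targets:
        if not inherit:
            population = random_population()
        first_gen_best.append(max(fitness(p, target) for p in population))
        population = evolve(population, target, rng)
    return first_gen_best
```

With these settings, restarting from random individuals typically leaves the best first-generation fitness near zero, whereas inheriting the population keeps it close to the fitness reached in the previous frame.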

4.2. Experiment Results

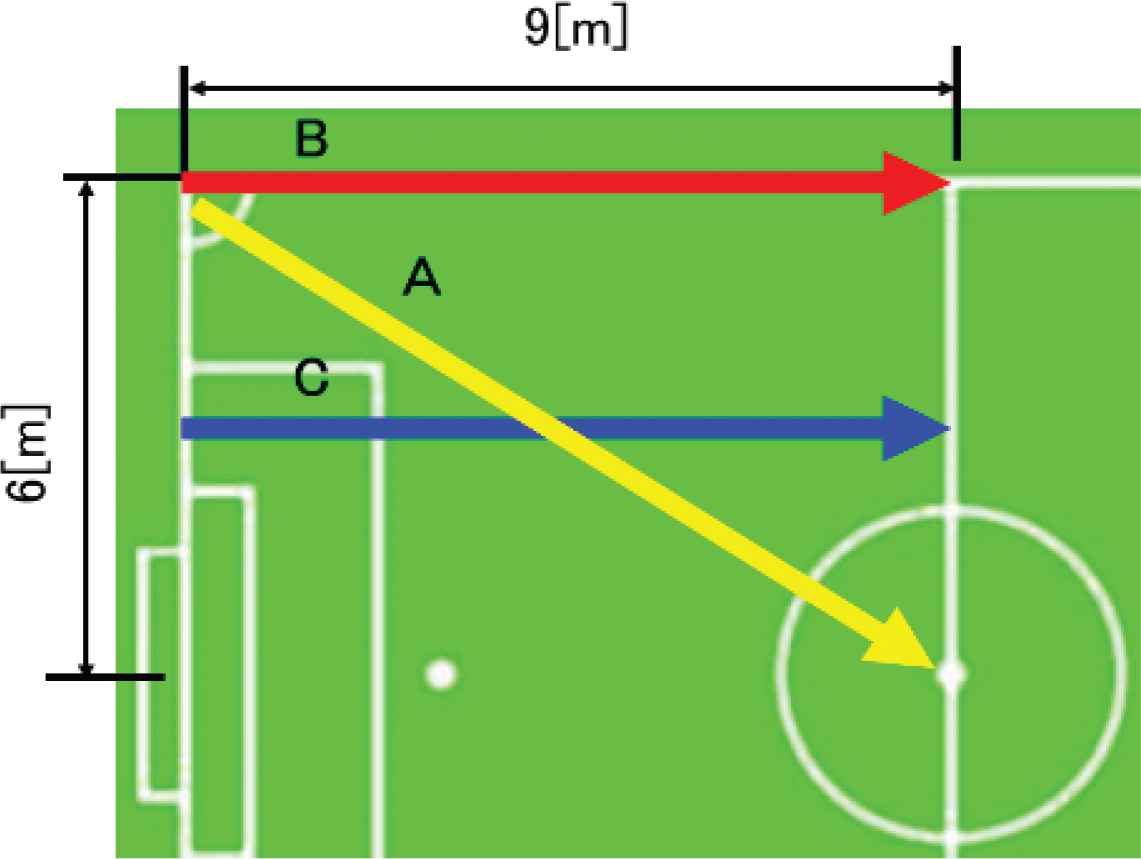

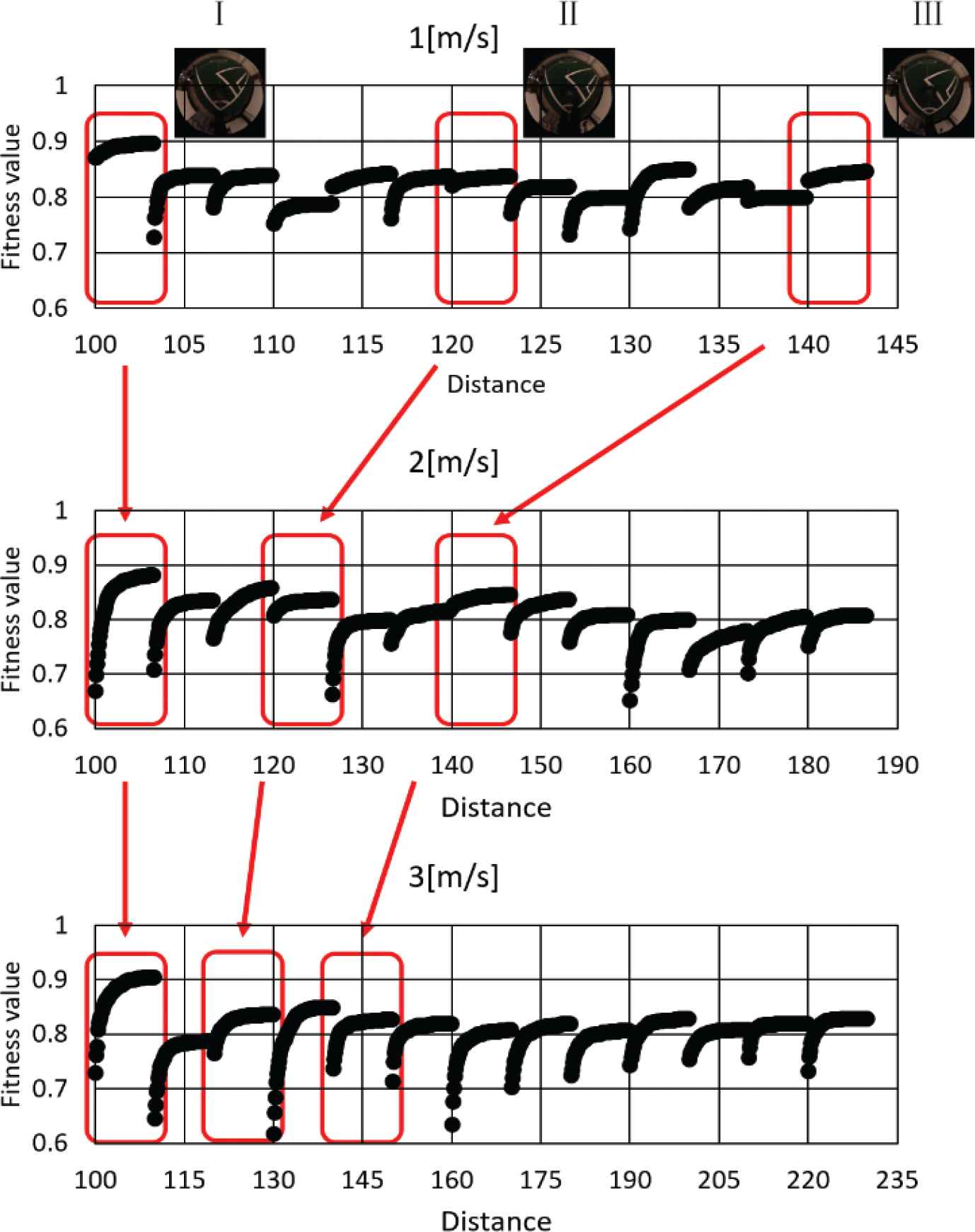

To verify the method’s robustness to moving speed, we conducted experiments in which we measured the fitness value and the self-localization accuracy at different robot speeds. The robot moved forward on test paths A–C, shown in Figure 15, at speeds of 1–3 m/s.

Figure 15. Test paths A–C.

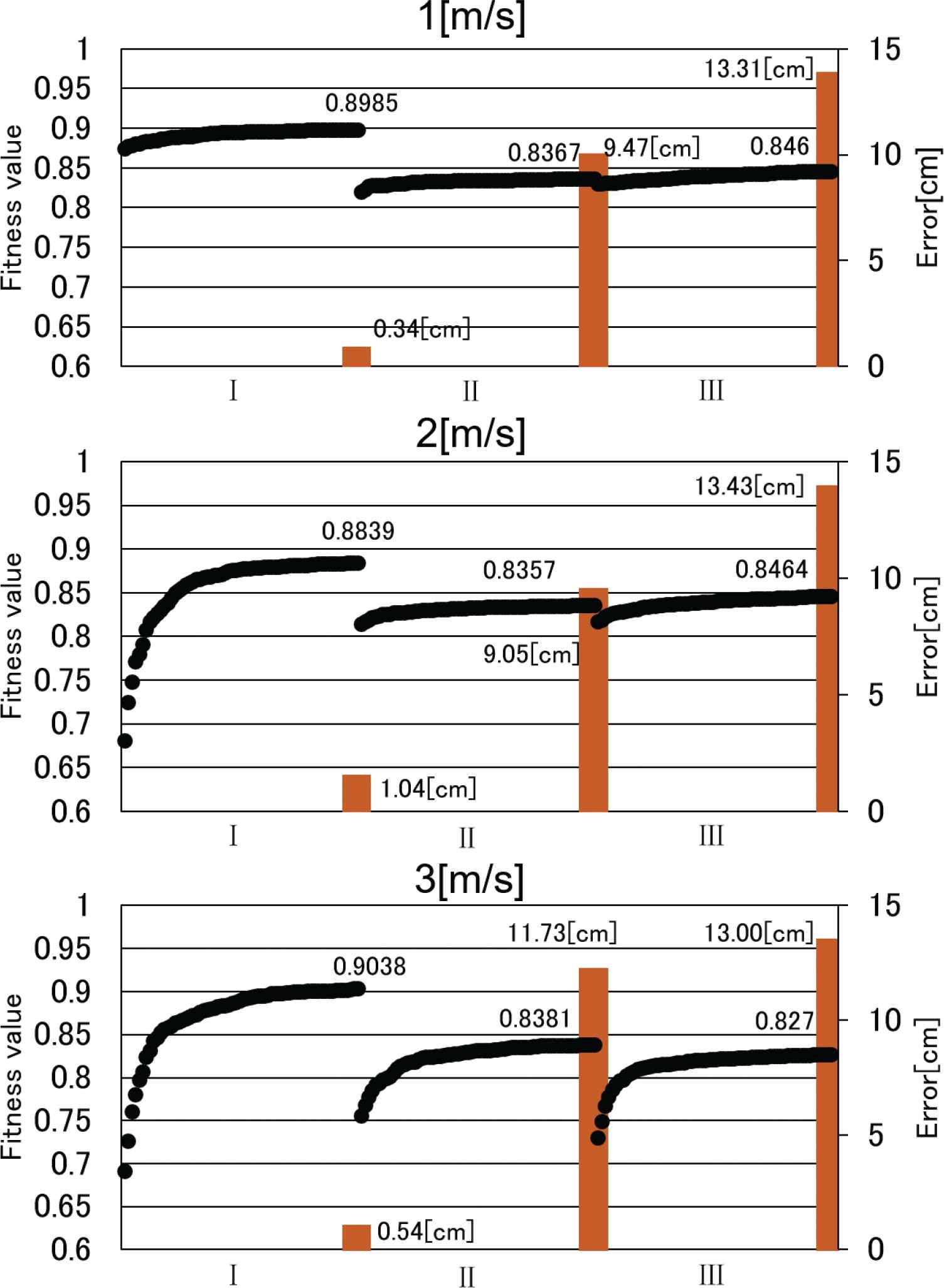

The results for test path A are shown in Figures 16 and 17. In Figure 16, the vertical axis represents the fitness value and the horizontal axis represents the distance from the starting point. To examine the relationship between accuracy and moving speed, we selected the three areas enclosed by rectangles in Figure 16 and rearranged them in Figure 17, where I–III denote the respective positions and frames at each speed.

Figure 16. Results of fitness value at each moving speed (test path A).

Figure 17. Self-localization error at each moving speed (test path A).

The position error is shown as a bar graph. As shown in Figure 17, the self-localization error does not change greatly with moving speed. The maximum error is about 13 cm, which is small compared with the size of the MSL field. Test paths B and C yielded results similar to those of test path A, with maximum errors of 9.9 cm and 10.12 cm, respectively. This indicates that the proposed method is robust against changes in the robot’s moving speed within 1–3 m/s.

5. CONCLUSION

We proposed a self-localization method that generates the search space by model-based matching with the white line information of the RoboCup MSL soccer field. The method recognizes the robot position by optimizing the fitness function using a genetic algorithm. We verified the effectiveness and accuracy of the proposed GA-based self-localization method in an actual environment. Furthermore, we experimentally evaluated the robustness of the proposed method against noise and moving speed and confirmed that it is sufficient for RoboCup soccer games.

CONFLICTS OF INTEREST

The authors declare they have no conflicts of interest.

AUTHORS INTRODUCTION

Mr. Yuehang Ma

He received his B.S. and M.S. degrees in Engineering in 2018 and 2020, respectively, from the Faculty of Engineering, Tokyo Polytechnic University, Japan. He is currently pursuing a PhD at Tokyo Polytechnic University.

Dr. Hitoshi Kono

He received his B.S., M.S. and PhD degrees from Tokyo Denki University, Japan, in 2008, 2010 and 2015, respectively. He worked at Fujitsu Limited from 2010 to 2011. In 2015, he joined the Department of Precision Engineering, The University of Tokyo, as a Project Researcher. Since 2017, he has been an Assistant Professor in the Department of Electronics and Mechatronics, Tokyo Polytechnic University. His research interests include disaster response robotics, transfer reinforcement learning and its applications. He is a member of RSJ, JSAI, and IEEE R&A.

Ms. Kaori Watanabe

She received her Master’s degree from the Department of System Electronics and Information Science, Tokyo Polytechnic University, Japan, in 2013. She is currently a doctoral student at Tokyo Polytechnic University, Japan.

Dr. Hidekazu Suzuki

He is an Associate Professor in the Faculty of Engineering at Tokyo Polytechnic University, Japan. He graduated from the Department of Mechanical Engineering, Fukui University, in 2000, and received his D.Eng. degree in System Design Engineering from Fukui University in 2005. His research interest is robotics.

REFERENCES

Cite this article

Ma Y, Watanabe K, Kono H, Suzuki H. Verification of Robustness against Noise and Moving Speed in Self-localization Method for Soccer Robot. Journal of Robotics, Networking and Artificial Life. 2021;8(1):66–71 (published 2021/06/06). ISSN 2352-6386. https://doi.org/10.2991/jrnal.k.210521.015