Development of Automation Recognition of Hazmat Marking Chart for Rescue Robot

- DOI

- 10.2991/jrnal.k.190220.002

- Keywords

- Hazmat marking chart; SURF; FLANN; rescue robot

- Abstract

The Invigorating Robot Activity Project (iRAP), whose teams include iRAP_PRO, iRAP_FURIOUS, iRAP_JUNIOR, and iRAP_ROBOT, has a long history of first-place awards in the World RoboCup Rescue Robot competitions. In this paper, we introduce and explain an autonomous system that enables our rescue robots to detect and recognize hazardous material (Hazmat) marking charts. Every Hazmat tag is described with Speeded-Up Robust Features (SURF), and the resulting features are matched against templates using the Fast Library for Approximate Nearest Neighbors (FLANN). Finally, the paper presents experimental results in real situations that confirm the effectiveness of the robot's pattern recognition.

- Copyright

- © 2019 The Authors. Published by Atlantis Press SARL.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

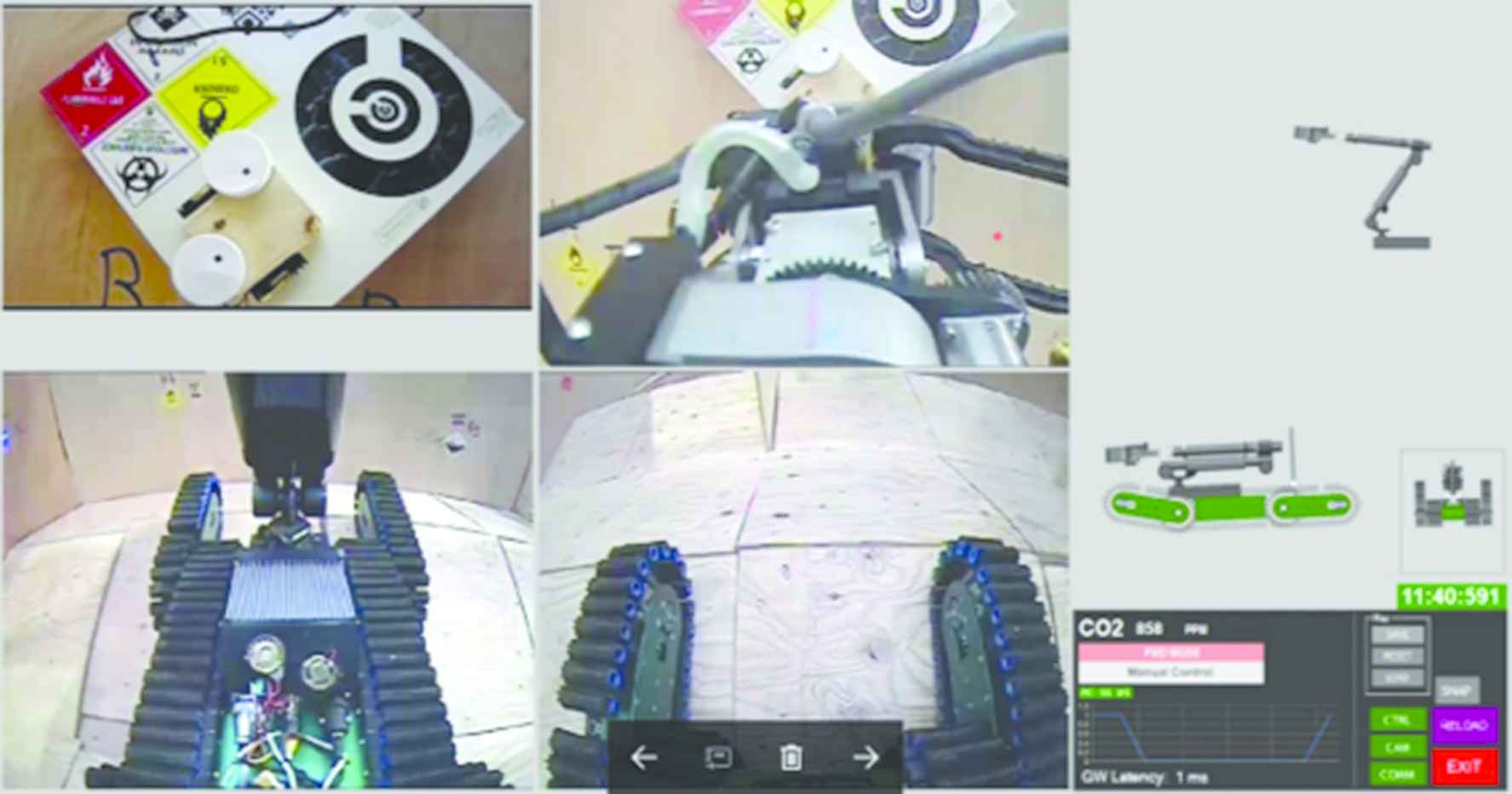

Invigorating Robot Activity Project (iRAP) is the robotics club of students from King Mongkut’s University of Technology North Bangkok, Thailand. Every year, we participate in the World RoboCup Rescue Robot competitions, and our teams have won first place many times [1]. Our rescue robot consists of three major parts: the mechanical system, the electrical system, and the software system. A distinctive feature of iRAP_ROBOT is its human–robot interface (the operator console), which displays extensive sensor and visual data in real time (CO2 sensor, visual temperature sensor, laser scanner, IMU sensor, and four cameras), as shown in Figure 1. Moreover, the robot is designed to achieve proficiency in the agility and performance tests.

Figure 1. Operator console of iRAP_ROBOT.

2. STRUCTURE OF iRAP_ROBOT

The iRAP rescue robot is built on an original rescue-robot platform with two pairs of flippers (one in the front and one in the rear), as shown in Figure 2. In addition, a 6-DOF robotic arm (one prismatic joint and five revolute joints) is mounted on the robot's body to search for victims and observe the surrounding area. Multiple sensors are installed on the end-effector of the robotic arm, and the camera on the arm is also used to recognize Hazmat charts autonomously.

Figure 2. iRAP rescue robot.

3. AUTOMATIC RECOGNITION OF HAZMAT CHART

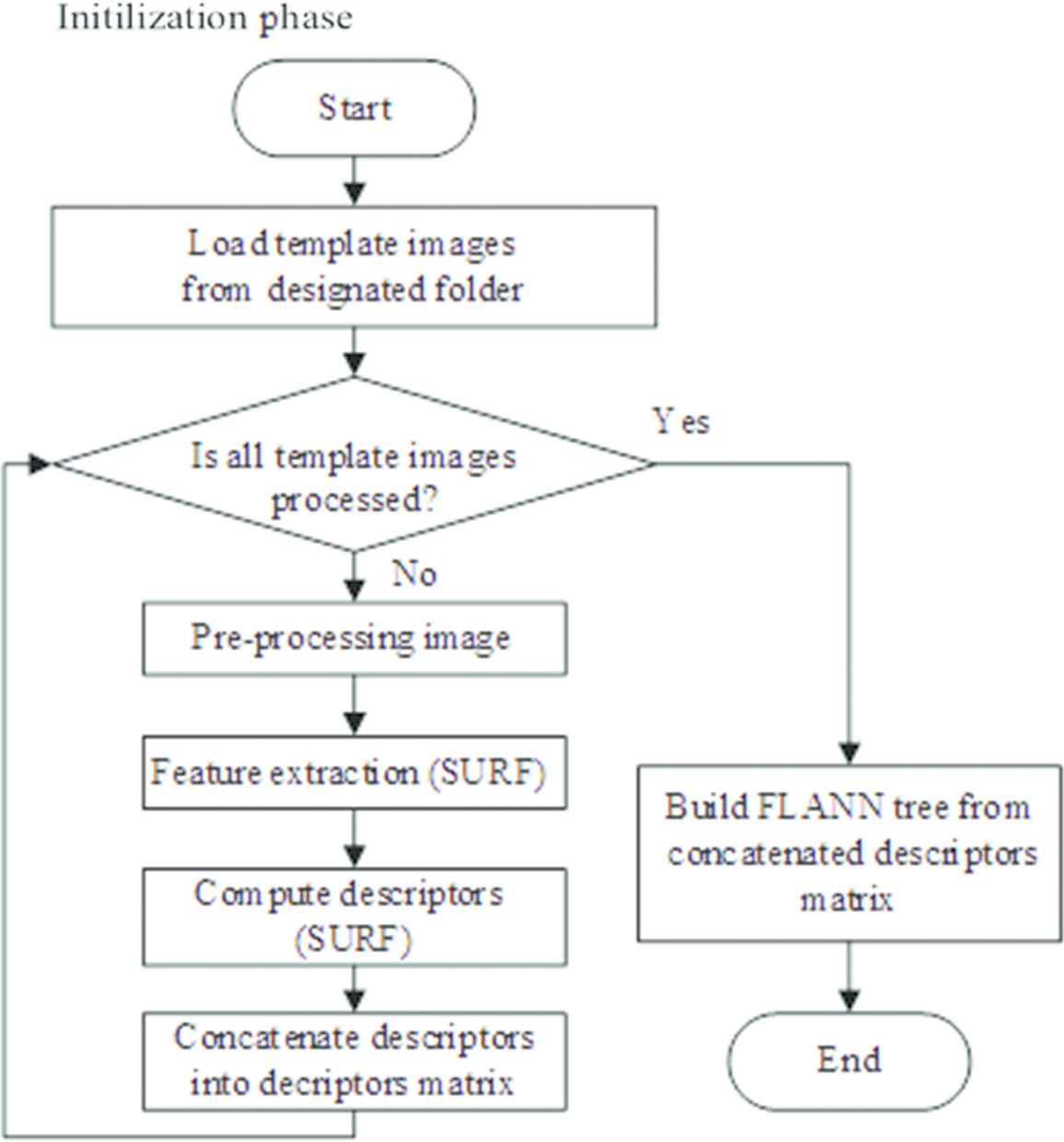

The proposed method uses Speeded-Up Robust Features (SURF) [2] to extract features from the image and the Fast Library for Approximate Nearest Neighbors (FLANN) [3–5] to match the input image with the Hazmat chart templates. The system then uses blob detection [6] to mark the position of each Hazmat chart. The overall system can be divided into two phases: the initialization phase (Figure 3) and the real-time processing phase.

Figure 3. Initialization phase of the proposed method.

3.1. Initialization Phase

3.1.1. Load all template images

The first step of the initialization phase is to load all the Hazmat chart templates. Twelve templates are used to train the proposed method, as displayed in Figure 4.

Figure 4. Hazmat chart templates.

3.1.2. Pre-processing image

Pre-processing is a common step that improves or adjusts properties of the image, such as scale, color, edges, and area, before features are extracted.
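The paper does not list the exact pre-processing operations, so the following is only a minimal sketch under assumed choices: conversion to grayscale (SURF works on intensity), rescaling, and contrast normalization. It uses plain Python lists instead of Emgu CV images, and all function names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Gray = List[List[float]]


def to_gray(rgb: List[List[Pixel]]) -> Gray:
    """Convert rows of (R, G, B) tuples to intensity using ITU-R BT.601 luma weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def resize_nearest(img: Gray, new_w: int, new_h: int) -> Gray:
    """Rescale a grayscale image with nearest-neighbor sampling."""
    old_h, old_w = len(img), len(img[0])
    return [
        [img[y * old_h // new_h][x * old_w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]


def normalize(img: Gray) -> Gray:
    """Stretch intensities linearly to [0, 1] (simple contrast normalization)."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    span = (hi - lo) or 1.0  # avoid division by zero on a flat image
    return [[(v - lo) / span for v in row] for row in img]
```

For example, `normalize(resize_nearest(to_gray(rgb), 320, 240))` would yield a 320 × 240 intensity image in [0, 1]; the target size is illustrative only.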

3.1.3. Feature extraction

The SURF algorithm is applied for feature extraction. It consists of a detector based on the Hessian matrix and a descriptor based on Haar wavelets. The determinant of the Hessian matrix in Equation (1) expresses the local change around a point, where H(x, σ) is the Hessian matrix given in Equation (2), and Dxx(x, σ) and Dxy(x, σ) in Equations (3) and (4) approximate the convolution of the image with the second-order derivatives of the Gaussian function; these approximations are box filters evaluated on the integral image.
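For reference, the standard SURF detector can be written with the symbols used above as follows, where I_Σ is the integral image, L_xx is the exact Gaussian second-derivative response (L_xy and L_yy are defined analogously), and D_xx, D_yy, D_xy are their box-filter approximations. Whether Equations (1)–(4) take exactly this form is an assumption; the weight 0.9 is the value proposed in the original SURF formulation.

$$
\begin{aligned}
I_{\Sigma}(\mathbf{x}) &= \sum_{i=0}^{x} \sum_{j=0}^{y} I(i, j) \\
H(\mathbf{x}, \sigma) &= \begin{bmatrix} L_{xx}(\mathbf{x}, \sigma) & L_{xy}(\mathbf{x}, \sigma) \\ L_{xy}(\mathbf{x}, \sigma) & L_{yy}(\mathbf{x}, \sigma) \end{bmatrix},
\qquad L_{xx}(\mathbf{x}, \sigma) = \frac{\partial^{2} g(\sigma)}{\partial x^{2}} * I(\mathbf{x}) \\
\det\!\left(H_{\text{approx}}\right) &= D_{xx}(\mathbf{x}, \sigma)\, D_{yy}(\mathbf{x}, \sigma) - \bigl(0.9\, D_{xy}(\mathbf{x}, \sigma)\bigr)^{2}
\end{aligned}
$$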

After the response map has been created, non-maximum suppression is applied to the determinants of the Hessian matrix to find the interest points, that is, the local maxima that SURF detection aims to locate.
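The detection step can be illustrated with a minimal single-scale sketch in plain Python: it builds an integral image, evaluates the 9 × 9 SURF box-filter approximations of Dxx, Dyy, and Dxy, and keeps pixels whose Hessian determinant exceeds a threshold and is strictly greater than its eight neighbors. Full SURF also searches across scales (a 3 × 3 × 3 neighborhood) and interpolates the maxima; those steps are omitted, and the function names and threshold are hypothetical rather than taken from the authors' C# implementation.

```python
from typing import List, Tuple

Image = List[List[float]]


def integral_image(img: Image) -> Image:
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded)."""
    h, w = len(img), len(img[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii: Image, x0: int, y0: int, x1: int, y1: int) -> float:
    """Sum over the inclusive rectangle [x0, x1] x [y0, y1] in O(1)."""
    return ii[y1 + 1][x1 + 1] - ii[y0][x1 + 1] - ii[y1 + 1][x0] + ii[y0][x0]


def hessian_det_9x9(ii: Image, cx: int, cy: int) -> float:
    """Approximate det(H) at (cx, cy) with the 9x9 SURF box filters."""
    # Dyy: bands of 5 columns x 3 rows, weighted +1 / -2 / +1 from top to bottom.
    dyy = (box_sum(ii, cx - 2, cy - 4, cx + 2, cy - 2)
           - 2 * box_sum(ii, cx - 2, cy - 1, cx + 2, cy + 1)
           + box_sum(ii, cx - 2, cy + 2, cx + 2, cy + 4))
    # Dxx: the same filter rotated by 90 degrees.
    dxx = (box_sum(ii, cx - 4, cy - 2, cx - 2, cy + 2)
           - 2 * box_sum(ii, cx - 1, cy - 2, cx + 1, cy + 2)
           + box_sum(ii, cx + 2, cy - 2, cx + 4, cy + 2))
    # Dxy: four 3x3 squares, +1 top-left/bottom-right, -1 top-right/bottom-left.
    dxy = (box_sum(ii, cx - 3, cy - 3, cx - 1, cy - 1)
           + box_sum(ii, cx + 1, cy + 1, cx + 3, cy + 3)
           - box_sum(ii, cx + 1, cy - 3, cx + 3, cy - 1)
           - box_sum(ii, cx - 3, cy + 1, cx - 1, cy + 3))
    area = 81.0  # normalize by filter area so responses are comparable across sizes
    dxx, dyy, dxy = dxx / area, dyy / area, dxy / area
    # The 0.9 weight balances the determinant for the box-filter approximation.
    return dxx * dyy - (0.9 * dxy) ** 2


def response_map(img: Image) -> Image:
    """det(H) at every pixel at least 4 px from the border (0 elsewhere)."""
    h, w = len(img), len(img[0])
    ii = integral_image(img)
    resp = [[0.0] * w for _ in range(h)]
    for y in range(4, h - 4):
        for x in range(4, w - 4):
            resp[y][x] = hessian_det_9x9(ii, x, y)
    return resp


def non_max_suppression(resp: Image, threshold: float) -> List[Tuple[int, int]]:
    """(x, y) positions above `threshold` that are strictly greater than all 8 neighbors."""
    h, w = len(resp), len(resp[0])
    keypoints: List[Tuple[int, int]] = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            v = resp[y][x]
            if v <= threshold:
                continue
            neighbors = (resp[y + dy][x + dx]
                         for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                         if (dx, dy) != (0, 0))
            if all(v > n for n in neighbors):
                keypoints.append((x, y))
    return keypoints
```

A bright square blob produces a positive determinant (Dxx and Dyy are both negative at its center), while a flat region produces zero everywhere, which is why thresholding the determinant isolates blob-like interest points.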

3.1.4. Compute descriptors

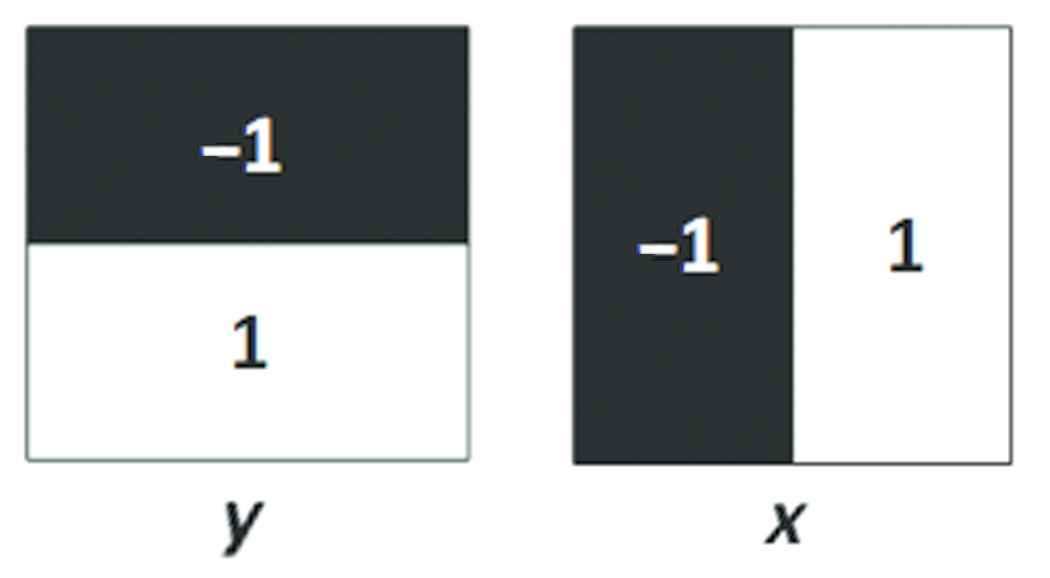

At each interest point, we need a distinctive description of the feature that does not depend on scale or rotation. The SURF descriptor is based on Haar wavelet responses, which can be calculated efficiently with integral images. The wavelet responses in the x- and y-directions are denoted dx and dy, respectively, as shown in Figure 5. For each sub-region around the interest point, the descriptor vector is calculated using Equation (5).
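The following sketch illustrates the descriptor computation under simplifying assumptions: it builds the upright (U-SURF-style) variant, so the orientation assignment that gives SURF its rotation invariance is omitted, as is the Gaussian weighting of the responses. We assume Equation (5) is the standard SURF sub-region vector v = (Σdx, Σdy, Σ|dx|, Σ|dy|); with 4 × 4 sub-regions this gives a 64-dimensional descriptor. The sign convention of the Haar responses and all function names are hypothetical.

```python
import math
from typing import List

Image = List[List[float]]


def integral_image(img: Image) -> Image:
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded)."""
    h, w = len(img), len(img[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii: Image, x0: int, y0: int, x1: int, y1: int) -> float:
    """Sum over the inclusive rectangle [x0, x1] x [y0, y1] in O(1)."""
    return ii[y1 + 1][x1 + 1] - ii[y0][x1 + 1] - ii[y1 + 1][x0] + ii[y0][x0]


def haar_x(ii: Image, x: int, y: int, s: int) -> float:
    """dx: right half minus left half of the 2s x 2s window centred at (x, y)."""
    return (box_sum(ii, x, y - s, x + s - 1, y + s - 1)
            - box_sum(ii, x - s, y - s, x - 1, y + s - 1))


def haar_y(ii: Image, x: int, y: int, s: int) -> float:
    """dy: bottom half minus top half of the 2s x 2s window centred at (x, y)."""
    return (box_sum(ii, x - s, y, x + s - 1, y + s - 1)
            - box_sum(ii, x - s, y - s, x + s - 1, y - 1))


def subregion_vector(dxs: List[float], dys: List[float]) -> List[float]:
    """Per-sub-region vector v = (sum dx, sum dy, sum |dx|, sum |dy|)."""
    return [sum(dxs), sum(dys), sum(abs(d) for d in dxs), sum(abs(d) for d in dys)]


def upright_descriptor(ii: Image, cx: int, cy: int, s: int = 1) -> List[float]:
    """64-D descriptor: a 20s x 20s window split into 4 x 4 sub-regions,
    each sampled on a 5 x 5 grid. Requires 11*s <= cx <= width - 10*s
    (and likewise for cy) so every Haar window stays inside the image."""
    desc: List[float] = []
    for j in range(4):              # sub-region row
        for i in range(4):          # sub-region column
            dxs, dys = [], []
            for v in range(5):
                for u in range(5):
                    x = cx + (5 * i + u - 10) * s
                    y = cy + (5 * j + v - 10) * s
                    dxs.append(haar_x(ii, x, y, s))
                    dys.append(haar_y(ii, x, y, s))
            desc.extend(subregion_vector(dxs, dys))
    # Scaling to unit length makes the descriptor invariant to contrast changes.
    norm = math.sqrt(sum(d * d for d in desc)) or 1.0
    return [d / norm for d in desc]
```

Keeping both the signed sums and the sums of absolute values lets the descriptor distinguish, for instance, a single step edge from a texture whose positive and negative gradients cancel out.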

Figure 5. Haar wavelet responses. Black and white areas show the weights −1 and +1, respectively, of the Haar kernels.

3.1.5. Concatenate descriptors

In this sub-process, the descriptors computed from all the templates are combined into a single set of sample features. As a result, we obtain the sample feature descriptors of all 12 templates, which are then matched against the input image.
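One plausible reading of this step, sketched below as an assumption, is the usual multi-template arrangement: all template descriptors are stacked into one matrix, and a parallel list records which template each row came from, so that a single nearest-neighbor index (FLANN in the paper) can be built once and searched for every frame. The class labels and the function name are hypothetical.

```python
from typing import Dict, List, Tuple

Descriptor = List[float]


def concatenate_templates(
    templates: Dict[str, List[Descriptor]],
) -> Tuple[List[Descriptor], List[str]]:
    """Stack every template's descriptors into one matrix.

    Returns (matrix, owner), where owner[i] is the Hazmat class whose
    template produced matrix[i].
    """
    matrix: List[Descriptor] = []
    owner: List[str] = []
    for label, descriptors in templates.items():
        matrix.extend(descriptors)
        # One label per descriptor row, so a matched row maps straight to a class.
        owner.extend([label] * len(descriptors))
    return matrix, owner
```

With 12 templates, `owner` would contain 12 distinct labels, and the class of any matched row is simply `owner[row]`.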

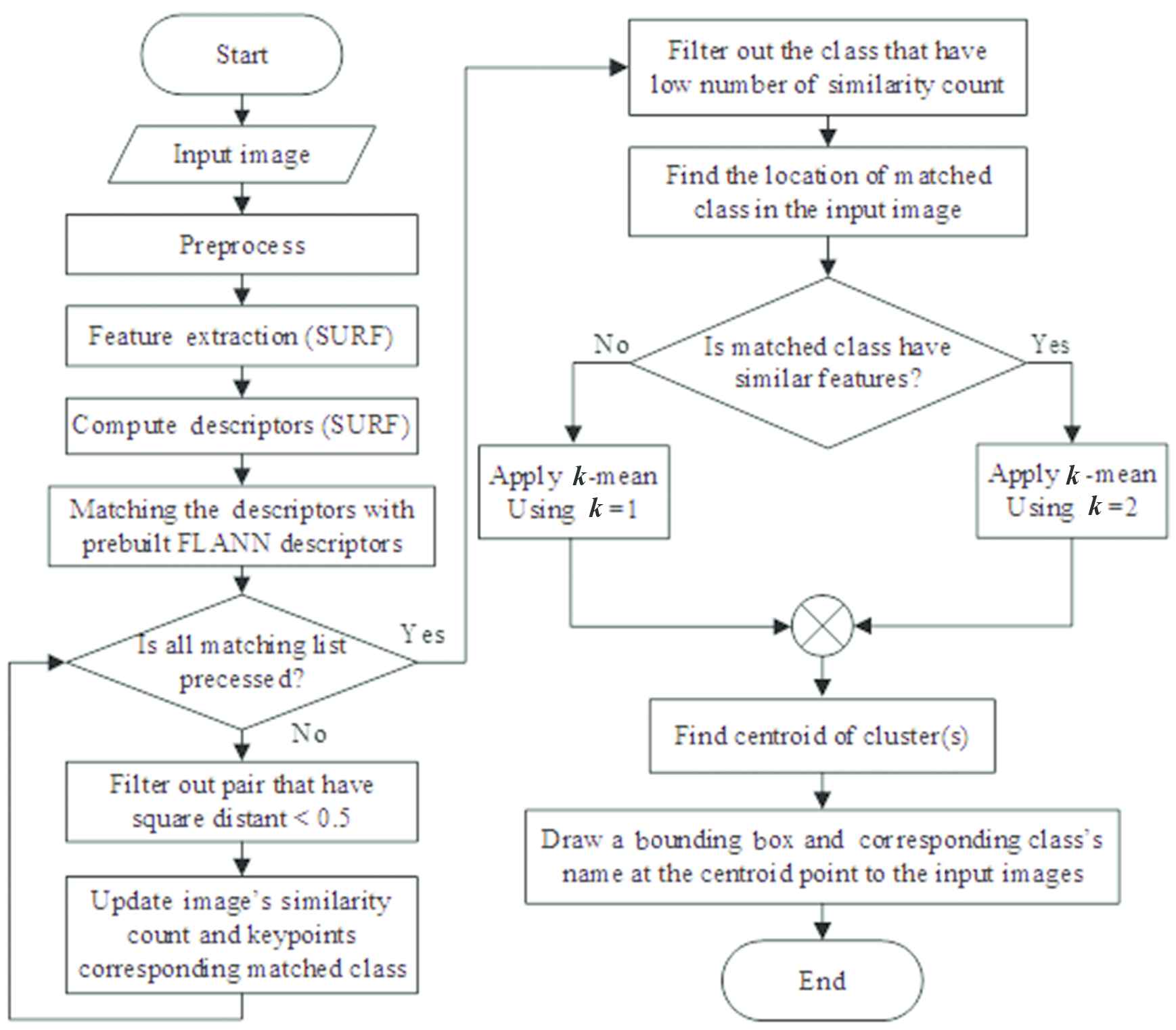

3.2. Real-time Processing Phase

The real-time processing phase (Figure 6) is similar to the initialization phase. First, the acquired image is fed into the main process. SURF is used to extract the interest points and compute their descriptors. The descriptors of the input image are then compared with those of all sample images, and a similarity score based on the matched keypoints is calculated for each class. The input image is assigned to the winner class, that is, the class with the maximum similarity score. Finally, the position of the Hazmat chart in the frame is determined by calculating the centroid of the region of interest.
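The matching and scoring steps can be sketched as follows. For brevity, an exact brute-force search stands in for FLANN, which returns approximately the same neighbors much faster; matches are filtered with Lowe's ratio test, and the similarity score of a class is assumed to be its number of accepted matches. The paper does not specify its scoring rule or matching parameters, so these choices, the 0.7 ratio, and the function names are hypothetical. The centroid of the winner's matched keypoints gives the chart position.

```python
import math
from collections import Counter
from typing import Dict, List, Optional, Sequence, Tuple

Descriptor = Sequence[float]
Point = Tuple[float, float]


def two_nearest(query: Descriptor, matrix: List[Descriptor]) -> Tuple[Tuple[float, int], Tuple[float, int]]:
    """Exact search for the two nearest template rows as (distance, row index)."""
    best: Tuple[float, int] = (math.inf, -1)
    second: Tuple[float, int] = (math.inf, -1)
    for idx, row in enumerate(matrix):
        d = math.dist(query, row)
        if d < best[0]:
            best, second = (d, idx), best
        elif d < second[0]:
            second = (d, idx)
    return best, second


def classify(
    query_desc: List[Descriptor],
    query_pts: List[Point],
    matrix: List[Descriptor],
    owner: List[str],
    ratio: float = 0.7,
) -> Tuple[Optional[str], Dict[str, int], Optional[Point]]:
    """Return the winner class, per-class scores, and the winner's keypoint centroid."""
    votes: Counter = Counter()
    matched: Dict[str, List[Point]] = {}
    for desc, pt in zip(query_desc, query_pts):
        (d1, i1), (d2, _) = two_nearest(desc, matrix)
        # Ratio test: keep a match only if it is clearly better than the runner-up.
        if i1 >= 0 and d1 < ratio * d2:
            label = owner[i1]
            votes[label] += 1
            matched.setdefault(label, []).append(pt)
    if not votes:
        return None, {}, None
    winner, _ = votes.most_common(1)[0]
    pts = matched[winner]
    centroid = (sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts))
    return winner, dict(votes), centroid
```

Here `matrix` and `owner` are assumed to come from a concatenation of the template descriptors, while the query descriptors and keypoint coordinates would come from running SURF on the current frame.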

Figure 6. Real-time processing phase.

4. EXPERIMENTAL RESULTS

The experiments were implemented in C# using Visual Studio and the open-source Emgu CV library (a .NET wrapper for OpenCV).

4.1. Verification I

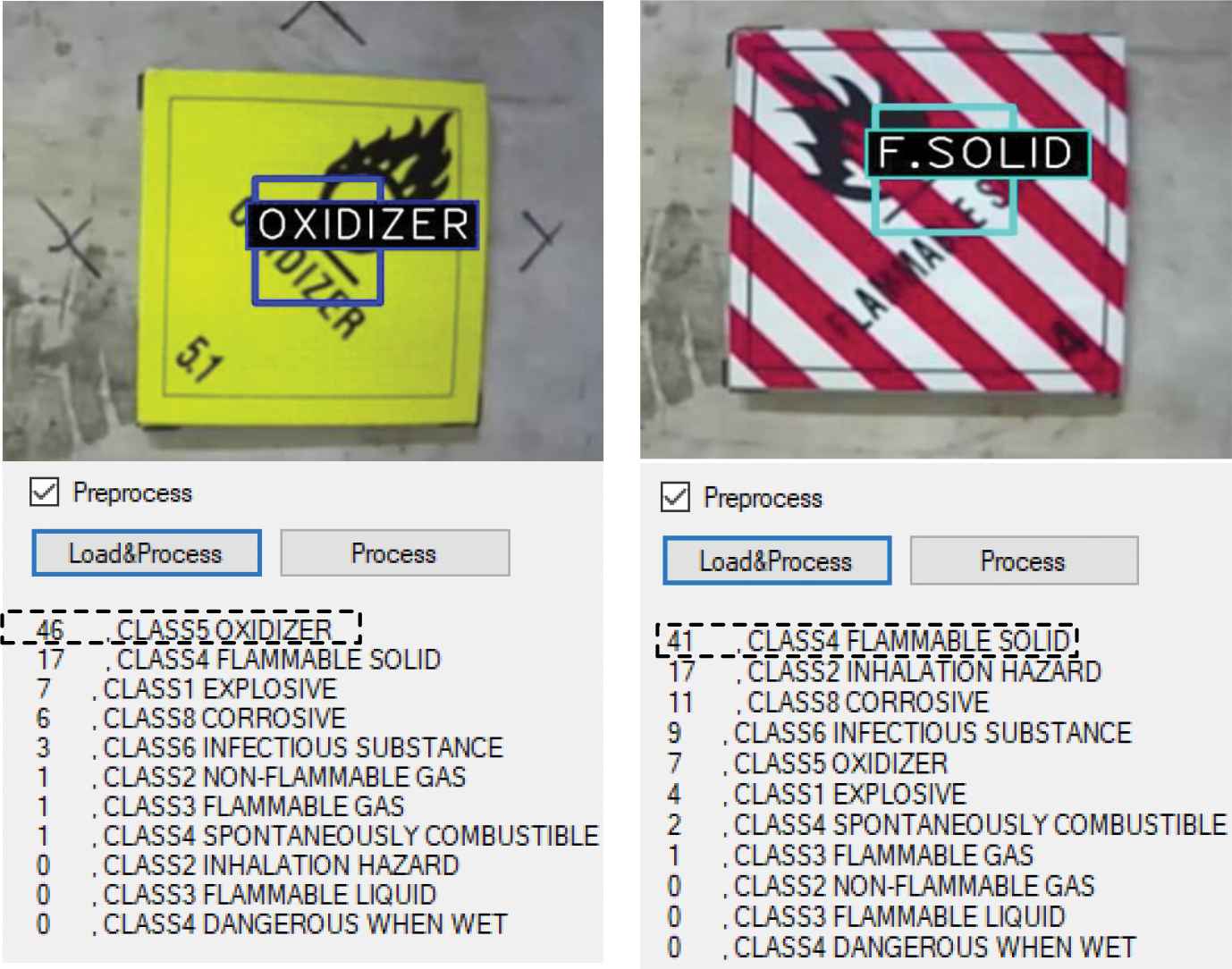

In this case, there is only one Hazmat chart in the frame. The results show that the proposed method can recognize and detect the chart autonomously, independent of the size and rotation of the input image. In Figures 7a and 7b, the system selects the class with the highest similarity score as the answer.

Figure 7. Matching a single Hazmat chart. (a) Oxidizer Hazmat chart. (b) Flammable solid Hazmat chart.

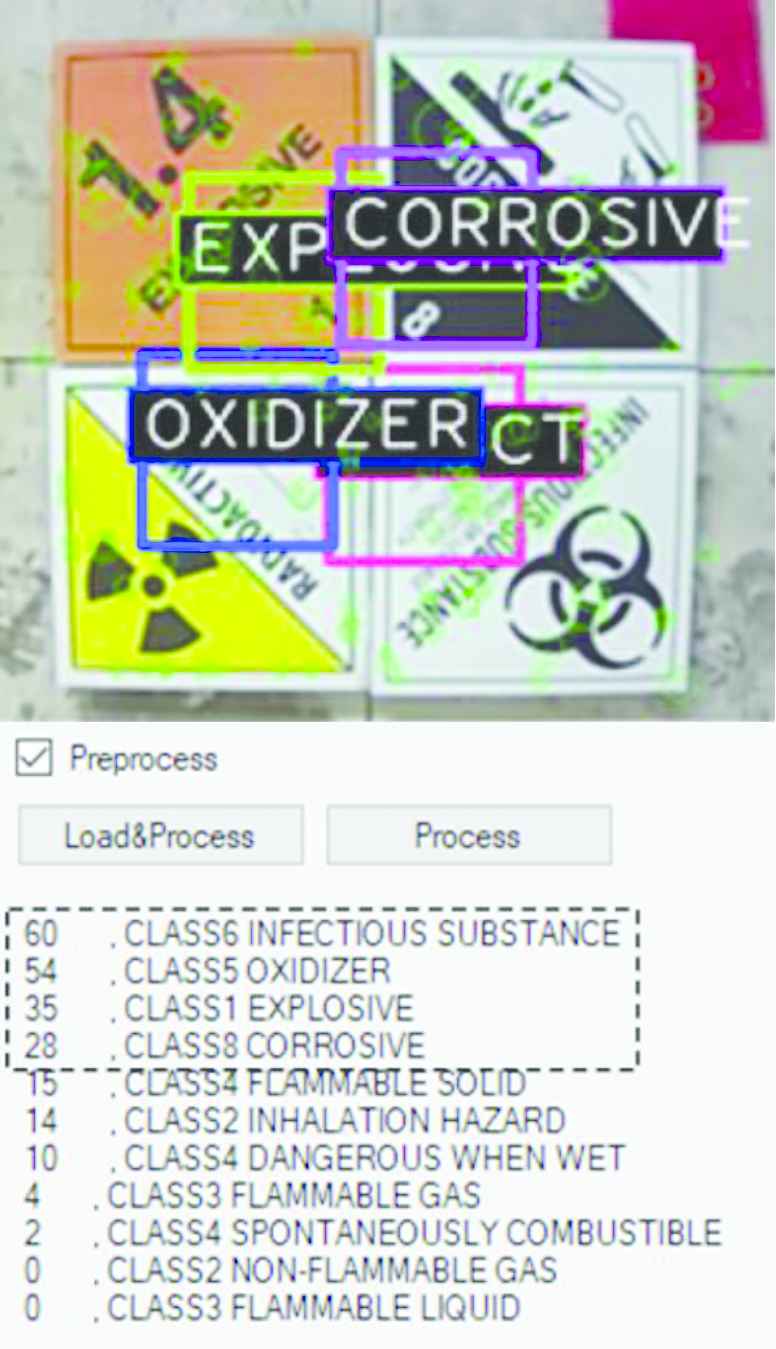

4.2. Verification II

We further tested the proposed method with an input image containing four Hazmat charts to verify recognition when several charts appear in the same frame. In Figure 8, the four highest similarity scores correspond to “INFECTIOUS SUBSTANCE (Class6)”, “OXIDIZER (Class5)”, “EXPLOSIVE (Class1)”, and “CORROSIVE (Class8)”, all of which were matched with the training images. These results indicate that the proposed method correctly classified and identified all four Hazmat charts in this test. Moreover, the method draws a frame around each Hazmat chart and marks its position based on the density of its matched features.
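How the frames are derived from the density of the features is not detailed, so the sketch below is an assumption: for each class with enough matches, it keeps the matched keypoints within a fixed radius of their median position (the dense cluster) and returns the bounding box of that cluster. The radius and minimum-match values, like the function name, are illustrative and not from the paper.

```python
from statistics import median
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def frame_charts(
    matched: Dict[str, List[Point]], radius: float = 50.0, min_points: int = 3
) -> Dict[str, Box]:
    """Bounding box of the dense cluster of matched keypoints for each class."""
    frames: Dict[str, Box] = {}
    for label, pts in matched.items():
        if len(pts) < min_points:
            continue  # too few matches to trust this class
        # The median is robust to a few stray matches far from the chart.
        mx = median(p[0] for p in pts)
        my = median(p[1] for p in pts)
        dense = [p for p in pts if (p[0] - mx) ** 2 + (p[1] - my) ** 2 <= radius ** 2]
        if len(dense) < min_points:
            continue
        xs = [p[0] for p in dense]
        ys = [p[1] for p in dense]
        frames[label] = (min(xs), min(ys), max(xs), max(ys))
    return frames
```

Because each class is framed independently, several charts in one image each receive their own box, as in the four-chart test.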

Figure 8. Matching four Hazmat charts.

5. CONCLUSION

This paper presented the development of an automatic recognition system for Hazmat marking charts on rescue robots. The results affirm that the proposed method can detect and recognize Hazmat charts in real situations. In future work, we will improve both the speed of the recognition system and its accuracy by using deep learning methods.

Authors Introduction

Dr. Wisanu Jitviriya

He received his PhD from Kyushu Institute of Technology, Japan in 2016. He is a lecturer in the Department of Production Engineering, Faculty of Engineering, King Mongkut’s University of Technology North Bangkok, Thailand.

His main research interests are Robotics and Automation systems.

Mr. Poommitol Chaicherdkiat

He received his bachelor's degree from the Department of Electrical Computer Engineering, Faculty of Engineering, King Mongkut’s University of Technology North Bangkok, Thailand. His main research interests are computer vision and robotic systems.

Mr. Noppadol Pudchuen

He received his master's degree from the Department of Instrumentation and Electronics Engineering, Faculty of Engineering, King Mongkut’s University of Technology North Bangkok, Thailand, in 2017. He is a lecturer in the Faculty of Engineering at King Mongkut’s University of Technology North Bangkok. His main research interests are computer vision and SLAM for robotic systems.

Prof. Dr. Eiji Hayashi

He received his PhD from the Graduate School, Division of Science and Engineering, Waseda University, Japan, in 1994. He is a Professor in the Faculty of Computer Science and Systems Engineering, Kyushu Institute of Technology, Japan. His main research interests are perception information processing and intelligent robotic systems.

REFERENCES

Cite this article

Wisanu Jitviriya, Poommitol Chaicherdkiat, Noppadol Pudchuen, and Eiji Hayashi, “Development of Automation Recognition of Hazmat Marking Chart for Rescue Robot,” Journal of Robotics, Networking and Artificial Life, vol. 5, no. 4, pp. 223–227, 2019 (published 2019/03/30), ISSN 2352-6386, https://doi.org/10.2991/jrnal.k.190220.002